Large Language Model Meta AI (LLaMA)

Large Language Model Meta AI (LLaMA) is a cutting-edge natural language processing model developed by Meta. With up to 65 billion parameters, LLaMA excels at un...

AI-powered data extraction automates data processing, reduces errors, and handles large datasets efficiently. Learn about top tools, methods, and future trends.

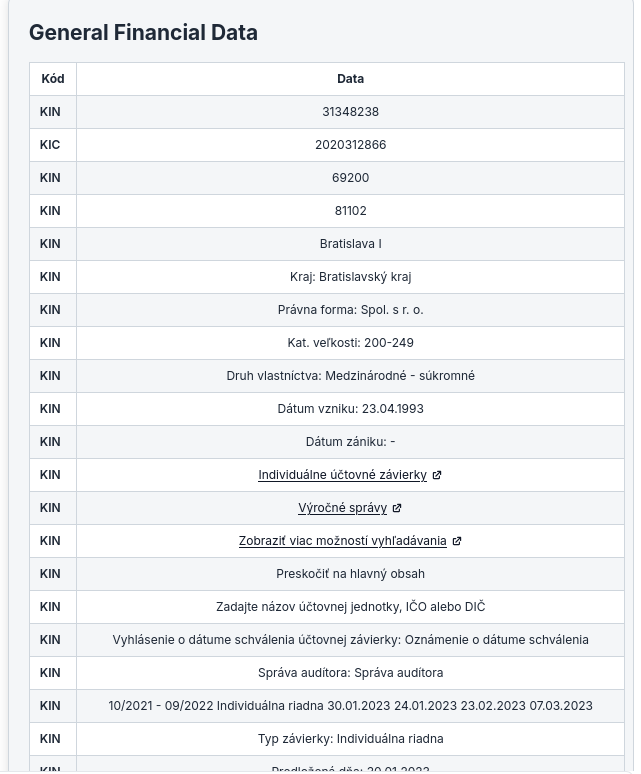

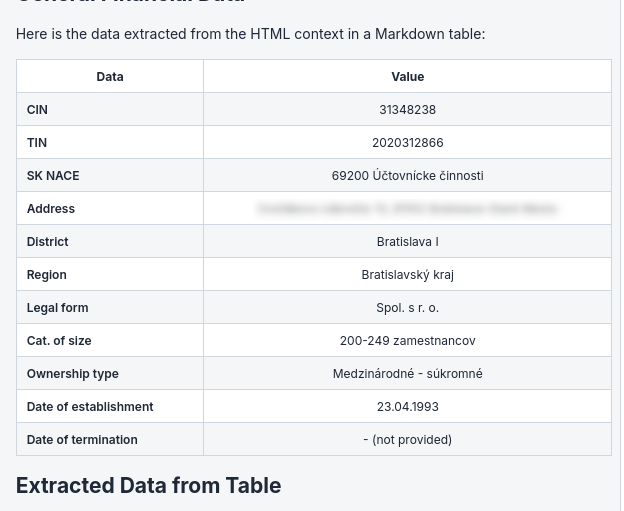

We tested several models on extracting specific data from HTML webpages into structured formats such as Markdown tables. Below, we compare how each one performed.

We evaluated every model with the same prompt: extract the specified data from unstructured HTML and present it as a Markdown table.

Llama 3.2, while innovative in its architecture, struggled to follow the extraction prompt strictly. In our task, it extracted all of the data on the page rather than only the data the prompt specified.
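The failure mode described above, returning every field instead of only the requested ones, is easy to check automatically when comparing models. The following is a minimal Python sketch, not the evaluation code we used: it assumes the model's answer is a pipe-delimited Markdown table whose first row holds the column names, and the requested field names (`Name`, `Price`) and function names are hypothetical.

```python
def parse_header(markdown_table: str) -> list[str]:
    """Return the trimmed cells of the first non-empty row (the header)."""
    first_line = next(line for line in markdown_table.splitlines() if line.strip())
    # Drop the outer pipes, then split on the inner ones.
    return [cell.strip() for cell in first_line.strip().strip("|").split("|")]


def check_field_adherence(markdown_table: str, requested: list[str]) -> dict:
    """Compare the table's columns (case-insensitively) with the fields the prompt asked for."""
    wanted = {field.lower() for field in requested}
    got = {column.lower() for column in parse_header(markdown_table)}
    return {
        "missing": sorted(wanted - got),  # requested but not returned
        "extra": sorted(got - wanted),    # returned but never requested
        "adheres": wanted == got,
    }


output = """| Name | Price | SKU | Description |
|---|---|---|---|
| Widget | 9.99 | W-1 | A widget |"""
print(check_field_adherence(output, ["Name", "Price"]))
# {'missing': [], 'extra': ['description', 'sku'], 'adheres': False}
```

Comparing sets keeps the check independent of column order; if order matters for your downstream parser, compare the header list directly instead.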

The Haiku model from Anthropic stood out in our evaluation. It understood the prompt and carried out the extraction with high fidelity, parsing the HTML content and formatting the extracted data into well-structured Markdown tables. Its ability to maintain context and follow detailed instructions made it particularly effective for this use case.

Although Haiku is Anthropic's smallest model, it outperformed every other model in our evaluation.

While OpenAI models are known for their versatility and language understanding, they fell short in our task of converting HTML to Markdown tables. The main problem was table formatting: the model occasionally produced tables with misaligned columns or inconsistent Markdown syntax, which required manual fixes after extraction. The output also contained many placeholders.
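Formatting problems like these can be flagged before anyone fixes the output by hand. This minimal sketch checks that a Markdown table has a valid separator row, that every row has as many cells as the header, and that no cell looks like a placeholder. The placeholder patterns (bracketed text such as `[Price]`, `...`, `TBD`, the word "placeholder") are assumptions; tune them to what your model actually emits. The sketch also does not handle escaped pipes (`\|`) inside cells.

```python
import re

# Separator cells look like ---, :---, ---:, or :---:
SEPARATOR_CELL = re.compile(r"^:?-+:?$")
# Assumed placeholder patterns; adjust for the model under test.
PLACEHOLDER = re.compile(r"\[[^\]]*\]|\.\.\.|\bTBD\b|\bplaceholder\b", re.IGNORECASE)


def split_row(line: str) -> list[str]:
    """Split a pipe-delimited row into trimmed cells, ignoring the outer pipes."""
    return [cell.strip() for cell in line.strip().strip("|").split("|")]


def validate_markdown_table(text: str) -> list[str]:
    """Return human-readable problems; an empty list means the table looks well-formed."""
    lines = [line for line in text.splitlines() if line.strip()]
    if len(lines) < 2:
        return ["table needs a header row and a separator row"]
    problems = []
    header = split_row(lines[0])
    separator = split_row(lines[1])
    if len(separator) != len(header) or not all(SEPARATOR_CELL.match(c) for c in separator):
        problems.append("row 2 is not a valid separator row")
    # Data rows start at row 3 (1-based, counting non-empty lines).
    for number, line in enumerate(lines[2:], start=3):
        cells = split_row(line)
        if len(cells) != len(header):
            problems.append(f"row {number} has {len(cells)} cells, expected {len(header)}")
        for cell in cells:
            if PLACEHOLDER.search(cell):
                problems.append(f"row {number} contains placeholder {cell!r}")
    return problems


bad = """| Name | Price |
|---|---|
| Widget | 9.99 |
| Gadget |
| [Product Name] | [Price] |"""
for problem in validate_markdown_table(bad):
    print(problem)
# row 4 has 1 cells, expected 2
# row 5 contains placeholder '[Product Name]'
# row 5 contains placeholder '[Price]'
```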

Data extraction methods are crucial for businesses that want to make the most of their data. They vary in complexity and suit different types of data and business needs.

Web scraping gathers data directly from websites, using automated tools or scripts to collect large amounts of data from web pages. It is especially helpful for collecting publicly available information such as prices, product details, or customer reviews. Libraries such as BeautifulSoup (Python) and Cheerio (Node.js) are widely used to parse content from static web pages, and AI-powered scrapers can further automate and improve the process, saving time and effort.
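To illustrate what a scraper does with a static page, here is a minimal sketch that pulls the cells out of an HTML table. In practice BeautifulSoup would normally be used; to stay dependency-free, this version swaps in Python's built-in `html.parser` module, and it assumes a simple page without nested tables. The class and function names are hypothetical.

```python
from html.parser import HTMLParser


class TableCellParser(HTMLParser):
    """Collect the text of every <td>/<th> cell, grouped by <tr> row."""

    def __init__(self):
        super().__init__()
        self.rows: list[list[str]] = []
        self._in_cell = False

    def handle_starttag(self, tag, attrs):
        if tag == "tr":
            self.rows.append([])
        elif tag in ("td", "th"):
            if not self.rows:  # tolerate a cell that appears before any <tr>
                self.rows.append([])
            self.rows[-1].append("")
            self._in_cell = True

    def handle_endtag(self, tag):
        if tag in ("td", "th") and self._in_cell:
            # Collapse whitespace left over from nested tags and line breaks.
            self.rows[-1][-1] = " ".join(self.rows[-1][-1].split())
            self._in_cell = False

    def handle_data(self, data):
        if self._in_cell:
            self.rows[-1][-1] += data


def scrape_table(html: str) -> list[list[str]]:
    """Return the non-empty rows of every table in the HTML string."""
    parser = TableCellParser()
    parser.feed(html)
    parser.close()
    return [row for row in parser.rows if row]


page = """<table>
  <tr><th>Product</th><th>Price</th></tr>
  <tr><td>Blue <b>Widget</b></td><td>$9.99</td></tr>
</table>"""
print(scrape_table(page))  # [['Product', 'Price'], ['Blue Widget', '$9.99']]
```

For real pages, fetch the HTML first (and respect the site's terms and `robots.txt`); the parsing step stays the same.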

Text extraction pulls specific information out of sources that are mostly text, which makes it important for working with documents, emails, and other text-heavy formats. Advanced techniques can find patterns or entities, such as names, dates, and financial figures, in unstructured text. Machine learning models often assist this process, and their accuracy can improve as they are trained on more data.
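As a simple illustration of pattern-based entity extraction, the sketch below uses regular expressions rather than a machine learning model. The three patterns (ISO dates, US-dollar amounts, email addresses) are assumptions chosen for the example and will miss many real-world formats; ML-based extractors exist precisely to handle that variety.

```python
import re

# Illustrative rule-based patterns; real documents need far broader coverage.
PATTERNS = {
    "date": re.compile(r"\b\d{4}-\d{2}-\d{2}\b"),             # ISO dates like 2024-03-15
    "amount": re.compile(r"\$\d+(?:,\d{3})*(?:\.\d{2})?"),     # dollar amounts like $1,250.00
    "email": re.compile(r"\b[\w.+-]+@[\w-]+(?:\.[\w-]+)+\b"),  # simple email addresses
}


def extract_entities(text: str) -> dict[str, list[str]]:
    """Return every match for each pattern, in order of appearance."""
    return {name: pattern.findall(text) for name, pattern in PATTERNS.items()}


note = "Invoice dated 2024-03-15 for $1,250.00; send questions to billing@example.com."
print(extract_entities(note))
# {'date': ['2024-03-15'], 'amount': ['$1,250.00'], 'email': ['billing@example.com']}
```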

API tools make data extraction easier by offering a structured way to access data from external sources. Through APIs, businesses can retrieve data from services such as social media platforms, databases, and cloud applications securely and efficiently. This approach is well suited to integrating real-time data into business applications, keeping data flowing smoothly and information up to date.
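Many APIs return large result sets in pages, so extraction code usually loops until the API reports there is nothing left. This sketch assumes a hypothetical cursor-based response shape, `{"items": [...], "next_cursor": ...}`; real services name these fields differently, so check the provider's documentation. The HTTP call is injected as a function, which keeps the loop testable without a network.

```python
from typing import Any, Callable, Iterator, Optional

# Assumed (hypothetical) page shape: {"items": [...], "next_cursor": "abc" or None}
Page = dict[str, Any]


def iter_records(fetch_page: Callable[[Optional[str]], Page]) -> Iterator[Any]:
    """Yield every record from a cursor-paginated API until no cursor is returned."""
    cursor: Optional[str] = None
    while True:
        page = fetch_page(cursor)
        yield from page.get("items", [])
        cursor = page.get("next_cursor")
        if not cursor:  # missing or empty cursor means this was the last page
            break


# A fake two-page API standing in for real HTTP responses.
FAKE_PAGES = {
    None: {"items": [{"id": 1}, {"id": 2}], "next_cursor": "p2"},
    "p2": {"items": [{"id": 3}], "next_cursor": None},
}
records = list(iter_records(lambda cursor: FAKE_PAGES[cursor]))
print([r["id"] for r in records])  # [1, 2, 3]
```

In production, `fetch_page` would wrap an authenticated HTTP request that passes the cursor as a query parameter.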

Data mining is about analyzing large sets of data to uncover patterns, correlations, and insights that aren’t immediately obvious. This method is invaluable for businesses that want to optimize processes, predict trends, or understand customer behavior better. Data mining techniques can be used on both structured and unstructured data, making them versatile tools for strategic decision-making.
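One classic data mining technique is market-basket analysis, which looks for items that frequently occur together. The sketch below implements only its first step, counting the support of item pairs (the share of transactions containing both items); a full algorithm such as Apriori adds larger itemsets and pruning. The order data is made up for illustration.

```python
from collections import Counter
from itertools import combinations


def frequent_pairs(transactions: list[set[str]], min_support: float) -> dict[tuple[str, str], float]:
    """Return item pairs that appear together in at least `min_support` of transactions."""
    counts: Counter = Counter()
    for basket in transactions:
        # sorted() gives each pair a canonical order, so ("a", "b") and ("b", "a") count once
        for pair in combinations(sorted(basket), 2):
            counts[pair] += 1
    total = len(transactions)
    return {pair: n / total for pair, n in counts.items() if n / total >= min_support}


orders = [
    {"bread", "butter", "milk"},
    {"bread", "butter"},
    {"milk", "cereal"},
    {"bread", "butter", "cereal"},
]
print(frequent_pairs(orders, min_support=0.5))  # {('bread', 'butter'): 0.75}
```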

OCR (Optical Character Recognition) converts images of text, such as scanned printed documents or handwritten notes, into machine-readable text that can be edited and searched. This method is particularly useful for digitizing paper-based information, helping businesses streamline document management and improve access to data. Modern OCR engines offer high accuracy and speed when converting physical documents into digital formats.

Adding these data extraction methods to a business's workflows can significantly boost its data processing capabilities, leading to better decision-making and improved operational efficiency. By choosing the right method or combination of methods, businesses can make the most of their data.

Docsumo is a document processing and data extraction tool designed to automate data entry by extracting information from various types of documents. Using intelligent OCR technology, it significantly reduces the time and effort required for manual data entry, making it a valuable asset in industries such as finance, healthcare, and insurance.

Pros:

Cons:

Target Audience: The ideal users for Docsumo include:

Recommendations:

We recommend Docsumo to businesses that handle large volumes of documents and require reliable data extraction capabilities. Its automation features enhance efficiency and accuracy, making it an indispensable tool for various sectors.

Hevo Data is a comprehensive data integration platform that enables businesses to consolidate and integrate data from multiple sources into a single, unified view. The platform is designed with a user-friendly interface, allowing users to set up data pipelines without the need for any coding skills. This accessibility makes it an ideal solution for companies looking to leverage their data for analytics and reporting purposes. Hevo Data supports various data sources, including databases, cloud storage, and SaaS applications, allowing organizations to streamline their data workflows and enhance their decision-making capabilities.

Hevo Data has received positive feedback from users for its ease of use, real-time capabilities, and robust integration features. Many users appreciate the platform’s no-code approach, which enables teams to set up data pipelines quickly without requiring extensive technical knowledge. The real-time data replication feature has also been highlighted as a significant advantage for businesses that rely on up-to-date information for decision-making. However, some users have mentioned that there is a learning curve when it comes to more advanced features.

Hevo Data is highly recommended for small to medium-sized businesses looking to streamline their data integration processes without the need for extensive technical resources. It is particularly suitable for teams that require real-time data analytics and reporting capabilities. Businesses in sectors such as e-commerce, finance, and marketing can benefit significantly from using Hevo Data to consolidate their data for informed decision-making. Overall, Hevo Data is an excellent choice for organizations seeking a reliable and user-friendly data integration solution.

Airbyte is an open-source data integration platform designed to help businesses synchronize their data across various systems efficiently. It facilitates the building of ELT (Extract, Load, Transform) data pipelines that connect different sources and destinations, enabling seamless data transfer and reporting. Founded in January 2020, Airbyte aims to simplify data integration by providing a no-code tool that allows users to connect various systems without extensive engineering resources. With over 400 connectors available, Airbyte has quickly gained traction in the market, raising significant funding since its inception.
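The difference between ELT and the older ETL pattern is where the transformation happens: in ELT, raw data is loaded into the destination first and reshaped there. The sketch below shows that order with Python's built-in `sqlite3` module standing in for a warehouse. It is not Airbyte's API or configuration; the table names and source rows are hypothetical.

```python
import sqlite3

# Hypothetical source records, standing in for rows pulled from a SaaS API or database.
SOURCE_ROWS = [
    ("2024-01-05", "EU", "19.99"),
    ("2024-01-05", "US", "5.00"),
    ("2024-01-06", "EU", "10.01"),
]


def run_elt(conn: sqlite3.Connection) -> list[tuple]:
    # Extract + Load: copy the raw rows as-is (amounts are still stored as text).
    conn.execute("CREATE TABLE raw_orders (order_date TEXT, region TEXT, amount TEXT)")
    conn.executemany("INSERT INTO raw_orders VALUES (?, ?, ?)", SOURCE_ROWS)
    # Transform: reshape inside the destination, after loading, using SQL.
    conn.execute(
        """
        CREATE TABLE revenue_by_region AS
        SELECT region, ROUND(SUM(CAST(amount AS REAL)), 2) AS revenue
        FROM raw_orders
        GROUP BY region
        """
    )
    return conn.execute("SELECT region, revenue FROM revenue_by_region ORDER BY region").fetchall()


print(run_elt(sqlite3.connect(":memory:")))  # [('EU', 30.0), ('US', 5.0)]
```

Keeping the raw table means the transformation can be rewritten and rerun later without extracting from the source again, which is one of the usual arguments for ELT.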

Positive Feedback:

Users appreciate the ease of use, extensive integrations, open-source nature, and customer support. Many find the platform user-friendly, enabling quick setup of data pipelines.

Criticisms:

Some users report performance issues with large data volumes and mention the need for improved documentation. Others feel that while effective for basic integration, advanced features are lacking.

Airbyte is particularly suitable for:

In conclusion, Airbyte presents a robust solution for a wide range of users looking to enhance their data integration processes. Its open-source model, extensive features, and community support make it an attractive choice for businesses aiming to leverage their data effectively.

Import.io is a web data integration platform that enables users to extract, transform, and load data from the web into usable formats. The product is designed to help businesses gather data from various online sources for analysis and decision-making. Import.io provides a SaaS solution that converts complex web data into structured formats such as JSON, CSV, or Google Sheets. This functionality is crucial for businesses that rely on data for competitive intelligence, market analysis, and strategic planning. The platform is built to handle challenges associated with web data extraction, including navigating CAPTCHAs, logins, and varying website structures.
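Converting extracted records into JSON and CSV is straightforward with Python's standard library, as this minimal sketch shows. It is not Import.io's API; the records and function names are hypothetical. A CSV file produced this way can be imported into Google Sheets.

```python
import csv
import io
import json

# Hypothetical records, as a web extractor might return them.
records = [
    {"product": "Widget", "price": 9.99, "in_stock": True},
    {"product": "Gadget, large", "price": 24.5, "in_stock": False},
]


def to_json(rows: list[dict]) -> str:
    return json.dumps(rows, indent=2)


def to_csv(rows: list[dict]) -> str:
    if not rows:
        return ""
    buffer = io.StringIO()
    # Column order follows the keys of the first record.
    writer = csv.DictWriter(buffer, fieldnames=list(rows[0]), lineterminator="\n")
    writer.writeheader()
    writer.writerows(rows)  # the csv module quotes "Gadget, large" because it contains a comma
    return buffer.getvalue()


print(to_csv(records))
print(to_json(records))
```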

Positive Reviews:

Negative Reviews:

Import.io is an excellent choice for marketing teams, e-commerce businesses, data analysts, and researchers looking to streamline their data collection processes without extensive technical expertise. Its user-friendly interface and robust features make it suitable for a wide range of applications, from competitive analysis to market research and social media monitoring. Import.io stands out for its ability to provide accessible, actionable web data while saving time and reducing operational costs.

This comprehensive report should provide potential users with all necessary information to evaluate Import.io as a solution for their web data extraction needs.

Looking ahead, several emerging trends are set to reshape data extraction. AI-based models are leading the way, using machine learning to improve accuracy and efficiency. Edge analytics processes data where it is generated, which cuts latency and reduces the amount of data that must be transferred. Data is also becoming more accessible: AI tools are lowering technical barriers so that more people across an organization can reach important insights. Finally, there is a growing focus on ethical data practices, ensuring that extraction is transparent and respects privacy. As these trends develop, staying informed and adaptable will be key to using data extraction as a strategic advantage.

AI-powered data extraction increases efficiency by automating data processing, reduces manual errors, and can handle large datasets, allowing businesses to allocate resources to more strategic tasks.

Leading models include Anthropic's Haiku, which excels at structured extraction from HTML, as well as models from OpenAI and Meta's Llama 3.2; in our evaluation, Anthropic's model adhered best to structured extraction prompts.

Common methods include web scraping, text extraction, API integration, data mining, and OCR (Optical Character Recognition), each suited for specific data types and business needs.

Top tools include Docsumo for document processing with OCR, Hevo Data and Airbyte for no-code data integration, and Import.io for web data extraction and transformation.

Key trends include the rise of AI and machine learning for improved accuracy, edge analytics for faster processing, greater data accessibility across organizations, and a focus on ethical and privacy-conscious data practices.
