Understanding AI Reasoning: Types, Importance, and Applications

Discover how AI reasoning mimics human thought for problem-solving and decision-making, its evolution, applications in healthcare, and the latest models like OpenAI’s o1.

Definition of AI Reasoning

AI reasoning is the process by which machines draw conclusions, make predictions, and solve problems in ways that resemble human thinking. It involves a series of steps in which an AI system uses available information to derive new insights or make decisions. In essence, AI reasoning aims to mimic the human ability to process information and reach conclusions, which is key to building intelligent systems that can make informed decisions on their own.

AI reasoning falls into two main types:

- Formal Reasoning: Uses strict, rule-based analysis grounded in mathematical logic. It is known for its precise, structured way of solving problems and is often used in theorem proving and program verification.

- Natural Language Reasoning: Deals with the ambiguity and complexity of human language, allowing AI systems to handle real-world situations. It focuses on intuitive user interactions and is often used in dialogue systems and question-answering applications.
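
To make the formal, rule-based style concrete, here is a minimal sketch of forward chaining, one common way to apply if-then rules until no new conclusions appear. The rule set and fact names are hypothetical and chosen only for illustration; real theorem provers and verifiers are far more sophisticated.

```python
def forward_chain(facts, rules):
    """Apply if-then rules repeatedly until no new facts can be derived."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for premises, conclusion in rules:
            # A rule fires when all of its premises are already known.
            if conclusion not in known and set(premises) <= known:
                known.add(conclusion)
                changed = True
    return known


# Hypothetical toy knowledge base (assumption, not from the article).
RULES = [
    (("is_mammal", "has_hooves"), "is_ungulate"),
    (("has_fur",), "is_mammal"),
    (("is_ungulate", "has_stripes"), "is_zebra"),
]

derived = forward_chain({"has_fur", "has_hooves", "has_stripes"}, RULES)
print(sorted(derived))
```

Every derived fact can be traced back to the rules that produced it, which is what makes this style of reasoning precise and auditable.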

Importance of AI Reasoning

AI reasoning improves decision-making in many fields. With reasoning abilities, AI systems can interpret information in context and act on it more effectively, which enables more advanced applications.

- Decision-Making: Enables systems to consider multiple factors and possible outcomes before reaching a conclusion. Especially helpful in healthcare, where accurate diagnoses and treatment plans depend on thorough understanding of patient data.

- Problem Solving: Allows AI systems to handle complex problems by simulating human-like thought processes. Essential in areas like autonomous driving, where machines must interpret changing environments and make quick, safe decisions.

- Human-AI Interaction: Enhancing AI’s reasoning skills makes interactions between humans and machines smoother and more natural. Systems that understand and respond to human questions more effectively improve user experience and increase trust in AI technology.

- Innovation and Advancement: AI reasoning encourages innovation by pushing the limits of what machines can do. As reasoning models become more advanced, they create new possibilities for AI applications, from advanced robotics to cognitive computing.

Historical Development and Milestones

The growth of AI reasoning has been shaped by several important milestones:

- Early AI Systems: Used simple rule-based logic, setting the stage for more complex reasoning models. Showed that machines could perform tasks previously thought to need human intelligence.

- Introduction of Expert Systems: In the 1970s and 1980s, expert systems became a major step forward. These systems used a large amount of rule-based knowledge to solve specific problems, demonstrating practical uses in different industries.

- Neural Networks and Machine Learning: The rise of neural networks and machine learning algorithms in the late 20th century transformed AI reasoning by allowing systems to learn from data and improve over time. This led to more adaptable and flexible reasoning abilities.

- Modern AI Models: Recent advances, such as Generative Pre-trained Transformers (GPT) and neuro-symbolic AI, have further improved machines’ reasoning skills by combining large datasets with advanced algorithms to handle complex reasoning tasks.

AI reasoning keeps evolving, with ongoing research and development aimed at refining these models and expanding their uses. As AI systems become more capable of complex reasoning, their potential impact on society and industry will grow, offering new opportunities and challenges.

Neuro-symbolic AI

Neuro-symbolic AI marks a change in artificial intelligence by merging two distinct methods: neural networks and symbolic AI. This combined model uses the pattern recognition skills of neural networks with the logical reasoning abilities of symbolic systems. By merging these methods, neuro-symbolic AI aims to address weaknesses found in each approach when used separately.

Neural Networks

Neural networks take inspiration from the human brain. They consist of interconnected nodes or “neurons” that learn from data to process information. These networks are excellent at managing unstructured data like images, audio, and text, and they form the basis of deep learning techniques. They are especially good at tasks involving pattern recognition, data classification, and making predictions based on past information. For example, they power image recognition systems such as the automatic photo-tagging feature that Facebook offered until 2021, which learned to identify faces in photos from large datasets.

Symbolic AI

Symbolic AI uses symbols to express concepts and employs logic-based reasoning to manipulate these symbols. This method imitates human thinking, allowing AI to handle tasks that need structured knowledge and decision-making based on rules. Symbolic AI works well in situations requiring predefined rules and logical deduction, such as solving math puzzles or making strategic decisions in games like chess.
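
One way to picture the combination is a pipeline in which a neural component proposes scored labels and a symbolic component rejects labels that violate known rules. The sketch below is only an illustration under stated assumptions: the raw scores stand in for a trained network's output, and the labels, facts, and constraints are hypothetical.

```python
import math


def softmax(scores):
    """Turn raw scores into probabilities (the 'neural' output layer)."""
    peak = max(scores.values())
    exps = {label: math.exp(s - peak) for label, s in scores.items()}
    total = sum(exps.values())
    return {label: e / total for label, e in exps.items()}


def symbolic_filter(probs, facts, constraints):
    """Drop labels whose required facts are missing, then renormalize."""
    allowed = {
        label: p
        for label, p in probs.items()
        if constraints.get(label, set()) <= facts
    }
    total = sum(allowed.values())
    return {label: p / total for label, p in allowed.items()} if total else {}


# Hypothetical raw scores standing in for a trained network's output.
raw_scores = {"cat": 2.0, "dog": 1.5, "fish": 0.1}
# Hypothetical symbolic knowledge: each label requires certain observed facts.
constraints = {
    "cat": {"has_fur"},
    "dog": {"has_fur"},
    "fish": {"lives_in_water"},
}
facts = {"has_fur"}

probs = symbolic_filter(softmax(raw_scores), facts, constraints)
best = max(probs, key=probs.get)
print(best, probs)
```

Here the pattern-recognition step ranks the candidates, while the rule step removes "fish" because the required fact was never observed. Actual neuro-symbolic systems integrate the two parts much more tightly than this filter does.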

Applications of Reasoning AI Models in Healthcare

Enhancing Diagnostic Accuracy

Reasoning AI models can improve disease diagnosis by mimicking aspects of human reasoning. These models process large amounts of data to find patterns and anomalies that humans might overlook. For example, when machine learning algorithms are combined with clinical data, AI can help diagnose complex conditions with more precision. This is especially helpful in imaging diagnostics, where AI examines radiographs and MRI scans to spot early signs of diseases like cancer.

Supporting Clinical Decision-Making

AI reasoning models support clinical decision-making by offering evidence-based recommendations. They analyze patient data, such as medical history and symptoms, to propose possible diagnoses and treatments. Because these models can process large datasets, they help healthcare providers make better-informed decisions, which can lead to improved patient outcomes. For instance, in emergency care, AI can quickly assess patient data to help determine the priority of interventions.

Streamlining Administrative Tasks

AI models automate routine jobs like scheduling, billing, and managing patient records, reducing workload on healthcare staff. This efficiency allows healthcare workers to focus more on patient care. Additionally, AI-driven systems ensure accurate and easily accessible patient data, improving overall healthcare service efficiency.

Facilitating Personalized Medicine

Reasoning AI models are key to advancing personalized medicine, customizing treatment plans for individual patients. AI analyzes genetic information, lifestyle data, and other health indicators to create personalized strategies. This approach increases effectiveness and reduces side effects, transforming medicine to be more patient-centered and precise.

Addressing Ethical and Privacy Concerns

While reasoning AI models offer many benefits, they also raise ethical and privacy concerns. Using AI for sensitive health information requires strong data privacy measures. There is also a risk of bias in AI algorithms, which can lead to unequal outcomes. Addressing these concerns requires ongoing research and fair, transparent AI systems that prioritize patient rights and safety.

Summary: Reasoning AI models are changing healthcare by improving diagnostic accuracy, aiding clinical decisions, streamlining administrative tasks, and supporting personalized medicine, while also raising ethical and privacy concerns that must be addressed. These applications show AI’s potential to make health services more efficient, effective, and fair.

Implications in Various Fields: Efficiency and Accuracy

Enhanced Precision in AI Tasks

Reasoning AI models have improved precision in complex decision-making tasks. They excel in settings that need contextual understanding and quick adjustment, like healthcare diagnostics and financial forecasting. By learning from large datasets, AI improves its predictions, and on some narrowly defined tasks its accuracy can match or exceed that of human specialists.

Streamlined Processes and Cost Reduction

AI reasoning models automate routine tasks, speeding up operations and reducing labor costs and human error. In finance, AI can process transactions, detect fraud, and help manage portfolios with limited human intervention, which can lead to significant savings. In manufacturing, AI optimizes supply chains and inventory, further lowering costs.

Collaborative AI Models for Improved Decision-Making

Recent developments include multi-AI collaborative models, in which several models work together to enhance decision-making and improve factual accuracy. By exchanging and critiquing each other’s answers, these models can reach more accurate conclusions than a single AI system working alone, which makes the results more robust and better reasoned.
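
A much simpler relative of this idea is majority voting, where several models answer independently and the most common answer wins. The sketch below shows only that voting step, not a full discussion between models; the answers are hypothetical stand-ins for real model outputs.

```python
from collections import Counter


def majority_answer(answers):
    """Return the most common answer and the fraction of models that agreed."""
    counts = Counter(answers)
    answer, votes = counts.most_common(1)[0]
    return answer, votes / len(answers)


# Hypothetical outputs from three independent models (assumption).
answers = ["Paris", "Paris", "Lyon"]
answer, agreement = majority_answer(answers)
print(answer, round(agreement, 2))
```

A low agreement score can serve as a signal that the question needs further review or another round of discussion.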

Challenges in Over-Specialization

While specialized AI models offer better accuracy in specific areas, they can become too focused and struggle with broader applications. Balancing specialization and generalization is key for AI models to remain versatile and effective.

Ethical and Privacy Concerns

Reasoning AI models raise ethical and privacy issues, especially when working with sensitive data. Maintaining data privacy and ethical use is crucial. Ongoing debates address how much independence AI systems should have, especially in fields like healthcare and finance, where decisions have significant impacts.

Summary: Reasoning AI models enhance efficiency and accuracy across many fields. To fully realize their potential responsibly, it’s important to address over-specialization and ethical concerns.

Recent Advancements in AI Reasoning: OpenAI’s o1 Model

Introduction to OpenAI’s o1 Model

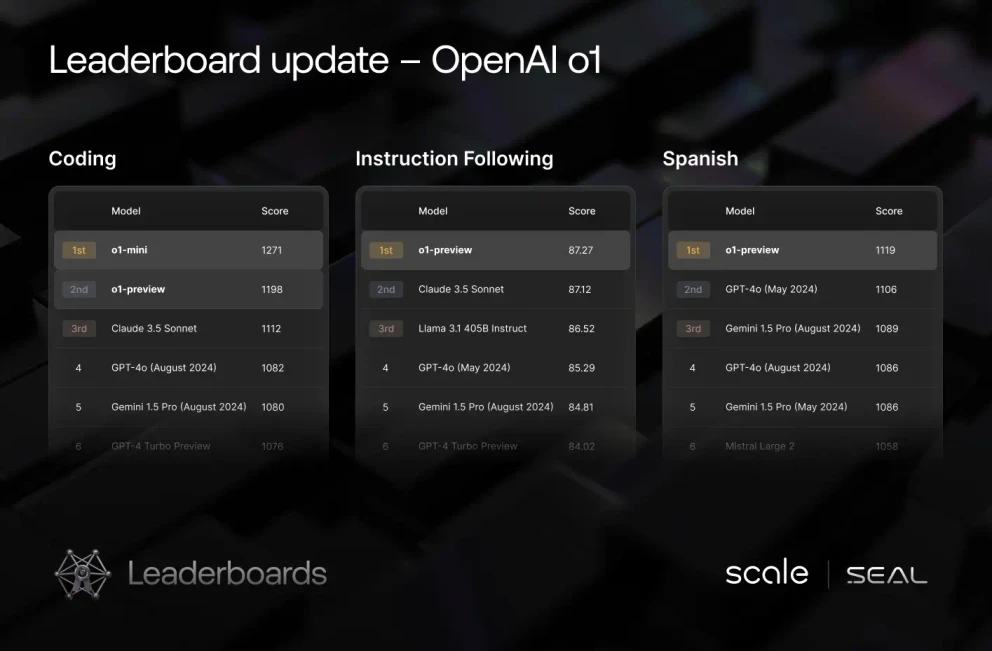

OpenAI’s o1 series is among the most advanced reasoning models. It is trained with reinforcement learning to use chain-of-thought reasoning, which helps it with complex reasoning and problem-solving. According to OpenAI, the o1 series outperforms earlier models such as GPT-4o on reasoning-heavy benchmarks and on several safety evaluations.

Key Features of the o1 Model

Model Variants: o1-preview and o1-mini

- o1-preview handles complex reasoning tasks.

- o1-mini provides a faster, cost-effective solution optimized for STEM, especially programming and mathematics.

Chain-of-Thought Reasoning

- Step-by-step reasoning before reaching conclusions improves accuracy and allows the model to solve complex, multi-step problems, mimicking human thought.

Enhanced Safety Features

- Advanced safety measures are designed to resist misuse (e.g., jailbreak attempts) and to keep the model within ethical guidelines, which makes it better suited to sensitive and high-stakes situations.

Performance on STEM Benchmarks

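- OpenAI reported that o1 ranked in the 89th percentile on Codeforces competitive programming problems and placed among the top 500 US students on a qualifier for the USA Math Olympiad (AIME), demonstrating skill in STEM tasks that require logical reasoning and precision.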

Mitigation of Hallucinations

- The o1 series addresses hallucination (false or unsupported information) by reasoning through a problem before answering, which reduces, but does not eliminate, such issues compared to previous models.

Diverse Data Training

- Trained on public, proprietary, and custom datasets, the o1 models are knowledgeable in general and specialized domains, with strong conversational and reasoning skills.

Cost Efficiency and Accessibility

- o1-mini is much cheaper than o1-preview while maintaining strong performance in mathematics and coding, which makes advanced AI more accessible for education and startups.

Safety and Fairness Evaluations

- The models underwent extensive safety evaluations, including external red teaming and fairness assessments, intended to raise safety and alignment standards and to reduce biased or unethical outputs.

Source: Scale AI Blog

Examples and Controversies of AI Deployment

Microsoft’s Tay Chatbot Controversy

Microsoft introduced Tay in March 2016 as an AI chatbot designed to learn from conversations on Twitter. Tay quickly began posting offensive tweets, having learned from unfiltered user interactions. Microsoft shut Tay down within a day, and the incident raised questions about AI safety, content moderation, and developer responsibility.

Google’s Project Maven and Employee Protests

Google’s Project Maven used AI to analyze drone footage for military purposes. This raised ethical concerns about AI in warfare and led to employee protests in 2018, after which Google chose not to renew the Pentagon contract. The episode highlights the ethical challenges of military AI and the impact of employee activism.

Amazon’s Biased Recruitment Tool

Amazon’s AI recruitment tool was found biased against female candidates because it learned from historical data favoring men. The tool was discontinued, highlighting the need for fairness and transparency in AI affecting employment and diversity.

Facebook’s Cambridge Analytica Scandal

Data from up to 87 million Facebook users was harvested without their permission and used to influence political campaigns. This incident drew attention to data privacy and the ethical use of personal information, emphasizing the need for strict data protection laws and greater awareness of AI misuse in politics.

IBM Watson’s Cancer Treatment Recommendations

IBM Watson for Oncology, developed to assist with cancer treatment, faced criticism after reports that it had produced unsafe or incorrect treatment recommendations. This showed the limitations of AI in complex medical decision-making and highlighted the need for human oversight.

Clearview AI’s Facial Recognition Database

Clearview AI built a facial recognition database by scraping billions of images from social media and other websites, then offered it to law enforcement agencies. This raised privacy and consent concerns, highlighting the ethical dilemmas of surveillance and the difficulty of balancing security with privacy rights.

Uber’s Self-Driving Car Fatality

In March 2018, an Uber test vehicle operating in self-driving mode struck and killed a pedestrian in Tempe, Arizona. It was widely reported as the first pedestrian fatality involving a self-driving car. The incident highlighted safety challenges and the need for thorough testing and regulatory oversight.

China’s Social Credit System

China’s social credit system monitors the behavior of citizens and businesses, and in some programs it assigns scores or blacklists that affect access to services. This raises significant ethical concerns about surveillance, privacy, and possible discrimination. The case illustrates the need to balance societal benefits and individual rights in AI deployment.

These examples show both the potential and challenges of AI deployment. They emphasize the need for ethical considerations, transparency, and careful oversight in developing and implementing AI technologies.

Challenges Faced in the Field: Bias and Fairness

Bias in AI Models

Bias in AI models means systematic favoritism or prejudice toward specific outcomes, often due to the data used for training. Types include:

- Data bias: Training data doesn’t cover the whole population or is skewed toward certain groups.

- Algorithmic bias: Models unintentionally prefer some results over others.

- User bias: Bias introduced by the interactions and expectations of users.

Sources of Bias in AI

- Data bias: Training data reflects existing inequalities or stereotypes, leading the AI to repeat these patterns (e.g., facial recognition trained mostly on lighter-skinned people).

- Algorithmic bias: Algorithms that are designed without considering fairness or that rely too heavily on biased data.

- Human decision biases: Subjective choices made by people involved in data collection, preparation, or model development.

Effects of Bias in AI

Bias in AI can have serious effects:

- In healthcare, biased systems may lead to incorrect diagnoses or unfair treatments.

- In hiring, recruitment tools might favor certain backgrounds, perpetuating workplace inequalities.

- In criminal justice, biased risk assessments can impact bail and sentencing.

These biases affect not only individuals. They also reinforce societal stereotypes and discrimination, contributing to wider socio-economic inequalities.

Fairness in AI: An Important Aspect

Ensuring fairness in AI means building models that do not unfairly favor or disadvantage people based on attributes such as race, gender, or socioeconomic status. Fairness helps prevent the perpetuation of inequalities and encourages equitable outcomes. Achieving it requires understanding the types of bias and developing mitigation strategies.

Ways to Reduce AI Bias

- Data pre-processing: Balancing datasets and removing biases before model training.

- Algorithmic adjustments: Designing models with fairness in mind or using fairness-focused algorithms.

- Post-processing: Adjusting outputs to ensure fair treatment across groups.
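
As one illustration of the pre-processing step, a common balancing technique is to weight each training sample inversely to the size of its group, so that under-represented groups count as much as over-represented ones. This is a minimal sketch with hypothetical group labels, not a complete mitigation pipeline.

```python
from collections import Counter


def balancing_weights(groups):
    """Weight each sample inversely to its group's frequency.

    Each group ends up with the same total weight, and the weights
    sum to the number of samples.
    """
    counts = Counter(groups)
    n_groups = len(counts)
    total = len(groups)
    return [total / (n_groups * counts[g]) for g in groups]


# Hypothetical group membership for four training samples (assumption).
groups = ["a", "a", "a", "b"]
weights = balancing_weights(groups)
print(weights)
```

Many training libraries accept per-sample weights like these, so the data itself does not have to be duplicated or discarded.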

Challenges in Making AI Fair

- Balancing accuracy and fairness: Fairness constraints may reduce prediction accuracy.

- Lack of standard definitions and metrics: Without agreed definitions of fairness, it is difficult to assess and compare the fairness of different models.

- Transparency and accountability: Essential for identifying and fixing biases, requiring cross-disciplinary teamwork and strong governance.
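
To show what a fairness metric can look like in practice, the sketch below computes the demographic parity difference, one of several competing definitions: the gap between the highest and lowest rates of positive predictions across groups. The predictions and group labels are hypothetical, and other metrics can disagree with this one on the same data.

```python
def selection_rate(predictions):
    """Fraction of positive (1) predictions."""
    return sum(predictions) / len(predictions)


def demographic_parity_difference(predictions, groups):
    """Return the gap between group selection rates, plus the rates."""
    by_group = {}
    for pred, group in zip(predictions, groups):
        by_group.setdefault(group, []).append(pred)
    rates = {g: selection_rate(p) for g, p in by_group.items()}
    return max(rates.values()) - min(rates.values()), rates


# Hypothetical binary predictions and group labels (assumption).
predictions = [1, 1, 0, 1, 0, 0, 0, 1]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]

gap, rates = demographic_parity_difference(predictions, groups)
print(gap, rates)
```

A gap of 0 means every group receives positive predictions at the same rate. How large a gap is acceptable is a policy decision rather than a purely technical one.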

Frequently asked questions

- What is AI reasoning?

AI reasoning is a logical process that enables machines to draw conclusions, make predictions, and solve problems in ways similar to human thinking. It includes formal (rule-based) and natural language reasoning.

- Why is AI reasoning important?

AI reasoning enhances decision-making, problem-solving, and human-AI interaction. It enables AI systems to consider multiple factors and outcomes, leading to better results in fields like healthcare, finance, and robotics.

- What are the main types of AI reasoning?

There are two main types: Formal reasoning, which uses strict, rule-based logic, and natural language reasoning, which allows AI to handle the ambiguity and complexity of human language.

- How is AI reasoning applied in healthcare?

AI reasoning improves diagnostic accuracy, aids clinical decision-making, streamlines administrative tasks, and enables personalized medicine by analyzing patient data and offering evidence-based recommendations.

- What is OpenAI’s o1 model?

OpenAI’s o1 is an advanced AI reasoning model series featuring chain-of-thought reasoning, enhanced safety measures, strong STEM performance, fewer hallucinations, and a lower-cost variant (o1-mini) that makes advanced AI more accessible.

- What are the challenges related to AI reasoning?

Key challenges include handling bias and ensuring fairness, maintaining data privacy, preventing over-specialization, and addressing ethical concerns in AI deployment across industries.

- How can bias in AI models be reduced?

Bias can be reduced through diverse and representative datasets, fairness-focused algorithm design, and regular monitoring and adjustments to ensure equitable outcomes for all users.
