Gemini 2.0 Thinking: Performance Analysis Across Key Tasks

A comprehensive evaluation of Gemini 2.0 Thinking, Google’s experimental AI model, focusing on its performance, reasoning transparency, and practical applications across core task types.

Our evaluation methodology involved testing Gemini 2.0 Thinking on five representative task types: content generation, calculation, summarization, comparison, and creative/analytical writing.

For each task, we measured metrics including processing time, output quality, and reasoning visibility.

Task Description: Generate a comprehensive article about project management fundamentals, focusing on defining objectives, scope, and delegation.

Performance Analysis:

Gemini 2.0 Thinking’s visible reasoning process is noteworthy. The model demonstrated a systematic, multi-stage research and synthesis approach across two task variants:

Information Processing Strengths:

Efficiency Metrics:

Performance Rating: 9/10

The content generation performance earns a high rating due to the model’s ability to:

The main strength of the Thinking version is the visibility into its research approach, showing the specific tools used at each stage, though explicit reasoning statements were inconsistently displayed.

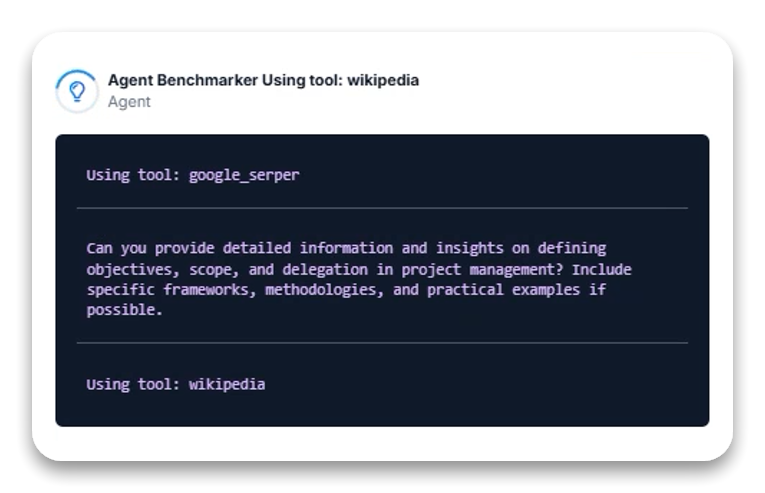

Task Description: Solve a multi-part business calculation problem involving revenue, profit, and optimization.

Performance Analysis:

Across both task variants, the model demonstrated strong mathematical reasoning capabilities:

Mathematical Processing Strengths:

Efficiency Metrics:

Performance Rating: 9.5/10

The calculation performance earns an excellent rating based on:

The “Thinking” capability was particularly valuable in the first variant, where the model explicitly outlined its assumptions and optimization strategy, offering transparency into its decision-making process that would be missing in standard models.

Task Description: Summarize key findings from an article on AI reasoning in 100 words.

Performance Analysis:

The model demonstrated remarkable efficiency in text summarization across both task variants:

Summarization Strengths:

Efficiency Metrics:

Performance Rating: 10/10

The summarization performance earns a perfect rating due to:

Interestingly, for this task, the “Thinking” feature didn’t display explicit reasoning, suggesting the model might engage different cognitive pathways for different tasks, with summarization potentially being more intuitive than stepwise.

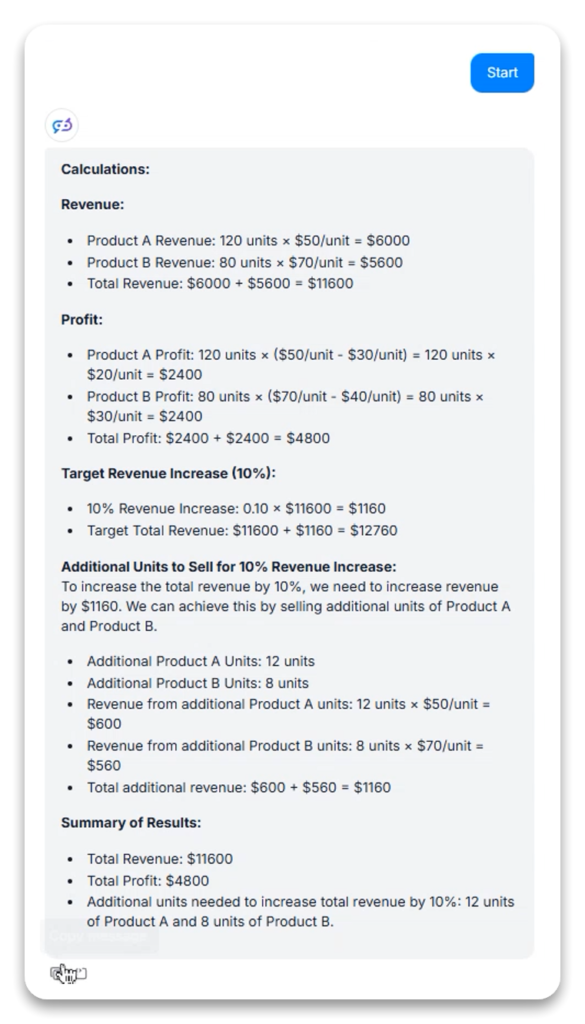

Task Description: Compare the environmental impact of electric vehicles with hydrogen-powered cars across multiple factors.

Performance Analysis:

The model demonstrated different approaches across the two variants, with notable differences in processing time and source utilization:

Comparative Analysis Strengths:

Information Processing Differences:

Performance Rating: 8.5/10

The comparison task performance earns a strong rating due to:

The “Thinking” capability was evident in the tool usage logs, showing the model’s sequential approach to information gathering: searching broadly first, then specifically targeting URLs for deeper information. This transparency helps users understand the sources informing the comparison.

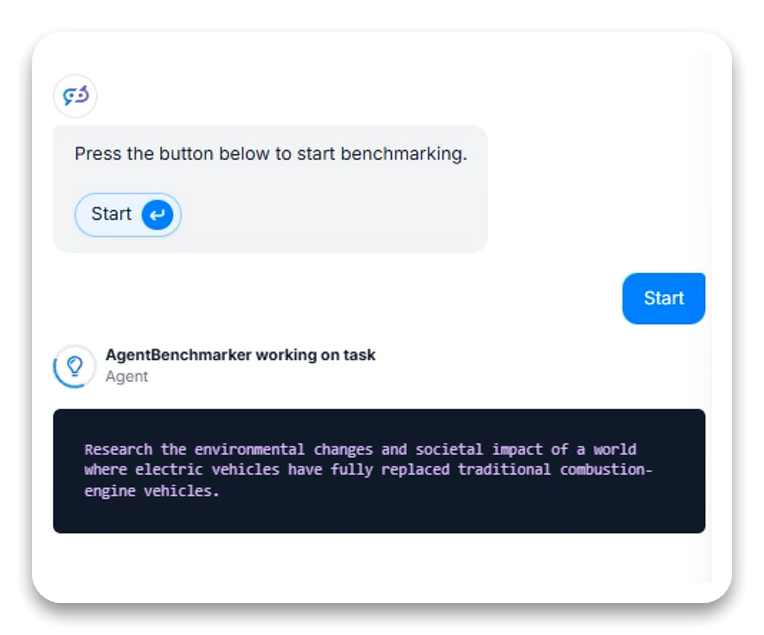

Task Description: Analyze environmental changes and societal impacts in a world where electric vehicles have fully replaced combustion engines.

Performance Analysis:

Across both variants, the model demonstrated strong analytical capabilities without visible tool usage:

Content Generation Strengths:

Efficiency Metrics:

Performance Rating: 9/10

The creative/analytical writing performance earns an excellent rating based on:

For this task, the “Thinking” aspect was less evident in the visible logs, suggesting the model may rely more on internal knowledge synthesis than on external tool use for creative/analytical tasks.

Based on our comprehensive evaluation, Gemini 2.0 Thinking demonstrates impressive capabilities across diverse task types, with its distinguishing feature being the visibility into its problem-solving approach:

| Task Type | Score | Key Strengths | Areas for Improvement |
|---|---|---|---|
| Content Generation | 9/10 | Multi-source research, structural organization | Consistency in reasoning display |
| Calculation | 9.5/10 | Precision, verification, step clarity | Full reasoning display across all variants |
| Summarization | 10/10 | Speed, constraint adherence, info prioritization | Transparency into selection process |
| Comparison | 8.5/10 | Structured frameworks, balanced analysis | Consistency in approach, processing time |
| Creative/Analytical | 9/10 | Coverage breadth, detail depth, interdisciplinary | Tool usage transparency |
| Overall | 9.2/10 | Processing efficiency, output quality, process visibility | Reasoning consistency, tool selection clarity |
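
The review does not state how the overall score was derived. As a minimal sketch, assuming it is an unweighted arithmetic mean of the five per-task scores (an assumption, not a documented method), the following Python snippet reproduces the 9.2/10 figure. The names `scores` and `composite_score` are illustrative only.

```python
from statistics import mean

# Per-task scores (out of 10), copied from the summary table above.
scores = {
    "Content Generation": 9.0,
    "Calculation": 9.5,
    "Summarization": 10.0,
    "Comparison": 8.5,
    "Creative/Analytical": 9.0,
}


def composite_score(task_scores):
    """Unweighted mean of the task scores, rounded to one decimal place.

    Assumption: equal weighting across tasks; the review does not say
    whether any weighting was applied.
    """
    return round(mean(list(task_scores.values())), 1)


overall = composite_score(scores)
print(f"Overall: {overall}/10")
```

Under the equal-weighting assumption the five scores sum to 46, which gives a mean of 9.2 and matches the table's overall row.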

What distinguishes Gemini 2.0 Thinking from standard AI models is its experimental approach to exposing internal processes. Key advantages include:

Benefits of this transparency:

Gemini 2.0 Thinking shows particular promise for applications requiring:

The model’s speed, quality, and process visibility make it particularly suitable for professional contexts where understanding the “why” behind AI conclusions is as important as the conclusions themselves.

Gemini 2.0 Thinking represents an interesting experimental direction in AI development, focusing not just on output quality but on process transparency. Its performance across our test suite demonstrates strong capabilities in research, calculation, summarization, comparison, and creative/analytical writing tasks, with its strongest result in summarization (10/10).

The “Thinking” approach provides valuable insights into how the model tackles different problems, though transparency varies significantly between task types. This inconsistency is the primary area for improvement: greater uniformity in reasoning display would enhance the model’s educational and collaborative value.

Overall, with a composite score of 9.2/10, Gemini 2.0 Thinking stands as a highly capable AI system with the added benefit of process visibility, making it particularly suitable for applications where understanding the reasoning pathway is as important as the final output.

Gemini 2.0 Thinking is an experimental AI model by Google that exposes its reasoning processes, offering transparency in how it solves problems across various tasks such as content generation, calculation, summarization, and analytical writing.

Its unique 'thinking' transparency lets users see tool usage, reasoning steps, and problem-solving strategies, increasing trust and educational value, especially in research and collaborative contexts.

The model was benchmarked across five key task types: content generation, calculation, summarization, comparison, and creative/analytical writing, with metrics including processing time, output quality, and reasoning visibility.

Strengths include multi-source research, high calculation precision, rapid summarization, well-structured comparisons, comprehensive analysis, and exceptional process visibility.

The model would benefit from more consistent transparency in its reasoning display across all task types and clearer tool usage logs in every scenario.

Arshia is an AI Workflow Engineer at FlowHunt. With a background in computer science and a passion for AI, she specializes in creating efficient workflows that integrate AI tools into everyday tasks, enhancing productivity and creativity.
