The Art of Prompt Optimization for Smarter AI Workflows

Master prompt optimization for AI by crafting clear, context-rich prompts to boost output quality, reduce costs, and cut processing time. Explore techniques for smarter AI workflows.

Getting Started with Prompt Optimization

Prompt optimization means refining the input you provide to an AI model so that it delivers the most accurate and efficient responses possible. Clear communication is only part of it: optimized prompts also reduce computational overhead, leading to faster processing times and lower costs. Whether you’re writing queries for customer support chatbots or generating complex reports, how you structure and phrase your prompts matters.

The Difference Between a Good and a Bad Prompt

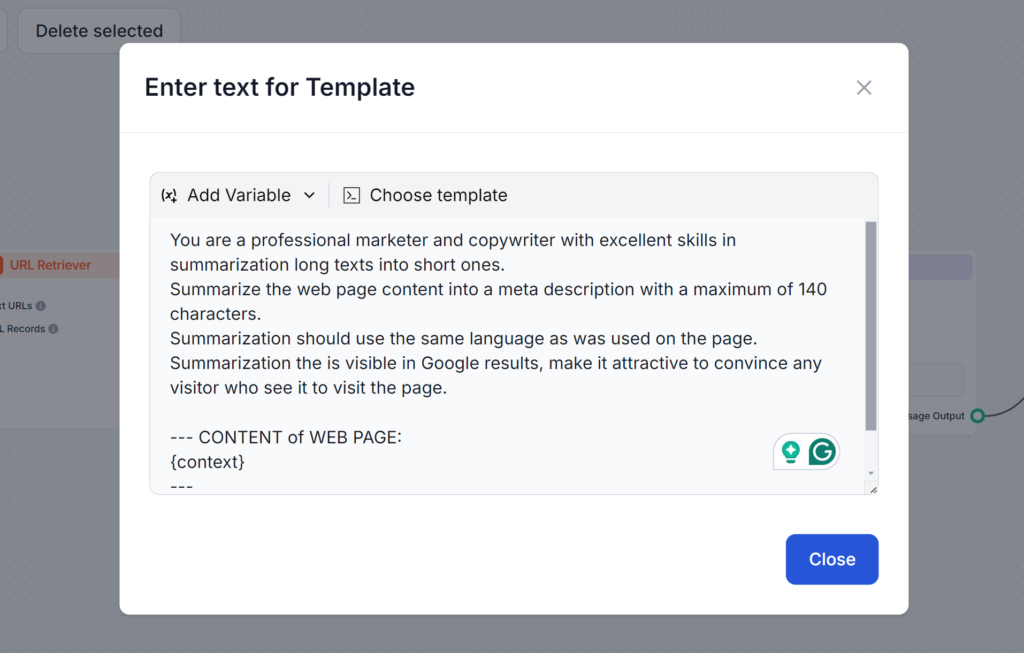

Have you ever tried prompting AI to write a meta description? Chances are, your first prompt went a little like this:

Write me a meta description for the topic of prompt optimization.

This prompt falls short for several reasons. It doesn’t state a length limit, so the output will often run well past the roughly 140 characters that fit in a search result snippet. Even when the length is right, the style is often off, or the text is too descriptive and dull for anyone to click. Lastly, without access to your article, the AI can only produce a vague meta description.

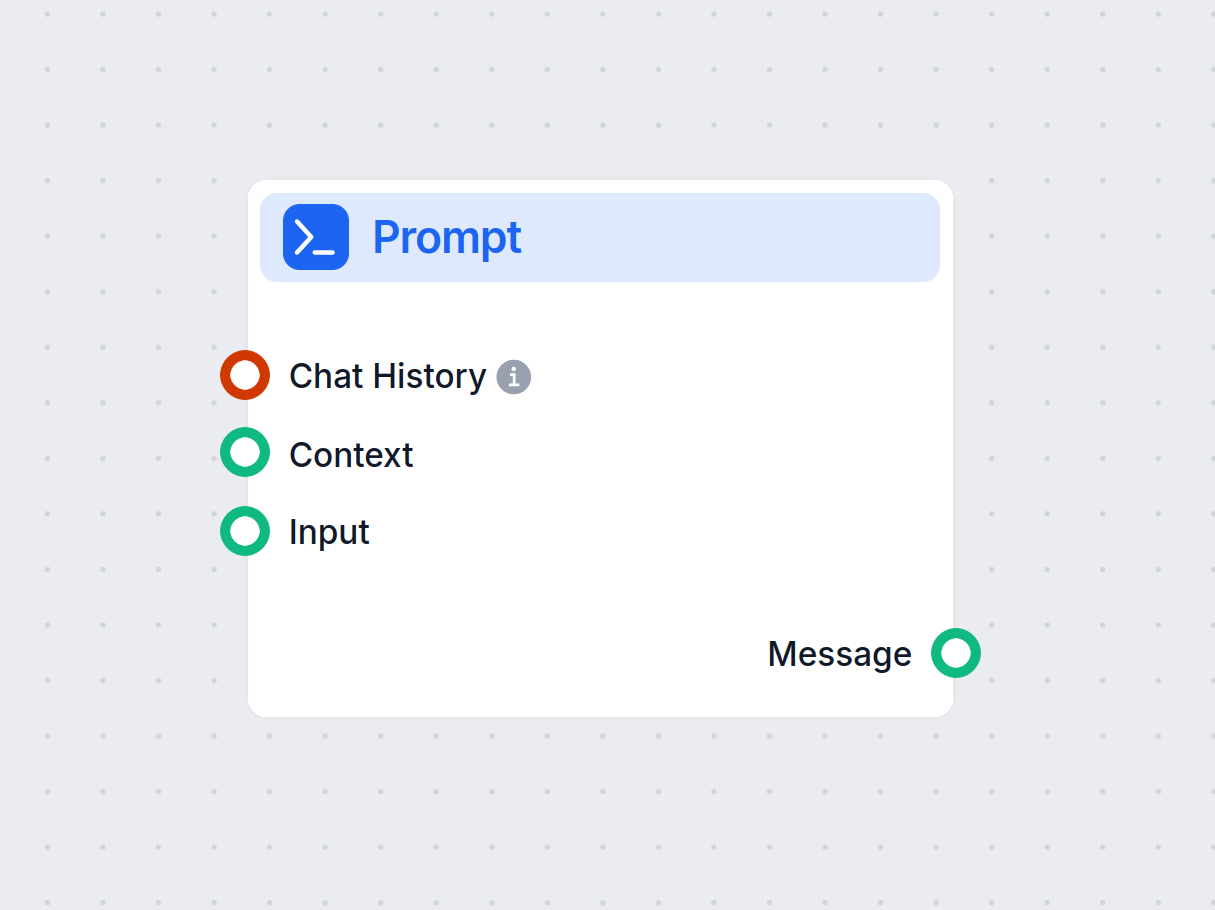

Now compare it with the improved prompt. It’s longer and uses several techniques covered in this post. It addresses each of the issues above, which makes it far more likely that you get the output you need on the first try.

Understanding the Basics

Tokens are the building blocks of text that AI models process. A model breaks text down into tokens before working on it, and a single token may be a whole word, a fragment of a word, or a punctuation mark. More tokens usually mean slower responses and higher computing costs, so understanding how tokens work is essential for writing prompts that are cost-friendly and quick to execute.

Why Tokens Matter:

- Cost: Many AI model APIs, such as OpenAI’s, charge based on the number of tokens processed.

- Speed: Fewer tokens lead to faster responses.

- Clarity: A concise prompt helps the model focus on relevant details.

For instance:

- High-Token Prompt:

Can you please explain in detail every aspect of how machine learning models are trained, including all possible algorithms?

- Low-Token Prompt:

Summarize the process of training machine learning models, highlighting key algorithms.

In the high-token prompt, the AI is tasked to go into detail on all possible options, while the low-token prompt asks for a simple overview. Seeing the overview, you can expand on it based on your needs, arriving at your desired outcome faster and cheaper.
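To get a feel for the difference, here is a minimal sketch that estimates token counts for the two prompts above. It assumes roughly four characters per token, a common rule of thumb for English text rather than a real tokenizer, and the `price_per_1k_tokens` parameter is a hypothetical value you would supply yourself. It also only measures the prompt; the length of the response usually matters just as much.

```python
import math

# Assumption: about 4 characters per token for English text.
# This is a rule of thumb, not a real tokenizer; actual counts vary by model.
CHARS_PER_TOKEN = 4


def estimate_tokens(text: str) -> int:
    """Return a rough token estimate for a piece of English text."""
    return math.ceil(len(text) / CHARS_PER_TOKEN)


def estimate_cost(text: str, price_per_1k_tokens: float) -> float:
    """Estimate input cost from a caller-supplied (hypothetical) price."""
    return estimate_tokens(text) / 1000 * price_per_1k_tokens


high_token_prompt = (
    "Can you please explain in detail every aspect of how machine learning "
    "models are trained, including all possible algorithms?"
)
low_token_prompt = (
    "Summarize the process of training machine learning models, "
    "highlighting key algorithms."
)

for label, prompt in [("High", high_token_prompt), ("Low", low_token_prompt)]:
    print(f"{label}-token prompt: ~{estimate_tokens(prompt)} tokens")
```

For exact numbers, use the tokenizer that belongs to the model you are calling.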

Ways to Craft Effective Prompts

Creating effective prompts requires a blend of clarity, context, and creativity. Trying out different formats is recommended to discover the most effective ways to prompt AI. Here are some essential techniques:

Be Specific and Clear

Ambiguous prompts can confuse the model. A well-structured prompt ensures the AI understands your intent.

Example:

- Ambiguous Prompt:

Write about sports.

- Specific Prompt:

Write a 200-word blog post on the benefits of regular exercise for basketball players.

Provide Context

Including relevant details helps the AI generate responses tailored to your needs.

Example:

- No Context:

Explain photosynthesis.

- With Context:

Explain photosynthesis to a 10-year-old using simple language.

Use Examples

Adding examples guides the AI in understanding the format or tone you want.

Example:

- Without Example:

Generate a product review for a smartphone.

- With Example:

Write a positive review of a smartphone like this: “I’ve been using the [Product Name] for a week, and its camera quality is outstanding…”

Experiment with Templates

Using standardized templates for similar tasks ensures consistency and saves time.

Example Template for Blog Creation:

“Write a [word count] blog post on [topic], focusing on [specific details]. Use a friendly tone and include [keywords].”
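If you reuse a template like this often, it can live in code. The sketch below uses Python’s standard `string.Template`; the function name `build_blog_prompt` and the placeholder names are illustrative choices, not part of any particular tool.

```python
from string import Template

# Mirrors the blog-creation template above; placeholder names are illustrative.
BLOG_TEMPLATE = Template(
    "Write a ${word_count}-word blog post on ${topic}, focusing on ${details}. "
    "Use a friendly tone and include these keywords: ${keywords}."
)


def build_blog_prompt(
    word_count: int, topic: str, details: str, keywords: list[str]
) -> str:
    """Fill in the template; substitute() raises KeyError if a value is missing."""
    return BLOG_TEMPLATE.substitute(
        word_count=word_count,
        topic=topic,
        details=details,
        keywords=", ".join(keywords),
    )


print(
    build_blog_prompt(
        500, "prompt optimization", "token management", ["AI", "prompts"]
    )
)
```

Keeping the wording in one place means every prompt for the same task has the same structure, and a change to the template reaches all of them at once.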

Advanced Techniques for Context Optimization

Several advanced strategies can help you take your prompts to the next level. These techniques go beyond basic clarity and structure, allowing you to handle more complex tasks, integrate dynamic data, and tailor AI responses to specific domains or needs. Here’s a short overview of how each technique works, with practical examples to guide you.

Few-Shot Learning

Few-shot learning means including a small number of examples in your prompt so the AI can pick up the pattern or format you need. It lets the model generalize from minimal data, which makes it a good fit for new or unfamiliar tasks.

Example prompt:

Translate the following phrases to French:

- Good morning → Bonjour

- How are you? → Comment ça va?

Now, translate: What is your name?
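The same prompt can be assembled from a list of example pairs, which makes it easy to swap examples in and out. This is a minimal sketch; `build_translation_prompt` is a hypothetical helper name, and the layout simply follows the example above.

```python
def build_translation_prompt(
    instruction: str, examples: list[tuple[str, str]], query: str
) -> str:
    """Assemble a few-shot prompt: instruction, example pairs, then the new input."""
    lines = [instruction, ""]
    for source, target in examples:
        lines.append(f"- {source} → {target}")
    lines.append("")
    lines.append(f"Now, translate: {query}")
    return "\n".join(lines)


prompt = build_translation_prompt(
    "Translate the following phrases to French:",
    [("Good morning", "Bonjour"), ("How are you?", "Comment ça va?")],
    "What is your name?",
)
print(prompt)
```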

Prompt Chaining

Prompt chaining is the process of breaking down complex tasks into smaller, manageable steps that build upon each other. This method allows the AI to tackle multi-step problems systematically, ensuring clarity and precision in the output.

Example prompt:

- Step 1: Summarize this article in 100 words.

- Step 2: Turn the summary into a tweet.
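In code, chaining means passing the output of one call into the next prompt. The sketch below assumes a `call_model` function that takes a prompt and returns text; that function is a placeholder for whichever model client you use, and `fake_model` exists only so the example runs without one.

```python
from typing import Callable


def run_chain(article: str, call_model: Callable[[str], str]) -> dict[str, str]:
    """Run two prompts in sequence, feeding step 1's output into step 2."""
    summary = call_model(f"Summarize this article in 100 words:\n\n{article}")
    tweet = call_model(f"Turn this summary into a tweet:\n\n{summary}")
    return {"summary": summary, "tweet": tweet}


def fake_model(prompt: str) -> str:
    """Stand-in for a real model call; it only labels the prompt it received."""
    first_line = prompt.splitlines()[0]
    return f"[model output for: {first_line}]"


result = run_chain("Full article text goes here.", fake_model)
print(result["summary"])
print(result["tweet"])
```

Because each step is a separate call, you can inspect or correct the summary before it becomes the input to the tweet.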

Contextual Retrieval

Contextual retrieval integrates relevant, up-to-date information into the prompt by referencing external sources or summarizing key details. This way you give the AI access to accurate and current data for more informed responses.

Example:

“Using data from this report [insert link], summarize the key findings on renewable energy trends.”
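One simple way to do this is to pick the most relevant passages from a source and paste them into the prompt. The sketch below ranks passages by plain keyword overlap, a deliberately naive stand-in for a real search or embedding-based retriever, and the sample passages are invented placeholders.

```python
import re


def words(text: str) -> set[str]:
    """Lowercase the text and return its set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question: str, passages: list[str], top_k: int = 2) -> list[str]:
    """Return the top_k passages sharing the most words with the question."""
    question_words = words(question)
    ranked = sorted(
        passages, key=lambda p: len(question_words & words(p)), reverse=True
    )
    return ranked[:top_k]


def build_prompt(question: str, passages: list[str], top_k: int = 2) -> str:
    """Place the retrieved passages in the prompt ahead of the question."""
    context = "\n".join(f"- {p}" for p in retrieve(question, passages, top_k))
    return (
        "Using only the context below, answer the question.\n\n"
        f"Context:\n{context}\n\nQuestion: {question}"
    )


# Invented placeholder passages standing in for sections of a report.
sample_passages = [
    "Section A discusses solar energy trends.",
    "Section B lists the office cafeteria menu.",
    "Section C covers renewable energy findings on wind power.",
]
question = "What are the key findings on renewable energy trends?"
print(build_prompt(question, sample_passages))
```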

Fine-Tuning with Embeddings

Fine-tuning with embeddings tailors the AI model to specific tasks or domains by using specialized data representations. This customization enhances the relevance and accuracy of responses in niche or industry-specific applications.

Token Management Strategies

Managing token usage allows you to control how quickly and cost-effectively AI handles inputs and outputs. By reducing the number of tokens processed, you can save costs and get faster response times without sacrificing quality. Here are techniques to manage tokens effectively:

- Trim Unnecessary Words: Avoid redundant or verbose language. Keep prompts concise and to the point.

  - Verbose: Could you please, if you don’t mind, provide an overview of…?

  - Concise: Provide an overview of…

- Use Windowing: Process only the most relevant sections of a long input. Dividing the input into manageable parts lets the AI extract insights from each part without going over the entire input every time.

  - Example: Extract key points from a 10,000-word document by dividing it into sections and prompting for a summary of each.

- Batch and Split Inputs: When you have several related prompts, group them so they are processed together.

  - Example: Combine multiple related queries into a single prompt with clear separators.
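Windowing and batching can both be sketched in a few lines. The example below splits on word counts for simplicity, which is an assumption; a production setup would more likely split on tokens or on the document’s own sections. The function names and the `---` separator are illustrative.

```python
def split_into_windows(text: str, max_words: int) -> list[str]:
    """Split text into consecutive chunks of at most max_words words."""
    if max_words <= 0:
        raise ValueError("max_words must be positive")
    all_words = text.split()
    return [
        " ".join(all_words[i:i + max_words])
        for i in range(0, len(all_words), max_words)
    ]


def build_section_prompts(text: str, max_words: int = 500) -> list[str]:
    """Create one summary prompt per window instead of one huge prompt."""
    return [
        f"Summarize the key points of section {n}:\n\n{window}"
        for n, window in enumerate(split_into_windows(text, max_words), start=1)
    ]


def batch_queries(queries: list[str], separator: str = "\n---\n") -> str:
    """Combine related queries into a single prompt with clear separators."""
    numbered = [f"Question {n}: {q}" for n, q in enumerate(queries, start=1)]
    return (
        "Answer each question separately, keeping the numbering.\n\n"
        + separator.join(numbered)
    )


print(split_into_windows("one two three four five", 2))
print(batch_queries(["What is a token?", "Why does token count matter?"]))
```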

How to Keep an Eye on the Performance

Optimization doesn’t stop at writing better prompts. Regularly track performance and iterate based on feedback. This ongoing tracking allows steady refinement, giving you the chance to make informed changes.

Focus on these key areas:

- Response Accuracy: Are outputs meeting expectations?

- Efficiency: Are token usage and processing times within acceptable limits?

- Relevance: Are the responses staying on-topic?
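If your tooling doesn’t report these numbers for you, a small log is enough to start. The sketch below is one possible shape for it: the `PromptRun` fields are assumptions about what you might record, the two yes/no fields would come from a human reviewer or an automated check, and the sample values are made up.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class PromptRun:
    prompt_name: str
    tokens_used: int
    latency_seconds: float
    met_expectations: bool  # response accuracy, judged by a reviewer or a check
    on_topic: bool  # relevance, judged the same way


def summarize_runs(runs: list[PromptRun]) -> dict[str, float]:
    """Aggregate accuracy, efficiency, and relevance over a set of runs."""
    if not runs:
        raise ValueError("no runs to summarize")
    return {
        "runs": len(runs),
        "avg_tokens": mean(r.tokens_used for r in runs),
        "avg_latency_seconds": mean(r.latency_seconds for r in runs),
        "accuracy_rate": sum(r.met_expectations for r in runs) / len(runs),
        "relevance_rate": sum(r.on_topic for r in runs) / len(runs),
    }


# Made-up sample values, for illustration only.
sample_runs = [
    PromptRun("meta-description-v1", 100, 1.0, True, True),
    PromptRun("meta-description-v1", 200, 2.0, False, True),
]
print(summarize_runs(sample_runs))
```

Comparing these summaries between two versions of a prompt shows whether a change actually helped.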

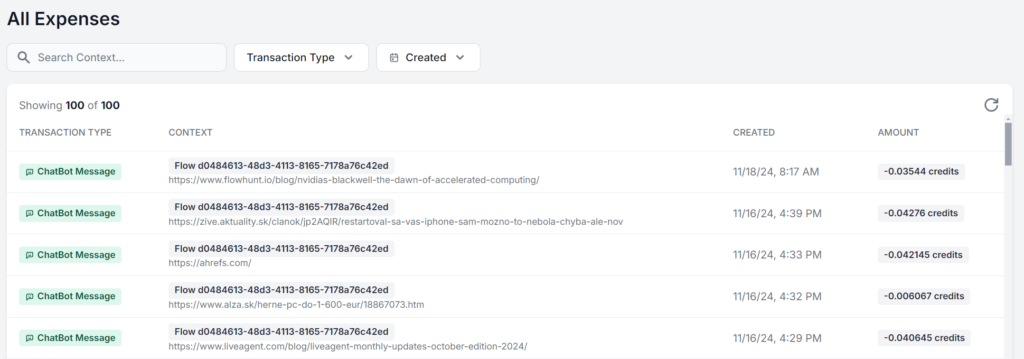

The best approach is to work within an interface that lets you see and analyze your exact usage for each prompt. The screenshot shows the same FlowHunt AI workflow run five times, with only the source material changing. Each run differs by only a few cents, but as charges pile up, the difference quickly becomes noticeable.

Conclusion

Whether you’re trying to get the most out of a free usage limit or building an AI strategy at scale, prompt optimization matters for anyone using AI. These techniques help you use AI efficiently, get accurate outputs, and reduce costs.

As AI technology advances, the importance of clear and optimized communication with models will only grow. Start experimenting with these strategies today for free. FlowHunt lets you build with various AI models and capabilities in a single dashboard, so you can create optimized and efficient AI workflows for any task. Try the 14-day free trial!

Frequently asked questions

- What is prompt optimization in AI?

Prompt optimization involves refining the input you provide to an AI model so it delivers the most accurate and efficient responses. Optimized prompts reduce computational overhead, leading to faster processing times and lower costs.

- Why does token count matter in prompt engineering?

Token count affects both the speed and cost of AI outputs. Fewer tokens result in faster responses and lower costs, while concise prompts help models focus on relevant details.

- What are some advanced prompt optimization techniques?

Advanced techniques include few-shot learning, prompt chaining, contextual retrieval, and fine-tuning with embeddings. These methods help tackle complex tasks, integrate dynamic data, and tailor responses to specific needs.

- How can I measure prompt optimization performance?

Monitor response accuracy, token usage, and processing times. Regular tracking and iteration based on feedback help refine prompts and maintain efficiency.

- How can FlowHunt help with prompt optimization?

FlowHunt provides tools and a dashboard to build, test, and optimize AI prompts, allowing you to experiment with various models and strategies for efficient AI workflows.

Maria is a copywriter at FlowHunt. A language nerd active in literary communities, she's fully aware that AI is transforming the way we write. Rather than resisting, she seeks to help define the perfect balance between AI workflows and the irreplaceable value of human creativity.
