Introduction

The artificial intelligence landscape is experiencing unprecedented acceleration, with breakthrough technologies emerging across multiple domains simultaneously. From wearable AI glasses that augment human perception to reasoning models that surpass human performance in complex problem-solving, the convergence of these innovations is fundamentally reshaping how we interact with technology and automate our workflows. This comprehensive exploration examines the most significant AI developments of 2025, including Meta’s advanced Ray-Ban glasses, OpenAI’s superhuman reasoning capabilities, revolutionary 3D world generation technology, and the emerging infrastructure enabling autonomous agents to collaborate and transact with one another. Understanding these developments is crucial for businesses and individuals seeking to leverage AI’s transformative potential in their operations and strategic planning.

Understanding the Current State of AI Hardware and Wearables

The evolution of artificial intelligence has historically been constrained by the interfaces through which humans interact with intelligent systems. For decades, we relied on keyboards, mice, and screens to communicate with computers, creating a fundamental disconnect between our natural modes of perception and the digital tools we use. The emergence of wearable AI represents a paradigm shift in this relationship, moving computation from stationary devices into form factors that integrate seamlessly with our daily lives. Meta’s investment in Ray-Ban glasses exemplifies this transition, building upon decades of augmented reality research and the company’s extensive experience with the Oculus platform. The significance of this shift cannot be overstated—approximately one-third of the global population wears glasses daily, representing an enormous addressable market for AI-enhanced eyewear. By embedding AI capabilities directly into a device people already wear, Meta is positioning itself at the intersection of personal computing and artificial intelligence, creating a platform where AI can observe, understand, and interact with the world in real-time alongside the wearer.

Why AI-Powered Wearables Matter for the Future of Work and Interaction

The implications of AI-enhanced wearables extend far beyond consumer convenience, touching fundamental aspects of how we work, learn, and communicate. When an AI system can see what you see, hear what you hear, and project information directly into your visual field, it fundamentally changes the nature of human-computer interaction. Instead of breaking focus to check a device, information flows naturally into your field of vision. Instead of typing queries, you can simply speak to your AI assistant while maintaining engagement with your physical environment. For professional applications, this represents a massive productivity enhancement—imagine a technician wearing AI glasses that can identify equipment, retrieve maintenance procedures, and guide repairs in real-time, or a surgeon whose AI assistant provides real-time anatomical information and procedural guidance during operations. The battery improvements in the latest generation of Ray-Ban glasses, with 42% increased capacity enabling up to five hours of continuous use, address one of the primary barriers to adoption. As these devices become more practical and capable, they will likely become as ubiquitous as smartphones, fundamentally altering how we access information and interact with AI systems throughout our daily lives.

One of the most significant developments in artificial intelligence during 2025 is the achievement of superhuman performance in complex reasoning tasks. The International Collegiate Programming Contest (ICPC) World Finals represents the pinnacle of competitive programming, where the world’s top university teams solve extraordinarily difficult algorithmic problems under time pressure. These problems require not just coding knowledge but deep mathematical reasoning, creative problem-solving, and the ability to handle edge cases and complex constraints. OpenAI’s reasoning system solved all 12 problems at the 2025 ICPC World Finals, a result that places it above every human team in the same competition. The methodology was particularly noteworthy: the system received problems in the exact same PDF format as human competitors, had the same five-hour time limit, and made submissions without any specialized test harnesses or competition-specific optimizations. For eleven of the twelve problems, the system’s first submitted answer was correct, which suggests that it verified its solutions before submitting rather than guessing. For the most challenging problem, the system required nine submissions before arriving at the correct solution, still outperforming the best human team, which solved eleven of the twelve problems.

The technical approach underlying this achievement involved an ensemble of reasoning models, including GPT-5 and an experimental reasoning model, working together to generate and evaluate solutions. This represents a fundamental shift in how AI systems approach complex problems: rather than attempting to solve everything in a single forward pass, these systems employ iterative refinement, test-time adaptation, and ensemble methods to progressively improve their solutions. The implications are profound. If AI systems can now outperform the world’s best human programmers at solving novel, complex algorithmic problems, many knowledge-work tasks previously thought to require human expertise may be automatable or augmentable with AI assistance. Industry experts have underscored the achievement, including Scott Wu, CEO of Cognition and a former mathematics competition champion, who emphasized its extraordinary difficulty. Mark Chen, Chief Research Officer at OpenAI, placed the result within a broader trajectory of AI capabilities, noting that the core intelligence of these models is now sufficient and that what remains is building the scaffolding and infrastructure to deploy these capabilities effectively.
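To make the generate-and-evaluate idea concrete, here is a minimal Python sketch. It is not OpenAI's system, whose internals the article does not describe. It only illustrates the general pattern of proposing several candidate solutions and submitting one that passes local checks. The toy task, the `candidates` list, and the function names are all assumptions made for illustration.

```python
from typing import Callable, Optional

# A "solver" maps an input to an output. In a real system these would be
# programs written by different models; here they are toy functions.
Solver = Callable[[int], int]


def passes_samples(solver: Solver, samples: list[tuple[int, int]]) -> bool:
    """Return True if the candidate reproduces every known sample output."""
    try:
        return all(solver(x) == expected for x, expected in samples)
    except Exception:
        # A crashing candidate is treated as a failed candidate.
        return False


def select_submission(candidates: list[Solver],
                      samples: list[tuple[int, int]]) -> Optional[Solver]:
    """Pick the first candidate that survives local verification."""
    for candidate in candidates:
        if passes_samples(candidate, samples):
            return candidate
    return None


# Hypothetical task: compute the sum of the integers 1..n.
samples = [(1, 1), (4, 10), (10, 55)]

candidates = [
    lambda n: n * n,             # wrong: fails the second sample
    lambda n: sum(range(n)),     # wrong: off by one
    lambda n: n * (n + 1) // 2,  # correct closed form
]

chosen = select_submission(candidates, samples)
print(chosen(100) if chosen else "no candidate verified")
```

Filtering candidates against known cases before submitting is one plausible reason a system's first submission would usually be correct, although the article does not say how the real ensemble chose what to submit.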

While reasoning capabilities represent one frontier of AI advancement, the practical deployment of AI systems requires robust infrastructure for managing information and context. Retrieval Augmented Generation (RAG) has emerged as a critical technology for enabling AI systems to access and utilize external knowledge sources, whether company documents, research papers, or proprietary databases. Traditional RAG systems face a fundamental challenge: as the amount of retrievable information grows, the computational cost of searching through and processing that information increases dramatically. Meta Superintelligence Labs addressed this challenge through REFRAG, an optimization reported to speed up RAG by roughly 30x while enabling the system to work with 16x longer contexts without sacrificing accuracy. The innovation works by replacing most retrieved tokens with precomputed and reusable chunk embeddings, changing how retrieved information is stored and fed to the model. Rather than processing raw text every time a query is made, the system leverages precomputed embeddings that capture the semantic meaning of information chunks, allowing for faster retrieval and more efficient use of the model’s context window.

This optimization has immediate practical implications for enterprise AI deployment. Companies can now provide their AI systems with access to vastly larger knowledge bases without proportional increases in computational cost or latency. A customer service AI could have access to millions of pages of documentation and still respond to queries with far lower latency than before. A research assistant could search through entire libraries of academic papers and synthesize findings without the computational overhead that would previously have made such tasks impractical. The 30x speed improvement is particularly significant because it moves RAG from being a specialized technique used in specific applications to a practical default approach for any AI system that needs to access external information. Combined with improvements in context window length, REFRAG enables AI systems to maintain coherent understanding across much longer documents and more complex information hierarchies, which is essential for applications like legal document analysis, scientific research synthesis, and comprehensive business intelligence.
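The core idea of computing chunk embeddings once and reusing them for every query can be sketched in a few lines of Python. This is a simplified illustration and not Meta's implementation. The bag-of-words `embed` function stands in for a real encoder, the `ChunkIndex` class is hypothetical, and the sketch covers only the reuse of cached embeddings at retrieval time, not the model-side handling of those embeddings.

```python
import math
from collections import Counter


def embed(text: str) -> dict[str, float]:
    """Toy unit-length bag-of-words embedding, standing in for a real encoder."""
    counts = Counter(text.lower().split())
    norm = math.sqrt(sum(c * c for c in counts.values())) or 1.0
    return {word: c / norm for word, c in counts.items()}


def dot(a: dict[str, float], b: dict[str, float]) -> float:
    """Dot product of two sparse vectors (cosine similarity for unit vectors)."""
    return sum(weight * b.get(word, 0.0) for word, weight in a.items())


class ChunkIndex:
    """Embeds each chunk once at indexing time and reuses it for every query."""

    def __init__(self, chunks: list[str]) -> None:
        self.chunks = chunks
        self.embeddings = [embed(c) for c in chunks]  # precomputed and cached

    def search(self, query: str, top_k: int = 1) -> list[str]:
        q = embed(query)  # only the query is embedded at request time
        scores = [dot(q, e) for e in self.embeddings]
        ranked = sorted(range(len(self.chunks)),
                        key=lambda i: scores[i], reverse=True)
        return [self.chunks[i] for i in ranked[:top_k]]


index = ChunkIndex([
    "reset the router by holding the power button",
    "invoices are emailed on the first day of each month",
    "the warranty covers battery replacement for two years",
])
print(index.search("how do I reset my router"))
```

Because the chunk embeddings are built in the constructor, the per-query cost is one embedding of the query plus a similarity scan, regardless of how long each stored chunk is.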

FlowHunt and the Orchestration of AI Workflows

The convergence of advanced AI capabilities—reasoning models, information retrieval systems, and autonomous agents—creates both opportunities and challenges for organizations seeking to leverage these technologies. The real value emerges not from individual AI capabilities in isolation, but from their orchestration into coherent workflows that solve real business problems. FlowHunt addresses this need by providing a platform for building and managing complex AI automation flows that connect multiple tools, data sources, and AI models into unified processes. Consider a practical example: converting news stories into formatted social media content. This seemingly simple task actually requires orchestrating multiple AI capabilities and external tools. The workflow begins by capturing a news URL and creating records in project management systems, automatically triggering parallel processing paths for different social media platforms. For each platform, the workflow uses AI to generate platform-specific headlines, retrieves additional assets and information through web scraping, generates custom header images with text overlays, and finally publishes the formatted content to scheduling platforms. Each step in this workflow involves different tools and AI models working in concert, with the output of one step feeding into the next.

This type of orchestration is becoming increasingly essential as AI capabilities proliferate. Rather than building custom integrations for each combination of tools and models, platforms like FlowHunt provide the infrastructure for rapid workflow development and deployment. The platform’s integration with over 8,000 tools means that virtually any business process can be automated by combining existing tools with AI capabilities. This democratizes AI automation, enabling organizations without specialized AI engineering teams to build sophisticated automated workflows. As AI agents become more capable and autonomous, the ability to orchestrate their activities, manage their interactions with external systems, and ensure their outputs meet business requirements becomes increasingly critical. FlowHunt’s approach of providing visual workflow builders combined with AI orchestration capabilities positions it as a key infrastructure layer in the emerging AI-driven economy.
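The news-to-social workflow described above has a simple shape: one input fans out into parallel per-platform branches, and each branch chains several steps. The Python sketch below illustrates that shape only. It does not use FlowHunt's API, and the platform limits, `generate_headline`, and `render_image` are placeholder assumptions standing in for real AI and tool calls.

```python
from concurrent.futures import ThreadPoolExecutor
from dataclasses import dataclass


@dataclass
class Post:
    platform: str
    headline: str
    image: str


# Hypothetical per-platform headline length limits.
PLATFORM_LIMITS = {"x": 60, "linkedin": 120}


def generate_headline(title: str, platform: str) -> str:
    """Placeholder for an AI headline call: truncates to the platform limit."""
    return title[: PLATFORM_LIMITS[platform]]


def render_image(headline: str) -> str:
    """Placeholder for image generation: returns a made-up file name."""
    return f"header-{len(headline)}.png"


def build_post(title: str, platform: str) -> Post:
    """One branch of the workflow: headline first, then an image based on it."""
    headline = generate_headline(title, platform)
    return Post(platform, headline, render_image(headline))


def run_workflow(title: str) -> list[Post]:
    """Fan out to every platform in parallel and collect results in order."""
    with ThreadPoolExecutor() as pool:
        return list(pool.map(lambda p: build_post(title, p), PLATFORM_LIMITS))


for post in run_workflow("Waymo cleared to pilot autonomous rides at SFO"):
    print(post)
```

Within a branch the steps run in sequence because each consumes the previous step's output, while the branches themselves are independent and can run concurrently.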

The Emergence of Autonomous Agent Infrastructure

Beyond individual AI capabilities, 2025 is witnessing the emergence of infrastructure specifically designed to enable autonomous agents to interact with each other and with external systems. Google’s announcement of the Agent Payments Protocol (AP2) represents a significant milestone in this evolution. Building on the earlier Agent2Agent (A2A) protocol that enabled agents to communicate with each other, AP2 extends this capability to include financial transactions. The protocol provides a common language for secure and compliant transactions between agents and merchants, enabling a new class of autonomous economic activity. Imagine an AI agent managing your business operations that can autonomously purchase services, negotiate contracts, and manage vendor relationships without human intervention. Or consider supply chain optimization where multiple AI agents representing different companies can transact with each other to optimize inventory, pricing, and delivery schedules in real-time.

The protocol has already attracted major technology and business partners including Adobe, Accenture, 1Password, Intuit, Red Hat, Salesforce, and Okta. This level of industry support suggests that agent-to-agent transactions are not a speculative future capability but an emerging reality that enterprises are preparing to integrate into their operations. The implications extend beyond simple transactions: AP2 enables the formation of agent networks where autonomous systems can collaborate, compete, and coordinate to achieve complex objectives. A manufacturing AI agent could automatically source raw materials from supplier agents, coordinate with logistics agents for delivery, and manage payments through the protocol, all without human intervention. This represents a fundamental shift in how business processes are organized, moving from human-directed workflows to agent-coordinated ecosystems where AI systems operate with increasing autonomy within defined parameters.
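Operating "within defined parameters" implies that an agent's spending authority must be both limited and verifiable. The Python sketch below shows one generic way to do that with a signed authorization record. It is not the AP2 message format, which this article does not describe. The field names, the shared secret, and the `authorize` function are all assumptions for illustration.

```python
import hashlib
import hmac
import json

# Hypothetical shared key; a real deployment would use managed keys.
SECRET = b"demo-shared-secret"


def sign_mandate(mandate: dict, key: bytes = SECRET) -> str:
    """Sign a canonical JSON encoding of the mandate with HMAC-SHA256."""
    payload = json.dumps(mandate, sort_keys=True).encode()
    return hmac.new(key, payload, hashlib.sha256).hexdigest()


def authorize(purchase: dict, mandate: dict, signature: str,
              key: bytes = SECRET) -> bool:
    """Approve a purchase only if the mandate is authentic and covers it."""
    if not hmac.compare_digest(sign_mandate(mandate, key), signature):
        return False  # mandate was altered or signed with a different key
    if purchase["merchant"] not in mandate["allowed_merchants"]:
        return False  # merchant is outside the delegated scope
    return purchase["amount_cents"] <= mandate["max_amount_cents"]


mandate = {
    "agent": "procurement-bot",
    "allowed_merchants": ["steel-supplier"],
    "max_amount_cents": 50_000,
}
signature = sign_mandate(mandate)

order = {"merchant": "steel-supplier", "amount_cents": 42_000}
print(authorize(order, mandate, signature))
```

The merchant side can check the signature and the limits without contacting the human who delegated the authority, which is the property that makes unattended transactions workable in this toy model.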

The achievement of superhuman performance in competitive programming is part of a broader pattern of AI systems reaching or exceeding human-level performance in increasingly complex domains. The ICPC achievement builds on previous milestones including sixth place in the International Olympiad in Informatics (IOI), a gold medal at the International Mathematical Olympiad (IMO), and second place in the AtCoder Heuristic Contest. This progression demonstrates that AI reasoning capabilities are not limited to narrow domains but are generalizing across different types of complex problem-solving. The implications for knowledge work are significant—if AI systems can solve novel programming problems that require deep algorithmic understanding, they can likely assist with or automate many other knowledge-work tasks that involve similar reasoning patterns.

However, it’s important to contextualize these achievements within the broader landscape of AI development. As Mark Chen noted, the core intelligence of these models is now sufficient for many tasks—what remains is building the scaffolding and infrastructure to deploy these capabilities effectively. This scaffolding includes not just technical infrastructure like RAG systems and agent protocols, but also organizational processes, safety measures, and integration frameworks that allow AI capabilities to be deployed responsibly and effectively within existing business and social structures. The next phase of AI development will likely focus less on raw capability improvements and more on practical deployment, integration, and orchestration of existing capabilities.

Spatial Intelligence and 3D World Generation

While reasoning and agent infrastructure represent one frontier of AI development, spatial intelligence represents another. World Labs, founded by Fei-Fei Li, is pioneering the development of Large World Models (LWMs) that can generate and understand three-dimensional environments. The technology demonstrated by World Labs takes a single image and generates an entire interactive 3D world that users can explore and navigate. This represents a fundamental advance in how AI systems understand and represent spatial information. Rather than treating images as static 2D data, these systems construct coherent 3D models that maintain consistency as the viewer moves through the space. The generated worlds include detailed environmental features, proper lighting and shadows, and realistic physics, creating immersive experiences that feel natural and coherent.

The applications of this technology extend far beyond entertainment and visualization. In architecture and urban planning, designers could generate complete 3D environments from concept sketches, allowing stakeholders to explore and evaluate designs before construction begins. In education, students could explore historical sites, scientific environments, or complex systems in immersive 3D space. In training and simulation, organizations could generate realistic environments for training scenarios without the cost and complexity of building physical facilities. The technology also has implications for robotics and autonomous systems—if AI can generate coherent 3D models of environments, it can better understand spatial relationships and plan movements through complex spaces. As this technology matures and becomes more accessible, it will likely become a standard tool for visualization, design, and simulation across numerous industries.

Open-Source AI Agents and Competitive Benchmarking

The competitive landscape for AI capabilities is intensifying, with multiple organizations developing advanced reasoning and agent systems. Alibaba’s Tongyi DeepResearch represents a significant open-source contribution to this landscape, achieving state-of-the-art performance on multiple benchmarks with only 30 billion parameters, of which only 3 billion are activated during inference. This efficiency is remarkable: the system achieves performance comparable to much larger proprietary models while using a fraction of the computational resources. The system scores 32.9 on Humanity’s Last Exam, 43.4 on BrowseComp, and 75 on the xbench-DeepSearch benchmark, demonstrating strong performance across diverse reasoning and research tasks.
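Activating 3 billion of 30 billion parameters means only about a tenth of the model does work for any given token. One common way to get this behavior is sparse expert routing, where a gate selects a few sub-networks per token. The Python sketch below shows the arithmetic and a toy top-k gate. It assumes a mixture-of-experts style design, which the article does not spell out, and the gate scores are made-up numbers.

```python
import math


def active_fraction(total_params: int, active_params: int) -> float:
    """Share of parameters that participate in a single forward pass."""
    return active_params / total_params


def top_k_route(gate_scores: list[float], k: int) -> dict[int, float]:
    """Keep the k highest-scoring experts and softmax-normalize their weights."""
    top = sorted(range(len(gate_scores)),
                 key=lambda i: gate_scores[i], reverse=True)[:k]
    exps = {i: math.exp(gate_scores[i]) for i in top}
    total = sum(exps.values())
    return {i: e / total for i, e in exps.items()}


# Figures from the article: 30 billion total, 3 billion active.
print(active_fraction(30_000_000_000, 3_000_000_000))

# Hypothetical gate scores for four experts; only two are used for this token.
print(top_k_route([0.1, 2.0, -1.0, 1.5], k=2))
```

Experts that are not selected are skipped entirely, so compute per token scales with the active parameters rather than the total.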

The open-source nature of Tongyi DeepResearch is particularly significant because it democratizes access to advanced AI capabilities. Rather than being limited to organizations with the resources to train massive proprietary models, researchers and developers can now work with state-of-the-art reasoning systems. The technical approach underlying Tongyi DeepResearch involves a novel automated multi-stage data strategy designed to create vast quantities of high-quality agentic training data without relying on costly human annotation. This addresses one of the fundamental challenges in AI development—the need for large quantities of high-quality training data. By automating the data generation process, Tongyi DeepResearch demonstrates that it’s possible to achieve state-of-the-art performance without the massive human annotation efforts that have traditionally been required.

AI Infrastructure Investment and Scaling

The rapid advancement of AI capabilities is driving massive investment in AI infrastructure, particularly in data center capacity and specialized hardware. Groq, an AI chip maker, secured $750 million in new funding at a post-money valuation of $6.9 billion, with plans to expand data center capacity including new locations in Asia-Pacific. This funding round, led by Disruptive and including major investors like BlackRock and Neuberger Berman, reflects the intense competition for inference capacity and the recognition that AI infrastructure will be a critical bottleneck in the coming years. The overwhelming demand for inference capacity (the computational resources needed to run trained AI models) is driving companies like Nvidia, Groq, and Cerebras to expand production as rapidly as possible.

This infrastructure buildout is essential for realizing the potential of advanced AI capabilities. Reasoning models, large language models, and autonomous agents all require significant computational resources to operate. As these systems become more widely deployed, the demand for inference capacity will only increase. The investment in infrastructure is not speculative—it reflects the reality that organizations are already deploying AI systems at scale and need reliable, scalable infrastructure to support these deployments. The geographic expansion into Asia-Pacific reflects the global nature of AI deployment and the recognition that computational resources need to be distributed globally to serve users with acceptable latency and compliance with local data residency requirements.

Emerging Trends in AI Model Development

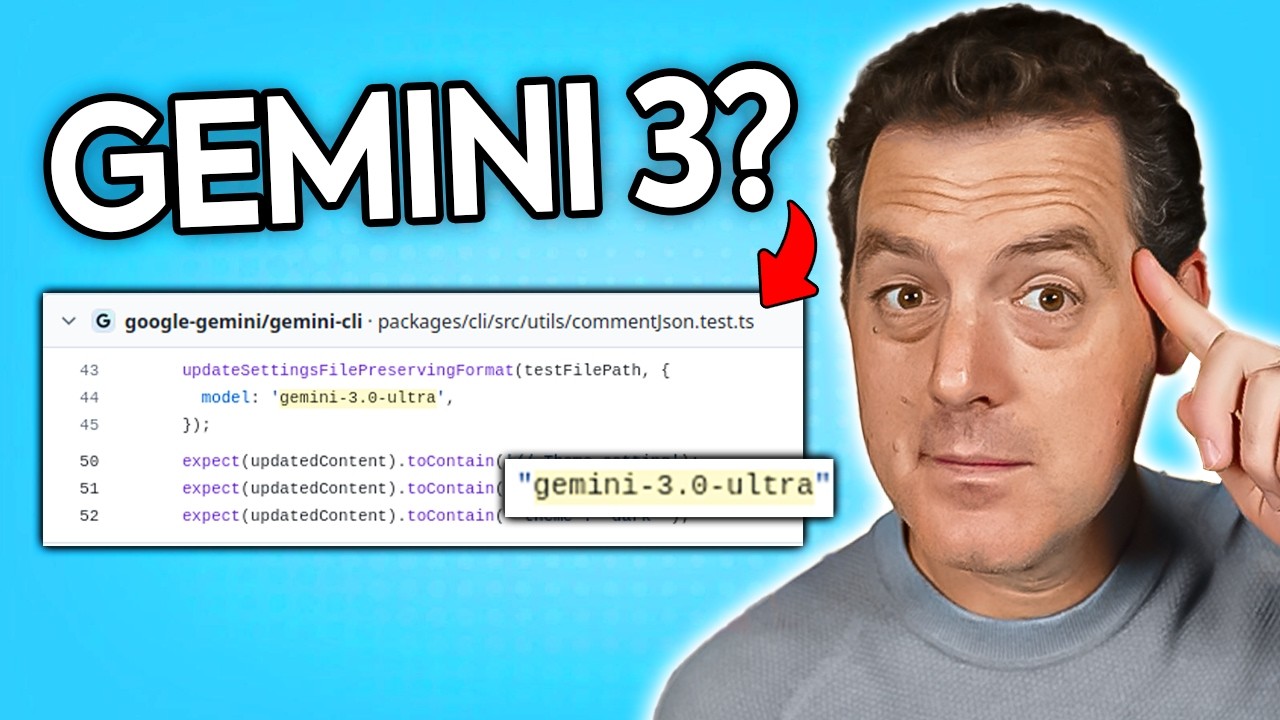

The competitive landscape for AI models is evolving rapidly, with multiple organizations pursuing different approaches to advancing AI capabilities. The rumored development of Gemini 3.0 Ultra, spotted in Google’s Gemini CLI repository, suggests that Google is preparing to release a new generation of its flagship reasoning model. The discovery of references to Gemini 3.0 Ultra in code committed just days before the news broke indicates that major model releases are often preceded by infrastructure changes and preparation work. The pattern of model versioning and release cycles suggests that we can expect regular updates and improvements to major AI systems, with each generation bringing incremental or sometimes significant improvements in capabilities.

Elon Musk’s announcement that Grok 5 training would begin in a few weeks indicates that xAI is also advancing its reasoning capabilities. The question of what constitutes a major version increment in AI models—whether it represents a new training run, significant architectural changes, or capability thresholds—remains somewhat ambiguous, but the pattern is clear: multiple organizations are investing heavily in developing advanced reasoning models, and we can expect regular releases of new versions with improved capabilities. This competitive dynamic is beneficial for the broader AI ecosystem, as competition drives innovation and ensures that no single organization has a monopoly on advanced AI capabilities.

Autonomous Vehicles and Real-World AI Deployment

While much of the discussion around AI focuses on reasoning models and agent infrastructure, real-world deployment of AI systems is advancing rapidly in domains like autonomous vehicles. The approval of Waymo’s pilot permit to operate autonomous rides at San Francisco International Airport represents a significant milestone in the commercialization of autonomous vehicle technology. The phased rollout approach, starting with airport operations and gradually expanding to broader service areas, reflects the careful approach required for deploying safety-critical AI systems in real-world environments. The competition from other autonomous vehicle companies like Zoox (owned by Amazon) demonstrates that multiple organizations are making significant progress in this domain.

The deployment of autonomous vehicles at major transportation hubs like SFO is significant because it represents a transition from controlled testing environments to real-world operations serving actual customers. The airport environment, while still relatively controlled compared to general urban driving, presents real challenges including weather variability, complex traffic patterns, and the need to interact with human drivers and pedestrians. Successful deployment in this environment demonstrates that autonomous vehicle technology has matured to the point where it can operate reliably in real-world conditions. As these systems accumulate more operational experience and data, they will likely improve further, eventually enabling broader deployment across more complex driving environments.

The Integration of AI Capabilities into Business Processes

The convergence of advanced AI capabilities, agent infrastructure, and automation platforms is enabling organizations to integrate AI into their core business processes in ways that were previously impossible. The practical example of converting news stories into social media content, while seemingly simple, illustrates the complexity of real-world AI automation. The workflow requires coordinating multiple AI models (for headline generation and image creation), external tools (for web scraping and content scheduling), and business logic (for platform-specific formatting and timing). Successfully implementing such workflows requires not just individual AI capabilities but also orchestration platforms that can manage the interactions between different components.

FlowHunt’s approach of providing visual workflow builders combined with AI orchestration capabilities addresses this need. By abstracting away the technical complexity of integrating different tools and models, these platforms enable business users to build sophisticated automated workflows without requiring specialized AI engineering expertise. As AI capabilities become more powerful and more widely available, the ability to orchestrate these capabilities into coherent business processes becomes increasingly valuable. Organizations that can effectively integrate AI into their workflows will gain significant competitive advantages through improved efficiency, reduced costs, and faster time-to-market for new products and services.

The Future of AI-Powered Automation

The developments discussed throughout this article point toward a future where AI systems are deeply integrated into business processes and daily life. Rather than AI being a specialized tool used for specific tasks, it becomes the default approach for automating and optimizing workflows. Autonomous agents coordinate with each other to manage complex business processes. AI systems with advanced reasoning capabilities solve novel problems and make strategic decisions. Wearable AI provides real-time information and assistance throughout the day. Spatial intelligence enables new forms of visualization and simulation. This future is not speculative—the technologies enabling it are already being deployed and refined.

The role of platforms like FlowHunt becomes increasingly important in this context. As AI capabilities proliferate and become more powerful, the ability to orchestrate these capabilities into coherent workflows becomes a critical competitive advantage. Organizations that can effectively integrate AI into their operations will be better positioned to compete in an increasingly AI-driven economy. The infrastructure investments being made by companies like Groq, the open-source contributions from organizations like Alibaba, and the commercial platforms being developed by companies like FlowHunt all contribute to making advanced AI capabilities more accessible and practical for real-world deployment.

Conclusion

The AI landscape in 2025 is characterized by rapid advancement across multiple dimensions—from wearable hardware that augments human perception to reasoning models that exceed human performance in complex problem-solving, from infrastructure enabling autonomous agent transactions to spatial intelligence systems that generate immersive 3D environments. These developments are not isolated achievements but interconnected advances that collectively represent a fundamental shift in how AI is deployed and integrated into business and society. The convergence of these capabilities, enabled by orchestration platforms and supported by massive infrastructure investments, is creating the foundation for an AI-driven future where autonomous systems handle increasingly complex tasks with minimal human intervention. Organizations and individuals who understand these developments and position themselves to leverage these capabilities will be best prepared to thrive in the emerging AI-driven economy.