Top-k Accuracy

Top-k accuracy is a machine learning evaluation metric that assesses if the true class is among the top k predicted classes, offering a comprehensive and forgiv...

AI model accuracy measures correct predictions, while stability ensures consistent performance across datasets—both are vital for robust, reliable AI solutions.

AI model accuracy is a critical metric in the field of machine learning, representing the proportion of correct predictions made by a model out of the total predictions. This metric is especially pivotal in classification tasks, where the goal is to categorize instances correctly. The formal calculation of accuracy is expressed as:

Accuracy = (Number of Correct Predictions) / (Total Number of Predictions)

This ratio provides a straightforward measure of a model’s effectiveness in predicting the correct outcomes, but it should be noted that accuracy alone may not always provide a complete picture, especially in cases of imbalanced datasets.
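
The formula above translates directly into code. The sketch below is plain Python; the function name `accuracy` and the sample labels are illustrative and not part of any particular library.

```python
from typing import Sequence


def accuracy(y_true: Sequence[int], y_pred: Sequence[int]) -> float:
    """Accuracy = number of correct predictions / total number of predictions."""
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")
    if len(y_true) == 0:
        raise ValueError("accuracy is undefined for an empty set of predictions")
    correct = sum(1 for t, p in zip(y_true, y_pred) if t == p)
    return correct / len(y_true)


if __name__ == "__main__":
    labels = [0, 1, 1, 0, 1]       # hypothetical ground truth
    predictions = [0, 1, 0, 0, 1]  # hypothetical model output
    print(accuracy(labels, predictions))  # 4 of 5 correct -> 0.8
```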

In machine learning, accuracy serves as a fundamental indicator of a model's performance. High accuracy suggests that a model is performing its task well, for example correctly classifying transactions in a credit card fraud detection system (although fraud data is typically highly imbalanced, so accuracy there should be read alongside other metrics). Accuracy matters most in high-stakes applications, where decision-making relies heavily on the model's predictions.

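While accuracy is a valuable metric, it can be misleading on imbalanced datasets, where one class significantly outnumbers the others. For example, if 99% of transactions are legitimate, a model that labels every transaction as legitimate reaches 99% accuracy while catching no fraud at all. In such cases, metrics like precision, recall, the F1-score, or the area under the ROC curve (ROC AUC) give a more informative picture of the model's true performance.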
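
The imbalanced-data pitfall can be made concrete with a small synthetic example: 990 negatives, 10 positives, and a hypothetical model that always predicts the negative class. The helper names are assumptions for illustration, and precision is reported as 0.0 when the model makes no positive predictions, which is one common convention.

```python
from typing import Sequence, Tuple


def accuracy(y_true: Sequence[int], y_pred: Sequence[int]) -> float:
    # Fraction of predictions that match the true labels
    return sum(1 for t, p in zip(y_true, y_pred) if t == p) / len(y_true)


def precision_recall_f1(
    y_true: Sequence[int], y_pred: Sequence[int], positive: int = 1
) -> Tuple[float, float, float]:
    # Count true positives, false positives and false negatives for the positive class
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == positive and p == positive)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t != positive and p == positive)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == positive and p != positive)
    # Convention (assumption): a ratio with a zero denominator is reported as 0.0
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


if __name__ == "__main__":
    # Synthetic, imbalanced data: 990 legitimate (0) and 10 fraudulent (1) cases
    y_true = [0] * 990 + [1] * 10
    # A hypothetical model that always predicts "legitimate"
    y_pred = [0] * 1000
    print(accuracy(y_true, y_pred))             # 0.99
    print(precision_recall_f1(y_true, y_pred))  # (0.0, 0.0, 0.0)
```

High accuracy and zero recall side by side show why accuracy alone can hide a model that never detects the minority class.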

AI model stability refers to the consistency of a model’s performance over time and across various datasets or environments. A stable model delivers similar results despite minor variations in input data or changes in the computational environment, ensuring reliability and robustness in predictions.
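
One way to probe the "minor variations in input data" part of this definition is to add small random noise to each input and count how often the prediction stays the same. This is a minimal sketch: `toy_model` is a hypothetical classifier and Gaussian noise is an assumed perturbation; a real stability check would use perturbations that are meaningful for the actual data.

```python
import random
from typing import Callable, List, Sequence


def prediction_agreement(
    predict: Callable[[Sequence[float]], int],
    inputs: List[List[float]],
    noise_scale: float = 0.01,
    trials: int = 10,
    seed: int = 0,
) -> float:
    """Fraction of perturbed copies whose prediction matches the original prediction."""
    rng = random.Random(seed)
    agree = 0
    total = 0
    for x in inputs:
        base = predict(x)
        for _ in range(trials):
            # Add independent Gaussian noise to every feature
            noisy = [v + rng.gauss(0.0, noise_scale) for v in x]
            agree += int(predict(noisy) == base)
            total += 1
    return agree / total if total else 1.0


def toy_model(x: Sequence[float]) -> int:
    # Hypothetical classifier: positive when the feature sum exceeds 1.0
    return int(sum(x) > 1.0)


if __name__ == "__main__":
    far_from_boundary = [[0.2, 0.3], [0.9, 0.8]]
    near_boundary = [[0.5, 0.5]]
    print(prediction_agreement(toy_model, far_from_boundary))
    print(prediction_agreement(toy_model, near_boundary, noise_scale=0.1, trials=200))
```

Inputs far from the decision boundary keep their predictions under small noise, while inputs near it flip often, which is where instability tends to show up.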

Stability is crucial for models deployed in production environments, where they encounter data distributions that may differ from the training dataset. A stable model keeps its performance and predictions consistent over time, even as the incoming data shifts moderately away from what it was trained on.
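
A simple way to check consistency across datasets or time periods is to compute accuracy separately on each evaluation set and summarize the spread. The sketch below assumes hypothetical set names (`validation`, `week_1`, `week_2`) and toy labels; the report keys are illustrative choices.

```python
from statistics import mean, pstdev
from typing import Dict, Sequence, Tuple


def accuracy(y_true: Sequence[int], y_pred: Sequence[int]) -> float:
    # Fraction of predictions that match the true labels
    return sum(1 for t, p in zip(y_true, y_pred) if t == p) / len(y_true)


def stability_report(
    results: Dict[str, Tuple[Sequence[int], Sequence[int]]], reference: str
) -> Dict[str, object]:
    """Summarize how accuracy varies across evaluation sets.

    results maps a set name to (y_true, y_pred); reference names the baseline set.
    """
    scores = {name: accuracy(t, p) for name, (t, p) in results.items()}
    values = list(scores.values())
    baseline = scores[reference]
    return {
        "per_set": scores,
        "mean": mean(values),
        "std": pstdev(values),  # population standard deviation of the accuracies
        "max_drop": max(baseline - s for s in values),  # worst drop vs. the baseline
    }


if __name__ == "__main__":
    report = stability_report(
        {
            "validation": ([0, 1, 1, 0], [0, 1, 1, 0]),  # accuracy 1.0
            "week_1": ([0, 1, 1, 0], [0, 1, 0, 0]),      # accuracy 0.75
            "week_2": ([1, 1, 0, 0], [1, 0, 0, 1]),      # accuracy 0.5
        },
        reference="validation",
    )
    print(report["per_set"], report["mean"], report["max_drop"])
```

A large standard deviation or a large drop from the baseline set signals that performance is not holding up outside the data the model was validated on.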

Maintaining stability can be challenging in rapidly changing environments. Achieving a balance between flexibility and consistency often requires sophisticated strategies, such as transfer learning or online learning, to adapt to new data without compromising performance.
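
Online learning updates a model one example at a time instead of retraining from scratch. The class below is a minimal sketch of online logistic regression trained with stochastic gradient descent. The `partial_fit` name echoes scikit-learn's incremental-learning convention, but this is a hand-written illustration rather than that library's API, and the simulated data stream is hypothetical. Transfer learning is not shown.

```python
import math
import random
from typing import List, Sequence


class OnlineLogisticRegression:
    """Logistic regression updated one example at a time with plain SGD."""

    def __init__(self, n_features: int, learning_rate: float = 0.1) -> None:
        self.weights: List[float] = [0.0] * n_features
        self.bias = 0.0
        self.learning_rate = learning_rate

    def predict_proba(self, x: Sequence[float]) -> float:
        z = self.bias + sum(w * v for w, v in zip(self.weights, x))
        # Numerically stable sigmoid
        if z >= 0:
            return 1.0 / (1.0 + math.exp(-z))
        ez = math.exp(z)
        return ez / (1.0 + ez)

    def predict(self, x: Sequence[float]) -> int:
        return int(self.predict_proba(x) >= 0.5)

    def partial_fit(self, x: Sequence[float], y: int) -> None:
        # The gradient of the log-loss with respect to z is (p - y)
        error = self.predict_proba(x) - y
        self.weights = [
            w - self.learning_rate * error * v for w, v in zip(self.weights, x)
        ]
        self.bias -= self.learning_rate * error


if __name__ == "__main__":
    rng = random.Random(0)
    model = OnlineLogisticRegression(n_features=2)
    # Simulated stream (hypothetical): label is 1 when the first feature exceeds the second
    for _ in range(2000):
        x = [rng.random(), rng.random()]
        model.partial_fit(x, int(x[0] > x[1]))
    print(model.predict([1.0, 0.0]), model.predict([0.0, 1.0]))
```

A small learning rate makes each update gentle, which trades adaptation speed for steadier predictions; that knob is one concrete form of the flexibility-versus-consistency balance described above.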

In AI automation and chatbots, both accuracy and stability are crucial. A chatbot must accurately interpret user queries (accuracy) and consistently deliver reliable responses across various contexts and users (stability). In customer service applications, an unstable chatbot could lead to inconsistent responses and user dissatisfaction.

AI model leaderboards are platforms or tools designed to rank machine learning models based on their performance across a variety of metrics and tasks. These leaderboards provide standardized and comparative evaluation frameworks, crucial for researchers, developers, and practitioners to identify the most suitable models for specific applications. They offer insights into model capabilities and limitations, which are invaluable in understanding the landscape of AI technologies.

| Leaderboard Name | Description |
|---|---|
| Hugging Face Open LLM Leaderboard | Evaluates open large language models using a unified framework to assess capabilities like knowledge, reasoning, and problem-solving. |
| Artificial Analysis LLM Performance Leaderboard | Focuses on evaluating models based on quality, price, speed, and other metrics, especially for serverless LLM API endpoints. |
| LMSYS Chatbot Arena Leaderboard | Uses human preference votes and the Elo ranking method to assess chatbot models through interactions with custom prompts and scenarios. |
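
The Elo method mentioned for the LMSYS Chatbot Arena Leaderboard updates two ratings after each head-to-head comparison. The following is the textbook Elo update, not the leaderboard's exact implementation; the starting rating of 1000, the K-factor of 32, and the model names are assumptions.

```python
from typing import Dict, List, Tuple


def expected_score(rating_a: float, rating_b: float) -> float:
    """Expected score of A against B under the Elo model."""
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400))


def update_elo(
    ratings: Dict[str, float],
    model_a: str,
    model_b: str,
    score_a: float,
    k: float = 32.0,
    initial: float = 1000.0,
) -> None:
    """Update ratings in place after one comparison.

    score_a is 1.0 if A was preferred, 0.0 if B was preferred, 0.5 for a tie.
    """
    ra = ratings.get(model_a, initial)
    rb = ratings.get(model_b, initial)
    ea = expected_score(ra, rb)
    ratings[model_a] = ra + k * (score_a - ea)
    # B's actual and expected scores are the complements of A's
    ratings[model_b] = rb + k * ((1.0 - score_a) - (1.0 - ea))


def leaderboard(ratings: Dict[str, float]) -> List[Tuple[str, float]]:
    # Highest rating first
    return sorted(ratings.items(), key=lambda item: item[1], reverse=True)


if __name__ == "__main__":
    ratings: Dict[str, float] = {}
    # Hypothetical votes: (model_a, model_b, score for model_a)
    votes = [
        ("model-x", "model-y", 1.0),
        ("model-y", "model-z", 0.5),
        ("model-x", "model-z", 1.0),
    ]
    for a, b, s in votes:
        update_elo(ratings, a, b, s)
    for name, rating in leaderboard(ratings):
        print(f"{name}: {rating:.1f}")
```

Because a win against a higher-rated model yields a larger gain than a win against a lower-rated one, rankings reflect whom a model beats, not only how often it wins.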

Metrics are quantitative criteria used to evaluate the performance of AI models on leaderboards. They provide a standardized way to measure and compare how well models perform specific tasks.

AI model accuracy is a metric representing the proportion of correct predictions made by a model out of the total predictions, especially important in classification tasks.

Stability ensures that an AI model delivers consistent performance over time and across different datasets, making it reliable for real-world applications.

Accuracy can be misleading with imbalanced datasets and may not reflect true model performance. Metrics like F1-score, precision, and recall are often used alongside accuracy for a more complete evaluation.

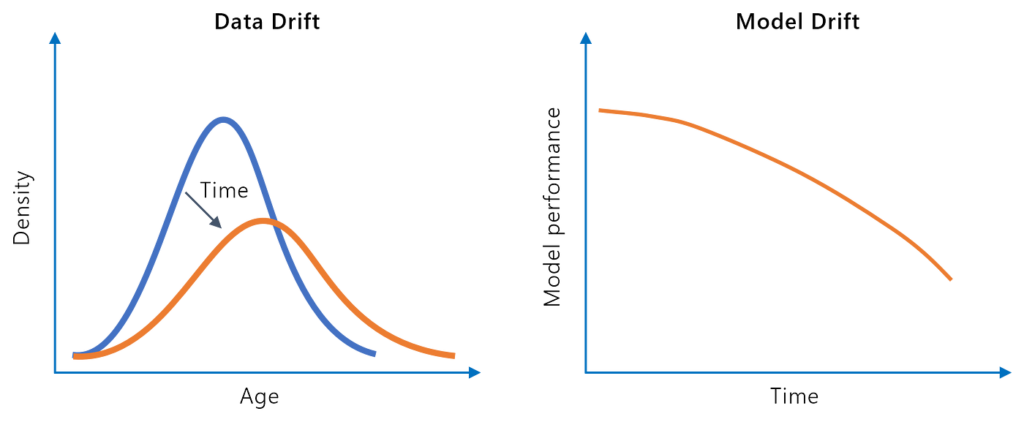

Model stability can be enhanced by regular monitoring, retraining with new data, managing data drift, and using techniques like transfer learning or online learning.
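
Managing data drift starts with detecting it. One widely used measure, swapped in here for illustration, is the Population Stability Index (PSI), which compares how a feature's values are distributed across bins in reference data versus recent production data. The bin edges, the smoothing constant `eps`, the synthetic feature values, and the 0.25 retraining threshold (a commonly cited rule of thumb) are all assumptions.

```python
import bisect
import math
from typing import List, Sequence


def bin_fractions(values: Sequence[float], edges: Sequence[float]) -> List[float]:
    """Share of values in each bin [edges[i], edges[i+1]); out-of-range values go to the end bins."""
    counts = [0] * (len(edges) - 1)
    for v in values:
        idx = bisect.bisect_right(edges, v) - 1
        idx = min(max(idx, 0), len(counts) - 1)
        counts[idx] += 1
    return [c / len(values) for c in counts]


def population_stability_index(
    reference: Sequence[float],
    current: Sequence[float],
    edges: Sequence[float],
    eps: float = 1e-6,
) -> float:
    """PSI = sum over bins of (current% - reference%) * ln(current% / reference%)."""
    psi = 0.0
    for ref_frac, cur_frac in zip(bin_fractions(reference, edges), bin_fractions(current, edges)):
        # eps avoids log(0) and division by zero for empty bins
        ref_frac = max(ref_frac, eps)
        cur_frac = max(cur_frac, eps)
        psi += (cur_frac - ref_frac) * math.log(cur_frac / ref_frac)
    return psi


def needs_retraining(psi: float, threshold: float = 0.25) -> bool:
    # 0.25 is a rule-of-thumb cutoff for a significant shift, not a universal constant
    return psi > threshold


if __name__ == "__main__":
    edges = [0.0, 0.25, 0.5, 0.75, 1.0]
    training_feature = [i / 100 for i in range(100)]          # spread evenly over [0, 1)
    production_feature = [0.5 + i / 200 for i in range(100)]  # shifted into [0.5, 1)
    psi = population_stability_index(training_feature, production_feature, edges)
    print(round(psi, 3), needs_retraining(psi))
```

In a monitoring job, a check like this would run per feature on a schedule, and a PSI above the chosen threshold would trigger investigation or retraining with the newer data.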

AI model leaderboards rank machine learning models based on their performance across various metrics and tasks, providing standardized evaluation frameworks for comparison and innovation.

Discover how FlowHunt helps you create accurate and stable AI models for automation, chatbots, and more. Enhance reliability and performance today.

Top-k accuracy is a machine learning evaluation metric that assesses if the true class is among the top k predicted classes, offering a comprehensive and forgiv...

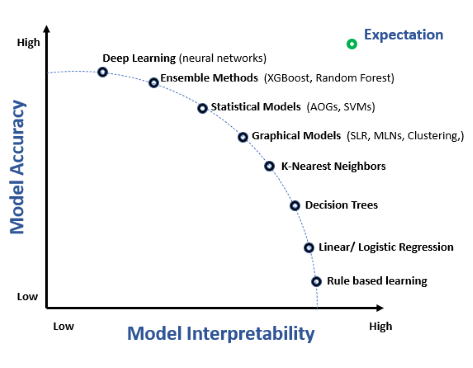

Model interpretability refers to the ability to understand, explain, and trust the predictions and decisions made by machine learning models. It is critical in ...

Model drift, or model decay, refers to the decline in a machine learning model’s predictive performance over time due to changes in the real-world environment. ...