Retrieval Augmented Generation (RAG)

Retrieval Augmented Generation (RAG) is an advanced AI framework that combines traditional information retrieval systems with generative large language models (...

Document reranking refines retrieved search results by prioritizing documents most relevant to a user’s query, improving the accuracy of AI and RAG systems.

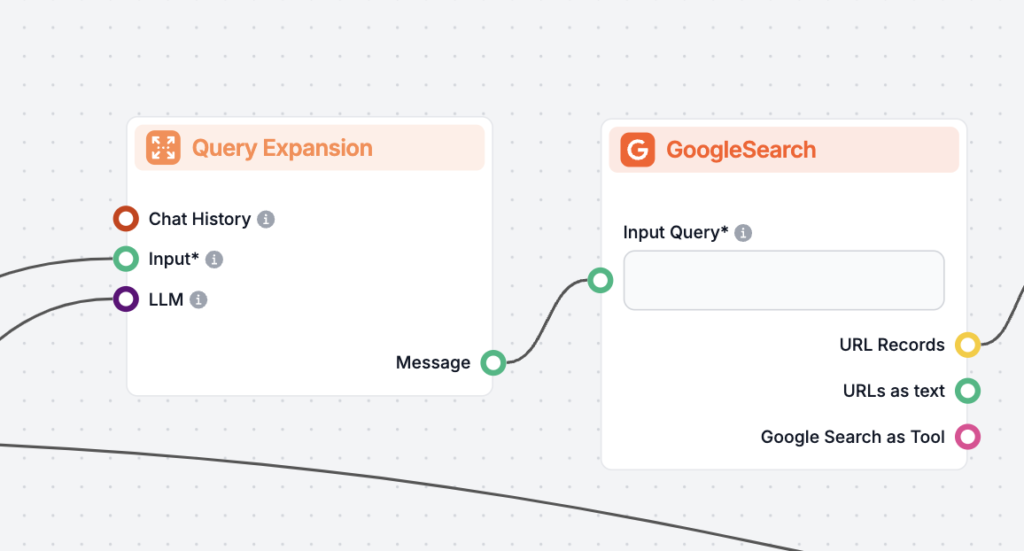

Document reranking reorders retrieved documents based on query relevance, refining search results. Query expansion enhances search by adding related terms, improving recall and addressing ambiguity. Combining these techniques in RAG systems boosts retrieval accuracy and response quality.

Document reranking is the process of reordering retrieved documents based on their relevance to the user’s query. After an initial retrieval step, reranking refines the results by evaluating each document’s relevance more precisely, ensuring that the most pertinent documents are prioritized.

Retrieval-Augmented Generation (RAG) is an advanced framework that combines the capabilities of Large Language Models (LLMs) with information retrieval systems. In RAG, when a user submits a query, the system retrieves relevant documents from a vast knowledge base and feeds this information into the LLM to generate informed and contextually accurate responses. This approach enhances the accuracy and relevance of AI-generated content by grounding it in factual data.

Definition

Query expansion is a technique used in information retrieval to enhance the effectiveness of search queries. It involves augmenting the original query with additional terms or phrases that are semantically related. The primary goal is to bridge the gap between the user’s intent and the language used in relevant documents, thereby improving the retrieval of pertinent information.

How It Works

In practice, query expansion can be achieved through various methods, including the pseudo-relevance feedback and LLM-based approaches covered later in this article.

By expanding the query, the retrieval system can cast a wider net, capturing documents that might have been missed due to variations in terminology or phrasing.
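One simple way to picture this "wider net" is synonym-based expansion. The sketch below is only an illustration under stated assumptions: the `SYNONYMS` table and the `expand_with_synonyms` helper are hypothetical names, and a real system would more likely derive related terms from a thesaurus, embeddings, or query logs.

```python
# Hypothetical, hand-written synonym table used only for illustration.
SYNONYMS = {
    "car": ["automobile", "vehicle"],
    "cheap": ["affordable", "inexpensive"],
}

def expand_with_synonyms(query: str, synonyms: dict[str, list[str]]) -> str:
    """Append known synonyms of each query term to the original query."""
    terms = query.lower().split()
    expanded = list(terms)
    for term in terms:
        for related in synonyms.get(term, []):
            if related not in expanded:  # avoid adding the same term twice
                expanded.append(related)
    return " ".join(expanded)

expanded = expand_with_synonyms("cheap car rental", SYNONYMS)
print(expanded)
```

The original terms stay at the front of the expanded query, so a retriever that weights early terms more heavily would still favor the user's own wording.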

Improving Recall

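Recall measures how many of the relevant documents in a collection the retrieval system actually finds. Query expansion enhances recall by letting the query match relevant documents that use different terminology or phrasing than the user did.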

Addressing Query Ambiguity

Users often submit short or ambiguous queries. Query expansion helps by adding related terms that give the retrieval system more context about the user’s likely intent.

Enhancing Document Matching

By including additional relevant terms, the system increases the likelihood of matching the query with documents that might use different vocabulary, thus improving the overall effectiveness of the retrieval process.

What Is PRF?

Pseudo-Relevance Feedback is an automatic query expansion method where the system assumes that the top-ranked documents from an initial search are relevant. It extracts significant terms from these documents to refine the original query.

How PRF Works
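The idea described above can be sketched in a few lines. This is a minimal illustration, not a production method: the function name `prf_expand`, the tiny stopword list, and the raw term-frequency scoring are all assumptions made for the example (real systems often weight terms with TF-IDF or a relevance model instead).

```python
from collections import Counter

# Small illustrative stopword list; an assumption, not a standard resource.
STOPWORDS = {"the", "a", "an", "of", "to", "in", "and", "is", "for", "on", "with", "was", "by"}

def prf_expand(query, ranked_docs, top_docs=2, num_terms=3):
    """Expand `query` with the most frequent terms from the top-ranked documents."""
    query_terms = query.lower().split()
    counts = Counter()
    for doc in ranked_docs[:top_docs]:  # treat the top results as if they were relevant
        for token in doc.lower().split():
            token = token.strip(".,;:!?")
            if token and token not in STOPWORDS and token not in query_terms:
                counts[token] += 1
    new_terms = [term for term, _ in counts.most_common(num_terms)]
    return " ".join(query_terms + new_terms)

# Results of a hypothetical initial search, best match first.
initial_results = [
    "Revenue growth was driven by pricing and marketing.",
    "Marketing campaigns and pricing changes lifted revenue.",
    "The office moved to a new building.",
]
expanded_query = prf_expand("revenue increase", initial_results)
print(expanded_query)
```

Because the top documents are only assumed to be relevant, a poor initial ranking would feed off-topic terms into the expanded query, which is the main risk of this approach.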

Benefits and Drawbacks

Leveraging Large Language Models

With advancements in AI, LLMs like GPT-3 and GPT-4 can generate sophisticated query expansions by understanding context and semantics.

How LLM-Based Expansion Works

Example

Original Query:

“What were the most important factors that contributed to increases in revenue?”

LLM-Generated Answer:

“In the fiscal year, several key factors contributed to the significant increase in the company’s revenue, including successful marketing campaigns, product diversification, customer satisfaction initiatives, strategic pricing, and investments in technology.”

Expanded Query:

“Original Query: What were the most important factors that contributed to increases in revenue?

Hypothetical Answer: [LLM-Generated Answer]”
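The example above can be sketched as a small helper that asks a model for a hypothetical answer and then formats the expanded query in the same layout. The `generate` callable and the `fake_llm` stand-in are assumptions made so the snippet runs without any external service; in practice `generate` would wrap whichever LLM client is in use.

```python
from typing import Callable

def expand_with_hypothetical_answer(query: str, generate: Callable[[str], str]) -> str:
    """Build an expanded query from the original query plus an LLM-written answer."""
    prompt = f"Write a short, plausible answer to the question: {query}"
    hypothetical_answer = generate(prompt).strip()
    return f"Original Query: {query}\n\nHypothetical Answer: {hypothetical_answer}"

def fake_llm(prompt: str) -> str:
    # Stand-in for a real LLM call; returns a canned answer for illustration.
    return "Successful marketing campaigns and strategic pricing increased revenue."

expanded_query = expand_with_hypothetical_answer(
    "What were the most important factors that contributed to increases in revenue?",
    fake_llm,
)
print(expanded_query)
```

The expanded string, rather than the bare question, is then what gets embedded or searched, since the hypothetical answer tends to share vocabulary with the documents that contain the real answer.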

Advantages

Challenges

Step-by-Step Process

Benefits in RAG Systems

Why Reranking Is Necessary

Overview

Cross-encoders are neural network models that take a pair of inputs (the query and a document) and output a relevance score. Unlike bi-encoders, which encode query and document separately, cross-encoders process them jointly, allowing for richer interaction between the two.

How Cross-Encoders Work

Advantages

Challenges

What Is ColBERT?

ColBERT (Contextualized Late Interaction over BERT) is a retrieval model designed to balance efficiency and effectiveness. It uses a late interaction mechanism that allows for detailed comparison between query and document tokens without heavy computational costs.
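A rough sketch of the late interaction scoring step, often called MaxSim, may help here: each query token embedding is compared with every document token embedding, the best match per query token is kept, and those maxima are summed. The two-dimensional vectors below are made up for illustration; in ColBERT the embeddings come from a BERT encoder, and document embeddings can be computed ahead of time.

```python
def dot(u, v):
    """Dot product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))

def maxsim_score(query_vecs, doc_vecs):
    """Sum, over query tokens, of the best similarity with any document token."""
    return sum(max(dot(q, d) for d in doc_vecs) for q in query_vecs)

# Toy token embeddings (assumed values, one vector per token).
query_vecs = [[1.0, 0.0], [0.0, 1.0]]
doc_a = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]  # contains close matches for both query tokens
doc_b = [[0.5, 0.5], [0.0, 0.5]]              # only partial matches

score_a = maxsim_score(query_vecs, doc_a)
score_b = maxsim_score(query_vecs, doc_b)
print(score_a, score_b)
```

Only the cheap max-and-sum step depends on the query at search time, which is where the efficiency relative to a full cross-encoder pass comes from.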

How ColBERT Works

Advantages

Use Cases

Overview

FlashRank is a lightweight and fast reranking library that uses state-of-the-art cross-encoders. It’s designed to integrate easily into existing pipelines and improve reranking performance with minimal overhead.

Features

Example Usage

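    from flashrank import Ranker, RerankRequest

    query = 'What were the most important factors that contributed to increases in revenue?'

    # FlashRank takes passages as dicts with a "text" field; `documents` is assumed
    # to be a list of strings returned by the initial retrieval step.
    passages = [{"id": i, "text": text} for i, text in enumerate(documents)]

    ranker = Ranker(model_name="ms-marco-MiniLM-L-12-v2")
    rerank_request = RerankRequest(query=query, passages=passages)
    results = ranker.rerank(rerank_request)  # passages ordered by relevance score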

Benefits

Process

Considerations

Complementary Techniques

Benefits of Combining

Example Workflow

Query Expansion with LLM:

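    def expand_query(query):
        prompt = f"Provide additional related queries for: '{query}'"
        # `llm` is a placeholder client; generate() is assumed to return text
        # with one related query per line.
        related = [line.strip() for line in llm.generate(prompt).splitlines() if line.strip()]
        # Join the original query and the related queries into one search string.
        return ' '.join([query] + related)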

Initial Retrieval:

    expanded_query = expand_query(query)
    documents = vector_db.retrieve_documents(expanded_query)  # `vector_db` is a placeholder retriever

Document Reranking:

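    from sentence_transformers import CrossEncoder

    cross_encoder = CrossEncoder('cross-encoder/ms-marco-MiniLM-L-6-v2')

    # Score each (query, document) pair jointly; a higher score means more relevant.
    pairs = [[query, doc.text] for doc in documents]
    scores = cross_encoder.predict(pairs)

    # Sort on the score only, so ties never fall back to comparing document objects.
    ranked_docs = [doc for _, doc in sorted(zip(scores, documents), key=lambda pair: pair[0], reverse=True)]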

Selecting Top Documents:

    top_k = 5  # example value; tune to fit the LLM's context window
    top_documents = ranked_docs[:top_k]

Generating Response with LLM:

    context = '\n'.join([doc.text for doc in top_documents])
    prompt = f"Answer the following question using the context provided:\n\nQuestion: {query}\n\nContext:\n{context}"
    response = llm.generate(prompt)
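To see the rerank, select, and prompt steps wired together without any external services, the following sketch replaces the cross-encoder with a toy word-overlap score. Everything here (`overlap_score`, `rerank`, `build_prompt`, and the sample documents) is a hypothetical stand-in chosen so the snippet runs on its own; it shows the data flow, not a recommended scoring function.

```python
def overlap_score(query: str, text: str) -> float:
    """Toy relevance score: fraction of query words that appear in the text."""
    query_words = set(query.lower().split())
    text_words = set(text.lower().split())
    return len(query_words & text_words) / len(query_words) if query_words else 0.0

def rerank(query, documents, score_fn, top_k):
    """Score every candidate, sort by score (highest first), keep the top_k."""
    scored = [(score_fn(query, doc), doc) for doc in documents]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for _, doc in scored[:top_k]]

def build_prompt(query, documents):
    """Assemble the LLM prompt from the question and the selected context."""
    context = "\n".join(documents)
    return (
        "Answer the following question using the context provided:\n\n"
        f"Question: {query}\n\nContext:\n{context}"
    )

# Candidates as they might come back from an initial retrieval step.
candidates = [
    "Office relocation schedule for next year",
    "Marketing campaigns increased revenue in the fiscal year",
    "Strategic pricing contributed to revenue growth",
]
query = "what increased revenue"
top_documents = rerank(query, candidates, overlap_score, top_k=2)
prompt = build_prompt(query, top_documents)
print(prompt)
```

Swapping `overlap_score` for a function that calls a cross-encoder would leave the rest of the flow unchanged, which is one reason to keep the scoring function as a parameter.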

Monitoring and Optimization

Scenario

A company uses an AI chatbot to handle customer queries about their products and services. Customers often ask questions in various ways, using different terminologies or phrases.

Challenges

Implementation

Benefits

Scenario

Researchers use an AI assistant to find relevant academic papers, data, and insights for their work.

Challenges

Implementation

Document reranking is the process of reordering retrieved documents after an initial search based on their relevance to a user's query. It ensures that the most relevant and useful documents are prioritized, improving the quality of AI-powered search and chatbots.

In RAG systems, document reranking uses models like cross-encoders or ColBERT to assess the relevance of each document to the user’s query, after an initial retrieval. This step helps refine and optimize the set of documents provided to large language models for generating accurate responses.

Query expansion is a technique in information retrieval that augments the original user query with related terms or phrases, increasing recall and addressing ambiguity. In RAG systems, it helps retrieve more relevant documents that might use different terminology.

Key methods for document reranking include cross-encoder neural models (which jointly encode the query and document for high-precision scoring), ColBERT (which uses late interaction for efficient scoring), and libraries like FlashRank for fast, accurate reranking.

Query expansion broadens the search to retrieve more potentially relevant documents, while document reranking filters and refines these results to ensure only the most pertinent documents are passed to the AI for response generation, maximizing both recall and precision.
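One way to check whether the combination is paying off is to measure precision and recall over the top results before and after reranking. The sketch below uses made-up document IDs and relevance labels; the helper name `precision_recall_at_k` is an assumption for this example.

```python
def precision_recall_at_k(ranked_ids, relevant_ids, k):
    """Precision and recall computed over the first k ranked results."""
    top_k = ranked_ids[:k]
    hits = sum(1 for doc_id in top_k if doc_id in relevant_ids)
    precision = hits / len(top_k) if top_k else 0.0
    recall = hits / len(relevant_ids) if relevant_ids else 0.0
    return precision, recall

# Hypothetical labels: which documents are actually relevant to the query.
relevant = {"d1", "d4", "d7"}

# The same six retrieved documents, before and after reranking.
before_rerank = ["d2", "d1", "d3", "d4", "d5", "d7"]
after_rerank = ["d1", "d4", "d7", "d2", "d3", "d5"]

p_before, r_before = precision_recall_at_k(before_rerank, relevant, k=3)
p_after, r_after = precision_recall_at_k(after_rerank, relevant, k=3)
print(p_before, r_before, p_after, r_after)
```

In this toy case the retrieved set is identical in both runs; reranking only changes the order, so the gain shows up in what lands inside the top three slots passed to the LLM.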

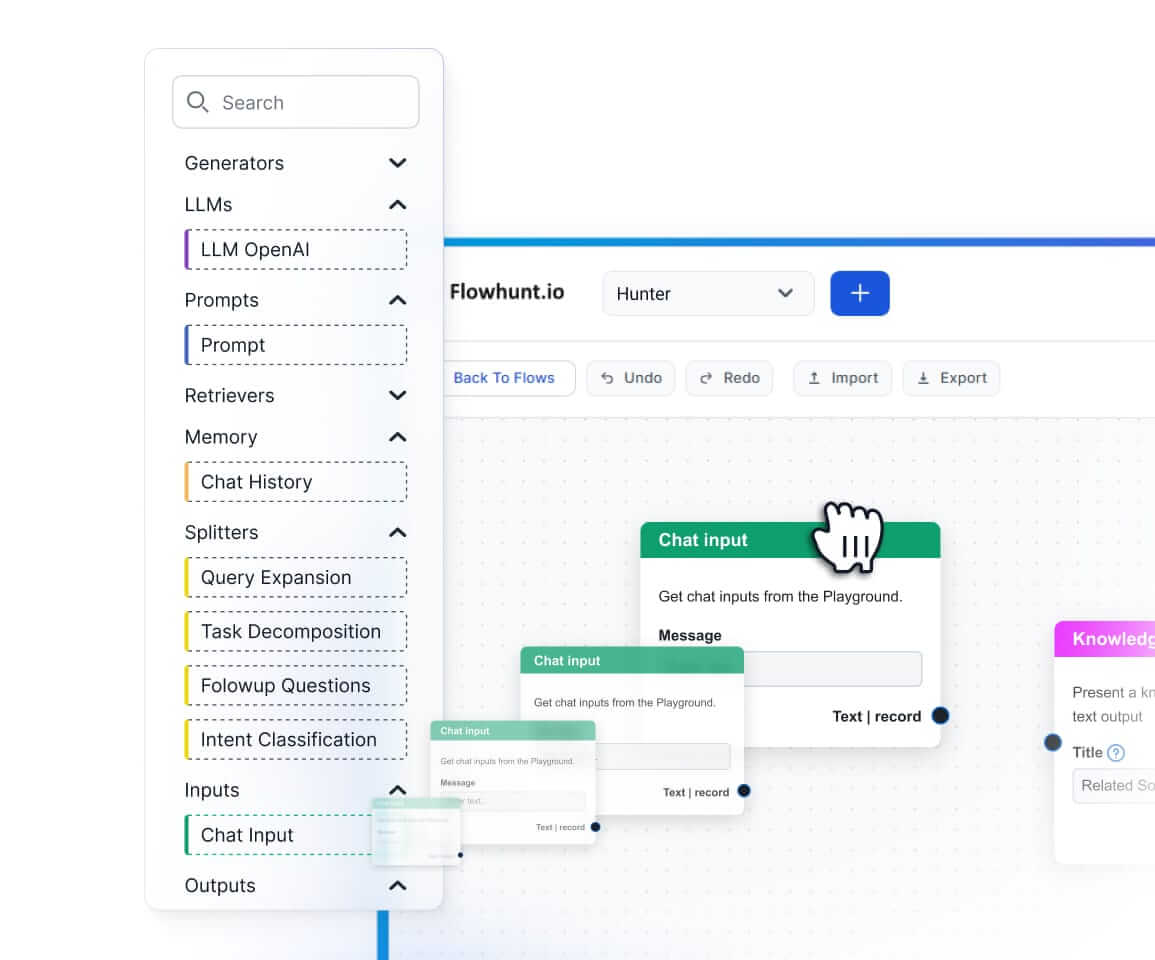
