Word Embeddings

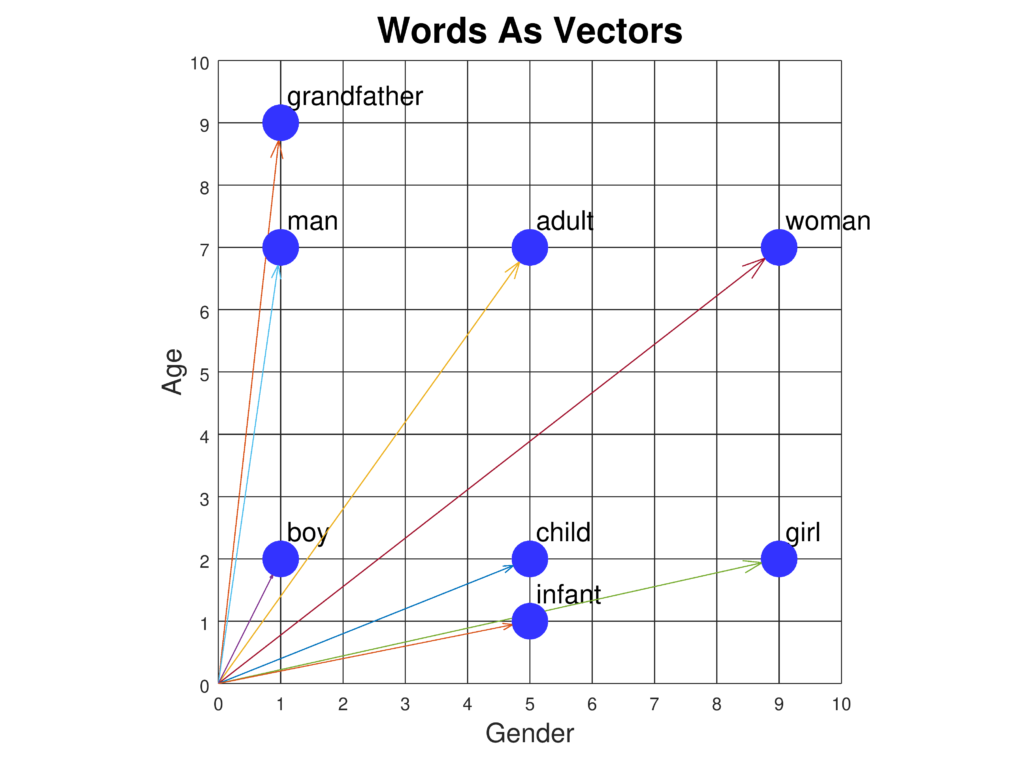

Word embeddings are sophisticated representations of words in a continuous vector space, capturing semantic and syntactic relationships for advanced NLP tasks l...

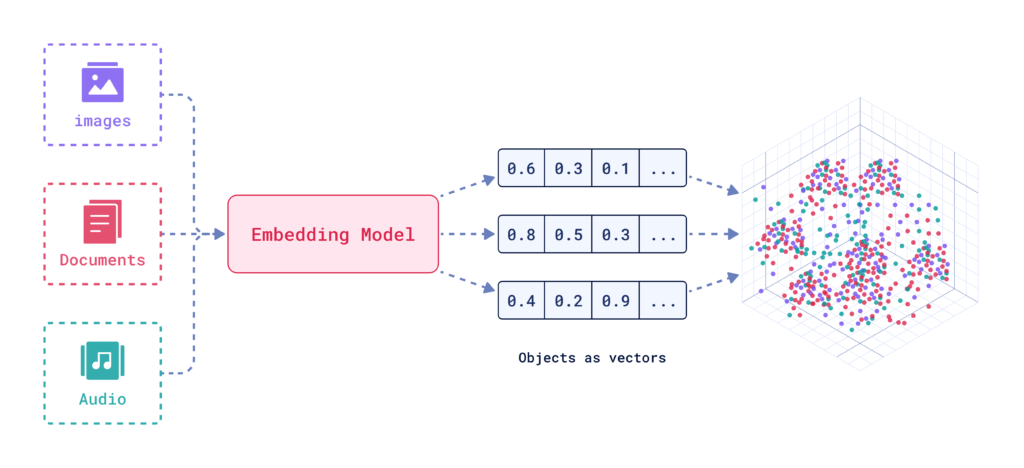

An embedding vector numerically represents data in a multidimensional space, enabling AI systems to capture semantic relationships for tasks like classification, clustering, and recommendations.

An embedding vector is a dense numerical representation of data where each piece of data is mapped to a point in a multidimensional space. This mapping is designed to capture the semantic information and contextual relationships between different data points. Similar data points are positioned closer together in this space, facilitating tasks such as classification, clustering, and recommendation.
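The idea that similar data points sit closer together can be made concrete with cosine similarity, one common way to compare embedding vectors. The sketch below uses tiny, made-up 4-dimensional vectors (real embeddings usually have hundreds of dimensions); the words and numbers are illustrative assumptions, not output from any model.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Hypothetical embeddings, invented for illustration only.
embeddings = {
    "cat": [0.9, 0.8, 0.1, 0.0],
    "dog": [0.8, 0.9, 0.2, 0.1],
    "car": [0.1, 0.0, 0.9, 0.8],
}

cat_dog = cosine_similarity(embeddings["cat"], embeddings["dog"])
cat_car = cosine_similarity(embeddings["cat"], embeddings["car"])

# The related pair should score higher than the unrelated pair.
print(f"cat vs dog: {cat_dog:.3f}")
print(f"cat vs car: {cat_car:.3f}")
```

A score near 1 means the vectors point in nearly the same direction, while a score near 0 means they are close to orthogonal.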

Embedding vectors are essentially arrays of numbers that encapsulate the intrinsic properties and relationships of the data they represent. Once complex data types are translated into these vectors, AI systems can compare, search, and group items using ordinary vector operations such as distances and dot products.

Embedding vectors are foundational to many AI and ML applications. They simplify the representation of high-dimensional data, making it easier to analyze and interpret.

Creating embedding vectors involves several steps:

Hugging Face’s Transformers library offers state-of-the-art transformer models like BERT, RoBERTa, and GPT-2. These models are pre-trained on vast datasets and provide high-quality embeddings that can be fine-tuned for specific tasks, making them well suited to building robust NLP applications.

First, ensure you have the transformers library installed in your Python environment. The examples below return PyTorch tensors, so PyTorch is needed as well. You can install both using pip:

```shell
pip install transformers torch
```

Next, load a pre-trained model from the Hugging Face model hub. For this example, we’ll use BERT.

```python
from transformers import BertModel, BertTokenizer

model_name = 'bert-base-uncased'
tokenizer = BertTokenizer.from_pretrained(model_name)
model = BertModel.from_pretrained(model_name)
```

Tokenize your input text to prepare it for the model.

```python
inputs = tokenizer("Hello, Huggingface!", return_tensors='pt')
```

Pass the tokenized text through the model to obtain embeddings.

```python
outputs = model(**inputs)
# One vector per token: shape (batch_size, num_tokens, hidden_size)
embedding_vectors = outputs.last_hidden_state
```

Here’s a complete example demonstrating the steps mentioned above:

```python
import torch
from transformers import BertModel, BertTokenizer

# Load pre-trained BERT model and tokenizer
model_name = 'bert-base-uncased'
tokenizer = BertTokenizer.from_pretrained(model_name)
model = BertModel.from_pretrained(model_name)

# Tokenize input text
text = "Hello, Huggingface!"
inputs = tokenizer(text, return_tensors='pt')

# Generate embedding vectors; no gradients are needed for inference
with torch.no_grad():
    outputs = model(**inputs)
embedding_vectors = outputs.last_hidden_state

print(embedding_vectors.shape)  # (batch_size, num_tokens, hidden_size)
print(embedding_vectors)
```
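Note that `last_hidden_state` holds one vector per token rather than a single vector for the whole text. One common way to collapse these into a single sentence vector is mean pooling over the real (non-padding) tokens. The sketch below shows the idea in plain Python on made-up numbers; the function name `mean_pool` and the toy data are assumptions for illustration, and in practice the same averaging would be done with tensor operations on the model output and the tokenizer's attention mask.

```python
def mean_pool(token_vectors, attention_mask):
    """Average the vectors of real tokens, skipping padded positions."""
    dim = len(token_vectors[0])
    total = [0.0] * dim
    count = 0
    for vector, keep in zip(token_vectors, attention_mask):
        if keep:
            total = [t + v for t, v in zip(total, vector)]
            count += 1
    if count == 0:
        raise ValueError("attention_mask selects no tokens")
    return [t / count for t in total]

# Hypothetical per-token vectors (3 tokens, 2 dimensions).
# The last position is padding and must not affect the result.
token_vectors = [[1.0, 2.0], [3.0, 4.0], [100.0, 100.0]]
attention_mask = [1, 1, 0]

sentence_vector = mean_pool(token_vectors, attention_mask)
print(sentence_vector)  # [2.0, 3.0]
```

Mean pooling is only one option; some pipelines use the vector of the first (`[CLS]`) token instead, and which works better depends on the model and task.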

Stochastic Neighbor Embedding (SNE) is an early method for dimensionality reduction, developed by Geoffrey Hinton and Sam Roweis. It works by converting pairwise distances in the high-dimensional space into neighbor probabilities and searching for a lower-dimensional arrangement of the points that preserves those probabilities.
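As a rough illustration of the pairwise similarities SNE starts from, the sketch below turns distances into Gaussian-based neighbor probabilities, where each row describes how likely point i is to pick each other point as its neighbor. It assumes a single fixed `sigma` for every point, which is a simplification (the original method tunes the bandwidth per point), and the sample points are made up.

```python
import math

def squared_distance(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b))

def sne_similarities(points, sigma=1.0):
    """Return P where P[i][j] is the probability that point i picks j as a neighbor."""
    n = len(points)
    P = []
    for i in range(n):
        # Gaussian weight for every other point; a point is never its own neighbor.
        weights = [
            0.0 if i == j
            else math.exp(-squared_distance(points[i], points[j]) / (2 * sigma ** 2))
            for j in range(n)
        ]
        row_sum = sum(weights)
        P.append([w / row_sum for w in weights])
    return P

# Hypothetical data: two nearby points and one distant point.
points = [[0.0, 0.0], [0.1, 0.0], [5.0, 5.0]]
P = sne_similarities(points)

for row in P:
    print([round(p, 4) for p in row])
```

Each row sums to 1, and nearby points receive almost all of the probability mass.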

An improvement over SNE, t-distributed Stochastic Neighbor Embedding (t-SNE) is widely used for visualizing high-dimensional data. It minimizes the Kullback-Leibler divergence between two distributions: one representing pairwise similarities in the original space and the other representing similarities in the reduced space, where a heavy-tailed Student-t distribution is used.
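The objective t-SNE minimizes can be sketched in a few lines: low-dimensional similarities come from a Student-t kernel, and the divergence measures how far they are from the high-dimensional similarities. The matrix `P` and both layouts below are hypothetical values chosen for illustration, and the sketch only evaluates the objective; it does not perform the gradient-based optimization a real implementation runs.

```python
import math

def student_t_similarities(low_dim_points):
    """Joint similarities Q in the low-dimensional space (Student-t, 1 degree of freedom)."""
    n = len(low_dim_points)
    weights = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(n):
            if i != j:
                d2 = sum((a - b) ** 2
                         for a, b in zip(low_dim_points[i], low_dim_points[j]))
                weights[i][j] = 1.0 / (1.0 + d2)
    total = sum(sum(row) for row in weights)
    return [[w / total for w in row] for row in weights]

def kl_divergence(P, Q):
    """KL(P || Q), summed over all pairs with nonzero P."""
    return sum(
        p * math.log(p / q)
        for p_row, q_row in zip(P, Q)
        for p, q in zip(p_row, q_row)
        if p > 0
    )

# Hypothetical high-dimensional similarities: points 0 and 1 are close, 2 is far.
P = [
    [0.00, 0.40, 0.05],
    [0.40, 0.00, 0.05],
    [0.05, 0.05, 0.00],
]

good_layout = [[0.0, 0.0], [0.5, 0.0], [4.0, 0.0]]  # keeps 0 and 1 together
bad_layout = [[0.0, 0.0], [4.0, 0.0], [0.5, 0.0]]   # separates 0 and 1

kl_good = kl_divergence(P, student_t_similarities(good_layout))
kl_bad = kl_divergence(P, student_t_similarities(bad_layout))
print(f"good layout: {kl_good:.4f}, bad layout: {kl_bad:.4f}")
```

The layout that keeps similar points together yields the lower divergence, which is the direction the optimization pushes toward.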

Uniform Manifold Approximation and Projection (UMAP) is a more recent technique that is typically faster than t-SNE and often preserves more of the global data structure. It works by constructing a weighted neighbor graph of the high-dimensional data and optimizing a low-dimensional layout whose graph is as structurally similar as possible.
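The graph UMAP builds in the high-dimensional space starts from each point's nearest neighbors. The sketch below covers only that first step, a plain k-nearest-neighbor graph on made-up points; it is not UMAP itself, which additionally weights the edges and then optimizes a low-dimensional layout. The function name `knn_graph` is an assumption for illustration.

```python
import math

def knn_graph(points, k):
    """Map each point index to the indices of its k nearest neighbors."""
    graph = {}
    for i, p in enumerate(points):
        # Distance to every other point, paired with that point's index.
        distances = [
            (math.dist(p, q), j) for j, q in enumerate(points) if j != i
        ]
        distances.sort()
        graph[i] = [j for _, j in distances[:k]]
    return graph

# Hypothetical data: one cluster of three points and one cluster of two.
points = [[0.0, 0.0], [0.1, 0.0], [0.0, 0.2], [5.0, 5.0], [5.1, 5.0]]
graph = knn_graph(points, k=2)

for node, neighbors in graph.items():
    print(node, "->", neighbors)
```

This brute-force search compares every pair of points, which is fine for a toy example; libraries that implement UMAP rely on approximate nearest-neighbor search to scale to large datasets.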

Several tools and libraries facilitate the visualization of embedding vectors:

An embedding vector is a dense numerical representation of data, mapping each data point to a position in a multidimensional space to capture semantic and contextual relationships.

Embedding vectors are foundational in AI for simplifying complex data, enabling tasks such as text classification, image recognition, and personalized recommendations.

Embedding vectors can be generated using pre-trained models like BERT from the Hugging Face Transformers library. By tokenizing your data and passing it through such models, you obtain high-quality embeddings for further analysis.

Dimensionality reduction techniques like t-SNE and UMAP are commonly used to visualize high-dimensional embedding vectors, helping to interpret and analyze data patterns.
