Model Collapse

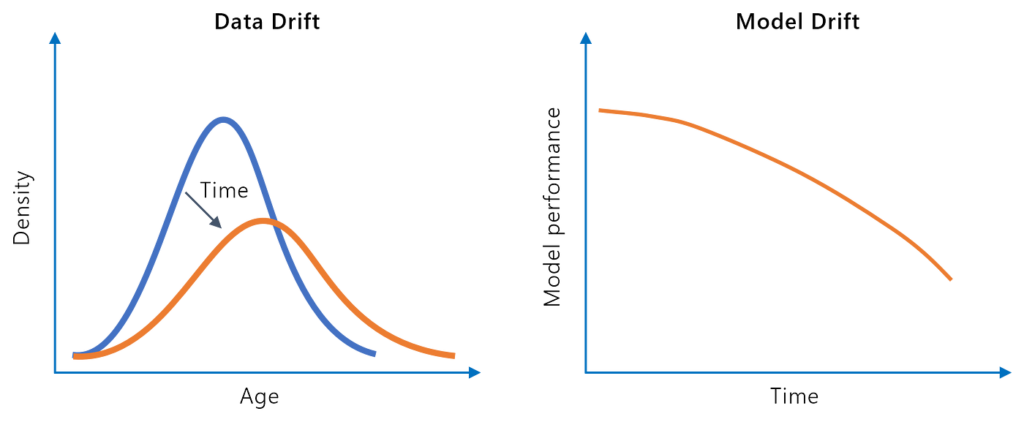


Model collapse occurs when AI models degrade due to over-reliance on synthetic data, resulting in less diverse, creative, and original outputs.

Model collapse is a phenomenon in artificial intelligence (AI) in which a model's performance degrades across successive rounds of training, especially when it is trained on synthetic or AI-generated data. The degradation shows up as reduced output diversity, a tendency toward “safe” responses, and a diminished ability to produce creative or original content.
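Reduced output diversity can be tracked with a simple metric. The sketch below computes distinct-n, the ratio of unique n-grams to total n-grams across a batch of outputs, a metric commonly used in text-generation research. The whitespace tokenization and the example sentences are illustrative assumptions.

```python
from typing import Iterable, List, Tuple


def ngrams(tokens: List[str], n: int) -> List[Tuple[str, ...]]:
    """Return all contiguous n-grams in a list of tokens."""
    return [tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]


def distinct_n(outputs: Iterable[str], n: int = 1) -> float:
    """Ratio of unique n-grams to total n-grams across a batch of model outputs.

    Values near 1.0 indicate varied outputs; values falling toward 0 indicate
    the model keeps repeating itself. Lowercased whitespace tokenization is a
    simplifying assumption.
    """
    all_ngrams: List[Tuple[str, ...]] = []
    for text in outputs:
        all_ngrams.extend(ngrams(text.lower().split(), n))
    if not all_ngrams:
        return 0.0
    return len(set(all_ngrams)) / len(all_ngrams)


varied = ["the cat sat on the mat", "a dog chased the ball", "rain fell all night"]
repetitive = ["the cat sat on the mat"] * 3
print(distinct_n(varied, 2), distinct_n(repetitive, 2))  # prints 1.0 0.3333333333333333
```

Tracking this number across model versions, on the same prompts, gives a rough early signal that outputs are becoming homogeneous.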

Model collapse occurs when AI models, particularly generative models, lose their effectiveness through repeated training on AI-generated content. Over successive generations, these models forget the true underlying data distribution, losing rare events in its tails first, which leads to increasingly homogeneous and less diverse outputs.
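The generational mechanism can be seen in a toy setting often used to explain model collapse: fit a Gaussian to data, draw a finite synthetic sample from the fit, refit on that sample alone, and repeat. This is a minimal sketch, assuming a one-dimensional Gaussian N(0, 1) stands in for real human data; the sample size, generation count, and seed are arbitrary choices.

```python
import math
import random


def fit_gaussian(data):
    """Maximum-likelihood mean and standard deviation of a list of floats."""
    mu = math.fsum(data) / len(data)
    var = math.fsum((x - mu) ** 2 for x in data) / len(data)
    return mu, math.sqrt(var)


def simulate_generations(generations=200, sample_size=10, seed=0):
    """Return the fitted standard deviation of every generation, starting at generation 0."""
    rng = random.Random(seed)
    mu, sigma = 0.0, 1.0  # generation 0: the "real" data distribution, assumed N(0, 1)
    spreads = [sigma]
    for _ in range(generations):
        # Each generation sees only a finite sample produced by the previous model...
        synthetic = [rng.gauss(mu, sigma) for _ in range(sample_size)]
        # ...and is fit on that synthetic sample alone.
        mu, sigma = fit_gaussian(synthetic)
        spreads.append(sigma)
    return spreads


spreads = simulate_generations()
for gen in (0, 50, 100, 200):
    print(f"generation {gen:3d}: fitted std = {spreads[gen]:.3g}")
```

Because each generation learns from a small sample of its predecessor's output, estimation errors compound and the fitted spread tends to shrink toward zero, so the tails of the original distribution disappear first.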

Model collapse matters because it threatens the future of generative AI. As more online content is generated by AI, the data collected to train new models becomes polluted with synthetic content, which lowers the quality of future models' outputs. The result can be a self-reinforcing cycle in which each generation of models is trained on lower-quality data than the last, making high-quality models progressively harder to train.

Model collapse typically occurs due to several intertwined factors:

Over-reliance on synthetic data: When AI models are trained primarily on AI-generated content, they learn to mimic that content's patterns instead of the complexity of real-world, human-generated data.

Training biases: Massive datasets often contain inherent biases. To avoid generating offensive or controversial outputs, models may be trained to produce safe, bland responses, which further reduces the diversity of their outputs.

Feedback loops: As models generate less creative output, that uninspiring AI-generated content can be fed back into the training data, creating a feedback loop that further entrenches the models' limitations.

Reward hacking: AI models trained against a reward signal may learn to optimize the metric itself, effectively “cheating” the system by producing responses that maximize reward but lack creativity or originality.
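Reward hacking can be illustrated with a deliberately naive proxy metric. In this hypothetical sketch, the reward designer assumed that hedging, “safe” phrases signal a good answer; the phrases, the scoring rule, and both candidate responses are invented for illustration and do not describe any real reward model.

```python
# Hypothetical proxy reward: the designer assumed these "safe" phrases signal quality.
SAFE_PHRASES = ("it depends", "consult a professional", "there are many perspectives")


def proxy_reward(response: str) -> int:
    """Count occurrences of safe phrases in the response (a deliberately naive metric)."""
    text = response.lower()
    return sum(text.count(phrase) for phrase in SAFE_PHRASES)


def pick_best(candidates):
    """Select the candidate with the highest proxy reward, as a reward-driven optimizer would."""
    return max(candidates, key=proxy_reward)


candidates = [
    "Boil the pasta for 9 to 11 minutes, then taste a piece to check that it is al dente.",
    "It depends. There are many perspectives. Consult a professional. It depends.",
]
best = pick_best(candidates)
print(best)  # the empty, hedge-only answer wins
```

The optimizer never "intends" to cheat; it maximizes the number it is given. Any measurable proxy that diverges from what users actually value can be exploited this way.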

The primary cause of model collapse is the excessive reliance on synthetic data for training. When models are trained on data that is itself generated by other models, the nuances and complexities of human-generated data are lost.

As the internet becomes inundated with AI-generated content, finding and utilizing high-quality human-generated data becomes increasingly difficult. This pollution of training data leads to models that are less accurate and more prone to collapse.

Training on repetitive and homogeneous data leads to a loss of diversity in the model’s outputs. Over time, the model forgets less common but important aspects of the data, further degrading its performance.
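The loss of less common data can be sketched with a discrete distribution. Assume a hypothetical Zipf-like "human" distribution over 50 categories (a few common ones and a long tail of rare ones); each generation's model is simply the empirical frequency of a finite sample drawn from the previous generation. Once a rare category draws zero samples, it gets zero probability and can never come back.

```python
import random
from collections import Counter


def support_over_generations(num_categories=50, sample_size=200, generations=100, seed=1):
    """Return how many categories still have nonzero probability after each generation."""
    rng = random.Random(seed)
    categories = list(range(num_categories))
    # Hypothetical Zipf-like starting distribution: weight proportional to 1 / rank.
    weights = [1.0 / (rank + 1) for rank in categories]
    support_sizes = [sum(w > 0 for w in weights)]
    for _ in range(generations):
        # The current model generates a finite "synthetic dataset".
        sample = rng.choices(categories, weights=weights, k=sample_size)
        counts = Counter(sample)
        # The next model is fit to that sample: its probabilities are the observed frequencies.
        weights = [counts[c] / sample_size for c in categories]
        support_sizes.append(sum(w > 0 for w in weights))
    return support_sizes


sizes = support_over_generations()
print(f"categories alive at generation 0: {sizes[0]}, after 100 generations: {sizes[-1]}")
```

Because a category with zero weight is never sampled again, the number of surviving categories can only stay the same or fall, which mirrors how a model retrained on its own outputs forgets rare but important cases.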

Model collapse can lead to several noticeable effects, including:

Collapsed models struggle to innovate or push boundaries in their respective fields, leading to stagnation in AI development.

If models consistently default to “safe” responses, meaningful progress in AI capabilities is hindered.

Model collapse makes AI systems less capable of tackling real-world problems that require nuanced understanding and flexible solutions.

Since model collapse often results from biases in training data, it risks reinforcing existing stereotypes and unfairness.

Generative adversarial networks (GANs), in which a generator creates realistic data and a discriminator tries to distinguish real from fake data, are prone to a related failure called mode collapse. It occurs when the generator produces only a limited variety of outputs, failing to capture the full diversity of the real data.
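A common way to check a GAN for mode collapse on toy data is to train it on a known mixture, such as eight Gaussians arranged on a ring, and count how many mixture components the generated samples actually land near. The sketch below implements only that counting step; the radius and hit threshold are assumptions, and the sample points are hand-written stand-ins for generator output.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float]


def modes_covered(samples: Sequence[Point], centers: Sequence[Point],
                  radius: float = 0.5, min_hits: int = 1) -> int:
    """Count mode centers that have at least `min_hits` samples within `radius`."""
    hits = [0] * len(centers)
    for x, y in samples:
        for i, (cx, cy) in enumerate(centers):
            if math.hypot(x - cx, y - cy) <= radius:
                hits[i] += 1
                break  # assumes the radius is small enough that modes do not overlap
    return sum(h >= min_hits for h in hits)


# Eight mode centers evenly spaced on a circle of radius 2.
centers = [(2 * math.cos(2 * math.pi * k / 8), 2 * math.sin(2 * math.pi * k / 8)) for k in range(8)]
healthy = [(cx + 0.1, cy) for cx, cy in centers]  # one sample near every mode
collapsed = [(centers[0][0] + 0.05 * j, centers[0][1]) for j in range(8)]  # all near one mode
print(modes_covered(healthy, centers), modes_covered(collapsed, centers))  # prints 8 1
```

Both sample sets contain eight points, but only the first covers the whole target; a per-sample realism score alone would not reveal the difference.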

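Variational autoencoders (VAEs), which encode data into a lower-dimensional latent space and then decode it back, can suffer an analogous failure known as posterior collapse, in which the decoder learns to ignore some or all of the latent code, leading to less diverse and less creative outputs.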
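For VAEs, a common diagnostic for this kind of collapse (usually called posterior collapse) is the KL divergence between each latent dimension's approximate posterior and the standard normal prior. Dimensions whose average KL is near zero carry almost no information about the input. This is a minimal sketch, assuming a diagonal Gaussian encoder that outputs a mean and a log-variance per dimension; the 0.01-nat threshold and the encoder outputs are hypothetical.

```python
import math
from typing import List, Sequence


def kl_to_standard_normal(mu: float, log_var: float) -> float:
    """KL( N(mu, exp(log_var)) || N(0, 1) ) for a single latent dimension, in nats."""
    return 0.5 * (mu ** 2 + math.exp(log_var) - 1.0 - log_var)


def collapsed_dimensions(mus: Sequence[Sequence[float]],
                         log_vars: Sequence[Sequence[float]],
                         threshold: float = 0.01) -> List[int]:
    """Indices of latent dimensions whose batch-averaged KL falls below `threshold`."""
    batch, num_dims = len(mus), len(mus[0])
    collapsed = []
    for d in range(num_dims):
        avg_kl = sum(kl_to_standard_normal(mus[b][d], log_vars[b][d]) for b in range(batch)) / batch
        if avg_kl < threshold:
            collapsed.append(d)
    return collapsed


# Hypothetical encoder outputs for a batch of 2 inputs and 3 latent dimensions.
mus = [[1.2, 0.0, -0.8], [-0.9, 0.0, 0.7]]
log_vars = [[-2.0, 0.0, -1.5], [-2.1, 0.0, -1.4]]
print(collapsed_dimensions(mus, log_vars))  # prints [1]: dimension 1 matches the prior exactly
```

A dimension whose posterior equals the prior for every input cannot help the decoder distinguish inputs, which is why a rising count of such dimensions signals posterior collapse.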

Model collapse is when an AI model's performance degrades over time, especially from training on synthetic or AI-generated data, leading to less diverse and less creative outputs.

Model collapse is mainly caused by over-reliance on synthetic data, data pollution, training biases, feedback loops, and reward hacking, resulting in models that forget real-world data diversity.

Consequences include limited creativity, stagnation of AI development, perpetuation of biases, and missed opportunities for tackling complex, real-world problems.

Prevention involves ensuring access to high-quality human-generated data, minimizing synthetic data in training, and addressing biases and feedback loops in model development.
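The advice to limit synthetic data can be illustrated with the toy Gaussian experiment often used to explain collapse: refit a Gaussian every generation, once on purely synthetic samples and once on a training set that is half fresh real data. This is a sketch under stated assumptions: the real distribution is N(0, 1), and the 50% real fraction, sample size, generation count, and seed are arbitrary choices, not recommendations.

```python
import math
import random


def fit_gaussian(data):
    """Maximum-likelihood mean and standard deviation of a list of floats."""
    mu = math.fsum(data) / len(data)
    var = math.fsum((x - mu) ** 2 for x in data) / len(data)
    return mu, math.sqrt(var)


def final_spread(real_fraction, sample_size=100, generations=2000, seed=0):
    """Refit a Gaussian each generation on a mix of real and model-generated samples.

    Returns the fitted standard deviation after the last generation.
    """
    rng = random.Random(seed)
    mu, sigma = 0.0, 1.0  # generation 0 matches the real distribution
    n_real = round(real_fraction * sample_size)
    for _ in range(generations):
        real = [rng.gauss(0.0, 1.0) for _ in range(n_real)]  # fresh human data
        synthetic = [rng.gauss(mu, sigma) for _ in range(sample_size - n_real)]  # model output
        mu, sigma = fit_gaussian(real + synthetic)
    return sigma


synthetic_only = final_spread(real_fraction=0.0)
half_real = final_spread(real_fraction=0.5)
print(f"synthetic only: {synthetic_only:.3g}, half real: {half_real:.3g}")
```

With no real data, the fitted spread shrinks toward zero over many generations; with a steady supply of real data, it stays close to the true value of 1, because each round re-anchors the model to the real distribution.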
