Model Drift


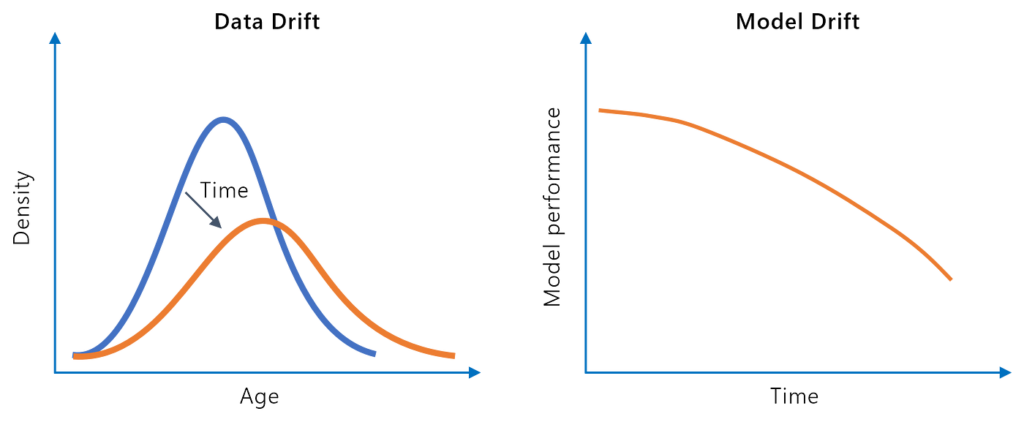

Model drift is the degradation of a machine learning model’s accuracy as real-world conditions change, highlighting the need for ongoing monitoring and adaptation.

Model drift, or model decay, occurs when a model’s predictive performance deteriorates due to changes in the real-world environment. This necessitates continuous monitoring and adaptation to maintain accuracy in AI and machine learning applications.

Model drift, often called model decay, is the deterioration of a machine learning model’s predictive performance over time. The decline is triggered mainly by shifts in the real-world environment that alter the relationship between input data and target variables. As the assumptions under which the model was trained become obsolete, its predictions become less accurate. Because drift directly affects how far a model’s predictions can be trusted, it matters across artificial intelligence, data science, and machine learning.

Model drift is a significant challenge for data-driven decision-making because deployed models do not operate in a static environment: they encounter dynamic, evolving data streams. Sustained accuracy and relevance therefore depend on continuous monitoring and adaptation. Without monitoring, a model may produce erroneous outputs that lead to flawed decisions.

Model drift manifests in several forms, each affecting model performance in a distinct way. The main types are concept drift, data drift, upstream data changes, feature drift, and prediction drift. Understanding them is essential for managing and mitigating drift.

Model drift can arise from a variety of factors, including:

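Detecting drift early is crucial for maintaining the performance of machine learning models. Common approaches include continuous evaluation of model performance and statistical tests such as the Population Stability Index (PSI), the Kolmogorov-Smirnov test, and Z-score analysis.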
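Continuous evaluation of model performance can be sketched as a sliding-window accuracy check. The example below is a minimal illustration, not a production monitor: the class name `AccuracyMonitor`, the window size, and the tolerance are all hypothetical choices, and it assumes ground-truth labels eventually arrive for each prediction.

```python
from collections import deque


class AccuracyMonitor:
    """Tracks accuracy over a sliding window and flags a drop below a baseline.

    Hypothetical helper for illustration; names and defaults are assumptions.
    """

    def __init__(self, baseline_accuracy, window_size=100, tolerance=0.05):
        self.baseline = baseline_accuracy
        self.tolerance = tolerance
        self.window = deque(maxlen=window_size)

    def update(self, prediction, actual):
        # Store 1 for a correct prediction, 0 for an incorrect one.
        self.window.append(1 if prediction == actual else 0)

    def accuracy(self):
        if not self.window:
            return None
        return sum(self.window) / len(self.window)

    def drift_suspected(self):
        # Only judge once the window is full, to avoid noisy early alarms.
        if len(self.window) < self.window.maxlen:
            return False
        return self.accuracy() < self.baseline - self.tolerance


monitor = AccuracyMonitor(baseline_accuracy=0.90, window_size=50, tolerance=0.05)

# Phase 1: the model is right 9 times out of 10, matching the baseline.
for i in range(50):
    monitor.update(prediction=1, actual=0 if i % 10 == 0 else 1)
stable_flag = monitor.drift_suspected()

# Phase 2: the environment changes and the model is right 6 times out of 10.
for i in range(50):
    monitor.update(prediction=1, actual=0 if i % 10 < 4 else 1)
drifted_flag = monitor.drift_suspected()

print(stable_flag, drifted_flag)
```

A fixed tolerance is the simplest possible rule. In practice the threshold would usually be tuned to how noisy the metric is and how costly a false alarm is.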

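Once model drift is detected, it can be addressed by retraining the model with new data, implementing online learning, updating features through feature engineering, or replacing the model if necessary.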

Model drift is relevant in a variety of domains:

Managing model drift is critical for ensuring the long-term success and reliability of machine learning applications. By actively monitoring and addressing drift, organizations can maintain model accuracy, reduce the risk of incorrect predictions, and enhance decision-making processes. This proactive approach supports sustained adoption and trust in AI and machine learning technologies across various sectors. Effective drift management requires a combination of robust monitoring systems, adaptive learning techniques, and a culture of continuous improvement in model development and deployment.

Model drift is closely tied to concept drift, a phenomenon in which the statistical properties of the target variable that the model is trying to predict change over time. When that happens, the model no longer reflects the underlying data distribution and its predictive performance declines. Understanding and managing drift is crucial in many applications, particularly those involving data streams and real-time predictions.
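A formalization commonly used in the data-stream literature, added here as an illustration rather than taken from this article, makes the definition more concrete. The symbols are assumptions: $X$ denotes the input features, $y$ the target, and $P_t$ the data distribution at time $t$.

$$
P_t(X, y) = P_t(y \mid X)\, P_t(X), \qquad \text{drift between } t_0 \text{ and } t_1 \iff P_{t_0}(X, y) \neq P_{t_1}(X, y)
$$

Under this reading, a change in $P_t(y \mid X)$ is usually called real concept drift, because the relationship the model learned has itself changed. A change in $P_t(X)$ alone, with $P_t(y \mid X)$ unchanged, is usually called virtual drift or data drift.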

Key Research Papers:

A comprehensive analysis of concept drift locality in data streams

Published: 2023-12-09

Authors: Gabriel J. Aguiar, Alberto Cano

This paper addresses the challenges of adapting to drifting data streams in online learning. It highlights the importance of detecting concept drift for effective model adaptation. The authors present a new categorization of concept drift based on its locality and scale, and propose a systematic approach that results in 2,760 benchmark problems. The paper conducts a comparative assessment of nine state-of-the-art drift detectors, examining their strengths and weaknesses. The study also explores how drift locality affects classifier performance and suggests strategies to minimize recovery time. The benchmark data streams and experiments are publicly available.

Tackling Virtual and Real Concept Drifts: An Adaptive Gaussian Mixture Model

Published: 2021-02-11

Authors: Gustavo Oliveira, Leandro Minku, Adriano Oliveira

This work delves into handling data changes due to concept drift, particularly distinguishing between virtual and real drifts. The authors propose an On-line Gaussian Mixture Model with a Noise Filter for managing both types of drift. Their approach, OGMMF-VRD, demonstrates superior performance in terms of accuracy and runtime when tested on seven synthetic and three real-world datasets. The paper provides an in-depth analysis of the impact of both drifts on classifiers, offering valuable insights for better model adaptation.

Model Based Explanations of Concept Drift

Published: 2023-03-16

Authors: Fabian Hinder, Valerie Vaquet, Johannes Brinkrolf, Barbara Hammer

This paper explores the concept of explaining drift by characterizing the change in data distribution in a human-understandable manner. The authors introduce a novel technology that uses various explanation techniques to describe concept drift through the characteristic change of spatial features. This approach not only aids in understanding how and where drift occurs but also enhances the acceptance of life-long learning models. The methodology proposed reduces the explanation of concept drift to the explanation of suitably trained models.

Model drift, also known as model decay, is the phenomenon where a machine learning model’s predictive performance deteriorates over time because of changes in the environment, input data, or target variables.

The main types are concept drift (changes in the statistical properties of the target variable), data drift (changes in input data distribution), upstream data changes (alterations in data pipelines or formats), feature drift (changes in feature distributions), and prediction drift (changes in prediction distributions).

Model drift can be detected through continuous evaluation of model performance, using statistical tests like Population Stability Index (PSI), Kolmogorov-Smirnov test, and Z-score analysis to monitor changes in data or prediction distributions.
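Two of the statistics named above can be sketched in plain Python. This is a minimal illustration under stated assumptions, not a reference implementation: the function names, the equal-width binning, the `eps` smoothing constant, and the synthetic Gaussian data are all choices made for the example. The KS function returns only the test statistic, not a p-value.

```python
import math
import random


def population_stability_index(reference, current, bins=10, eps=1e-6):
    """PSI between two numeric samples, using equal-width bins over the reference range."""
    lo, hi = min(reference), max(reference)
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 if the reference is constant

    def proportions(sample):
        counts = [0] * bins
        for x in sample:
            # Clamp values outside the reference range into the edge bins.
            idx = min(max(int((x - lo) / width), 0), bins - 1)
            counts[idx] += 1
        # eps keeps empty bins from producing log(0) or division by zero.
        return [max(c / len(sample), eps) for c in counts]

    ref_p = proportions(reference)
    cur_p = proportions(current)
    return sum((c - r) * math.log(c / r) for r, c in zip(ref_p, cur_p))


def ks_statistic(reference, current):
    """Two-sample Kolmogorov-Smirnov statistic: largest gap between empirical CDFs."""
    ref, cur = sorted(reference), sorted(current)
    i = j = 0
    d = 0.0
    while i < len(ref) and j < len(cur):
        x = min(ref[i], cur[j])
        # Advance both samples past x so ties are handled together.
        while i < len(ref) and ref[i] <= x:
            i += 1
        while j < len(cur) and cur[j] <= x:
            j += 1
        d = max(d, abs(i / len(ref) - j / len(cur)))
    return d


# Synthetic data (an assumption for the demo): same distribution vs. a shifted mean.
rng = random.Random(0)
reference = [rng.gauss(0.0, 1.0) for _ in range(2000)]
same = [rng.gauss(0.0, 1.0) for _ in range(2000)]
shifted = [rng.gauss(1.0, 1.0) for _ in range(2000)]

psi_same = population_stability_index(reference, same)
psi_shifted = population_stability_index(reference, shifted)
ks_same = ks_statistic(reference, same)
ks_shifted = ks_statistic(reference, shifted)

print(f"PSI same={psi_same:.3f} shifted={psi_shifted:.3f}")
print(f"KS  same={ks_same:.3f} shifted={ks_shifted:.3f}")
```

A frequently cited rule of thumb treats a PSI below 0.1 as stable and above 0.25 as a significant shift. Those cutoffs are conventions rather than guarantees, so they are worth validating against the feature in question.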

Strategies include retraining the model with new data, implementing online learning, updating features through feature engineering, or replacing the model if necessary to maintain accuracy.
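Of these strategies, online learning is the easiest to show in a few lines. The sketch below is a toy under loud assumptions: `OnlineLinearModel` is a hypothetical one-feature linear model trained by stochastic gradient descent, and the data is noise-free and synthetic. It illustrates only how per-example updates let a model follow a changed relationship without a full retrain.

```python
import random


class OnlineLinearModel:
    """One-feature linear model updated one example at a time with SGD.

    Hypothetical class for illustration; not tied to any library.
    """

    def __init__(self, learning_rate=0.05):
        self.w = 0.0
        self.b = 0.0
        self.lr = learning_rate

    def predict(self, x):
        return self.w * x + self.b

    def learn_one(self, x, y):
        # Gradient step on squared error for a single (x, y) pair.
        error = self.predict(x) - y
        self.w -= self.lr * error * x
        self.b -= self.lr * error


rng = random.Random(42)
model = OnlineLinearModel()

# Before drift: the target follows y = 2x.
for _ in range(2000):
    x = rng.uniform(-1, 1)
    model.learn_one(x, 2.0 * x)
w_before = model.w

# After drift: the relationship changes to y = -x + 0.5.
for _ in range(2000):
    x = rng.uniform(-1, 1)
    model.learn_one(x, -1.0 * x + 0.5)
w_after, b_after = model.w, model.b

print(f"w before drift: {w_before:.2f}")
print(f"w, b after drift: {w_after:.2f}, {b_after:.2f}")
```

The trade-off is that a model which adapts quickly to real drift also adapts quickly to noise or bad data, which is why online updates are often paired with the monitoring described earlier.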

Managing model drift ensures sustained accuracy and reliability of AI and machine learning applications, supports better decision-making, and maintains user trust in automated systems.
