Retrieval Augmented Generation (RAG)

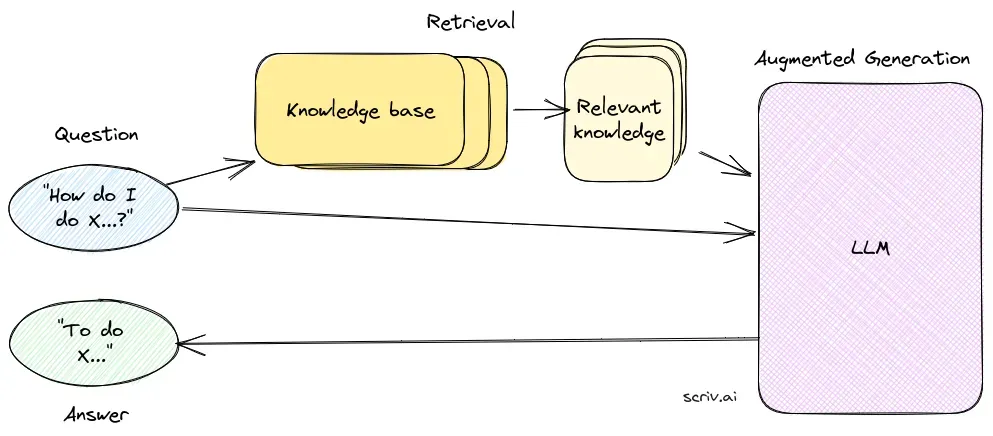

Question Answering with Retrieval-Augmented Generation (RAG) enhances language models by retrieving relevant external data at query time. It uses that data to generate accurate, contextually relevant answers. It is especially useful in fast-changing fields, where it improves accuracy, keeps content current, and makes responses more relevant.

Question Answering with Retrieval-Augmented Generation (RAG) is a method that combines the strengths of information retrieval and natural language generation. This hybrid approach enhances large language models (LLMs) by supplementing their responses with relevant, up-to-date information retrieved from external data sources. Traditional methods rely solely on what a pre-trained model learned during training. RAG instead integrates external data dynamically, so systems can give more accurate and contextually relevant answers. This matters most in domains that require the latest information or specialized knowledge.

RAG improves the performance of LLMs by grounding answers in two places: what the model learned during training, and real-time, authoritative sources retrieved at query time. This is crucial for question answering in dynamic fields where information is constantly evolving.

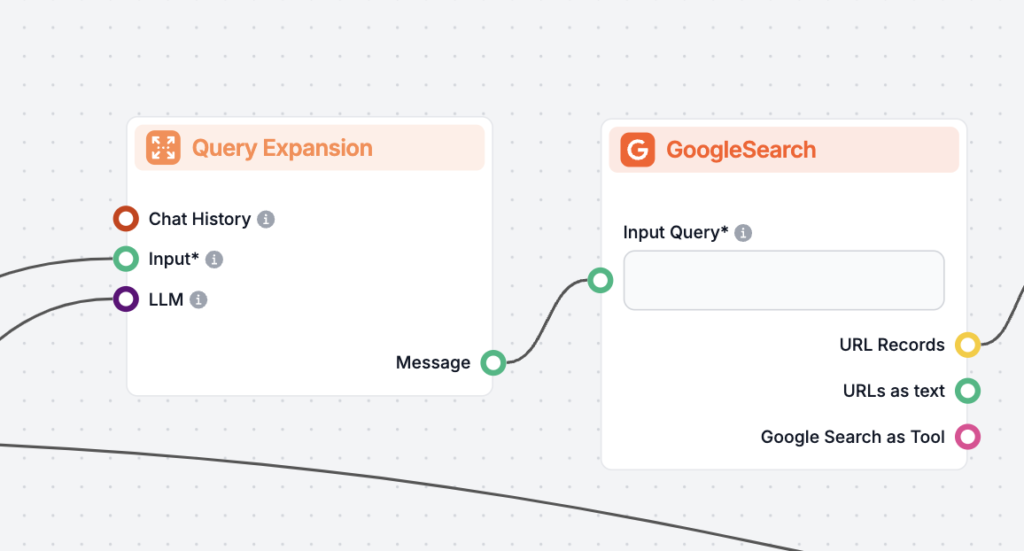

The retrieval component is responsible for sourcing relevant information from vast datasets, typically stored in a vector database. This component employs semantic search techniques to identify and extract text segments or documents that are highly relevant to the user’s query.
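
The retrieval step can be sketched as follows. This is a minimal illustration, not any particular vector database's API: the `embed` function is a hypothetical stand-in that counts words instead of calling a learned embedding model. `InMemoryVectorStore` is likewise a hypothetical class that plays the role of the vector database. It ranks stored texts by cosine similarity to the query and returns the top `k`.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Hypothetical stand-in for an embedding model: a bag-of-words count vector.

    Real semantic search uses learned dense embeddings, so paraphrases with no
    shared words can still match; this toy version only matches shared words.
    """
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(count * b[token] for token, count in a.items())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


class InMemoryVectorStore:
    """Hypothetical stand-in for a vector database: a list of (text, vector) pairs."""

    def __init__(self) -> None:
        self.items: list[tuple[str, Counter]] = []

    def add(self, text: str) -> None:
        # Index time: embed each document once and store it with its text.
        self.items.append((text, embed(text)))

    def search(self, query: str, k: int = 2) -> list[tuple[str, float]]:
        # Query time: embed the query and rank stored texts by similarity.
        q = embed(query)
        scored = [(text, cosine(q, vec)) for text, vec in self.items]
        scored.sort(key=lambda pair: pair[1], reverse=True)
        return scored[:k]


store = InMemoryVectorStore()
for doc in [
    "The Eiffel Tower is in Paris.",
    "Python is a programming language.",
    "Paris is the capital of France.",
]:
    store.add(doc)

results = store.search("Where is the Eiffel Tower?", k=2)
print(results[0][0])  # → The Eiffel Tower is in Paris.
```

The linear scan is fine for a toy corpus. Vector databases typically use approximate nearest-neighbor indexes so that search stays fast over millions of embeddings.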

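The generation component, usually a generative LLM such as GPT-3 or BART, synthesizes an answer by combining the user's original query with the retrieved context. Encoder-only models such as BERT do not generate free text; in RAG they are more commonly used on the retrieval side to embed queries and documents. The generation component is crucial for producing coherent, contextually appropriate responses.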
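
Combining the query with the retrieved context usually means building a single prompt for the generator. The sketch below assumes a simple template: the wording of the instructions and the numbered-passage format are illustrative choices, not a standard. The resulting string would then be sent to whichever generative model the system uses. No specific LLM API is assumed here.

```python
def build_prompt(question: str, passages: list[str]) -> str:
    """Combine the user's question with retrieved passages into one prompt.

    The template text is an illustrative assumption; real systems tune it.
    """
    # Number each passage so the answer can cite which source it relied on.
    context = "\n".join(f"[{i}] {p}" for i, p in enumerate(passages, start=1))
    return (
        "Answer the question using only the context below. "
        "If the context does not contain the answer, say you don't know.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}\nAnswer:"
    )


prompt = build_prompt(
    "Where is the Eiffel Tower?",
    ["The Eiffel Tower is in Paris.", "Paris is the capital of France."],
)
print(prompt)
```

Telling the model to rely only on the supplied context, and to admit when the context is insufficient, is a common way to reduce answers drawn from outdated training data.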

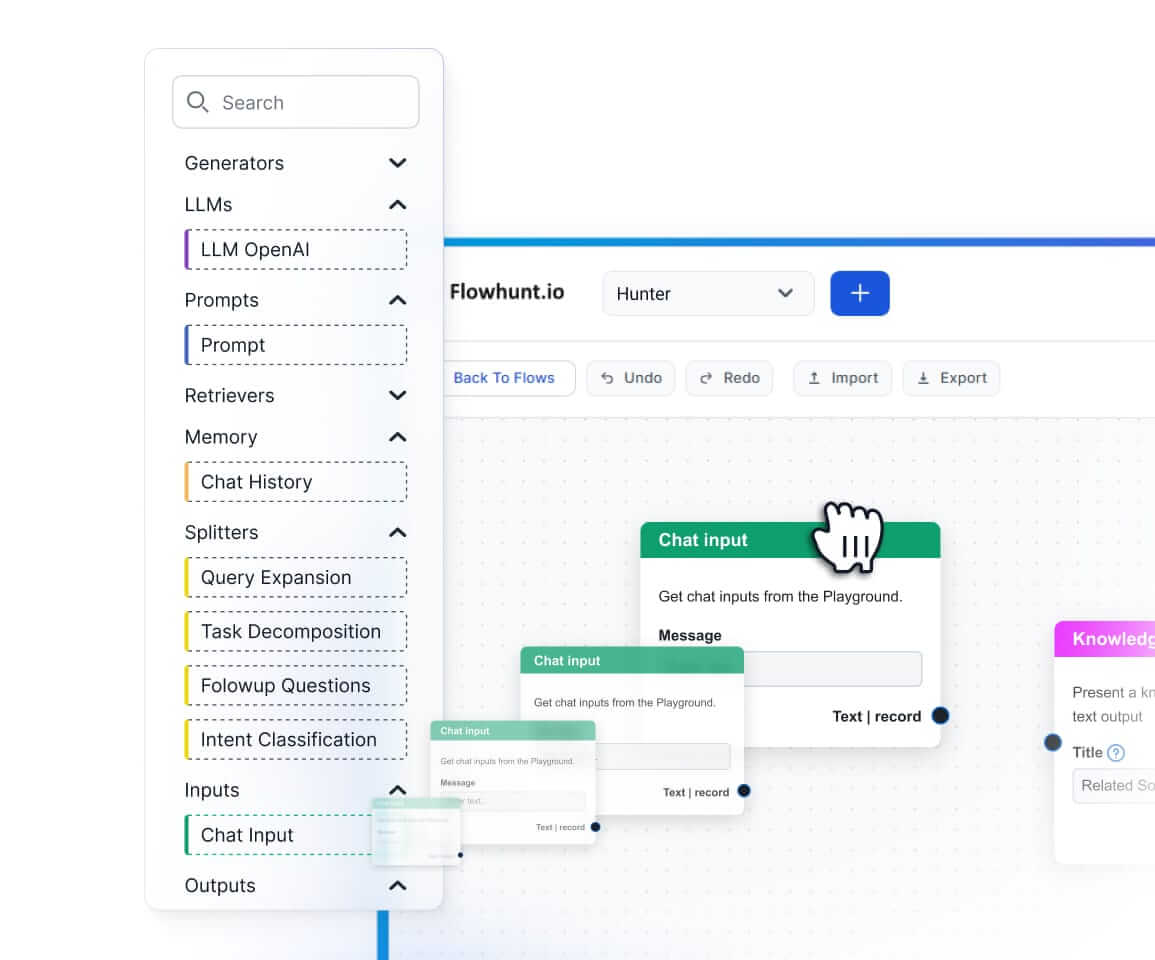

Implementing a RAG system involves several technical steps:
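
Many RAG pipelines begin by splitting source documents into overlapping chunks, which are then embedded and indexed for retrieval. The chunk size and overlap below are assumptions chosen for illustration, not values from this article. This minimal sketch splits on whitespace-separated words; production systems often split by model tokens or sentences instead.

```python
def chunk_words(text: str, chunk_size: int = 50, overlap: int = 10) -> list[str]:
    """Split text into windows of chunk_size words.

    Consecutive chunks share `overlap` words, so context that spans a chunk
    boundary is less likely to be lost.
    """
    if chunk_size <= 0 or not 0 <= overlap < chunk_size:
        raise ValueError("require chunk_size > 0 and 0 <= overlap < chunk_size")
    words = text.split()
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break  # this window already reaches the end of the text
    return chunks


text = " ".join(f"w{i}" for i in range(10))
chunks = chunk_words(text, chunk_size=4, overlap=1)
print(chunks)  # → ['w0 w1 w2 w3', 'w3 w4 w5 w6', 'w6 w7 w8 w9']
```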

Research on Question Answering with Retrieval-Augmented Generation (RAG)

Retrieval-Augmented Generation (RAG) is a method that enhances question-answering systems by combining retrieval mechanisms with generative models. Recent research has explored the efficacy and optimization of RAG in various contexts.

RAG is a method that combines information retrieval and natural language generation to provide accurate, up-to-date answers. It does so by supplying large language models with information retrieved from external data sources.

A RAG system consists of a retrieval component, which sources relevant information from vector databases using semantic search, and a generation component, typically an LLM, that synthesizes answers using both the user query and retrieved context.

RAG improves accuracy by retrieving contextually relevant information, supports dynamic content updates from external knowledge bases, and enhances the relevance and quality of generated responses.

Common use cases include AI chatbots, customer support, automated content creation, and educational tools that require accurate, context-aware, and up-to-date responses.

RAG systems can be resource-intensive, require careful integration for optimal performance, and must ensure factual accuracy in the retrieved information to avoid misleading or outdated answers.
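
One simple, partial safeguard against outdated or weakly related context is to filter retrieved passages before they reach the generator. This is a hypothetical policy, not a method from this article. The `Passage` fields, the `updated` metadata, and the thresholds are assumptions for illustration. The sketch keeps passages that are both relevant enough and recent enough, and declines to answer when nothing passes.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class Passage:
    text: str
    score: float   # retriever similarity; higher means more relevant
    updated: date  # hypothetical "last updated" metadata field


def filter_passages(
    passages: list[Passage],
    min_score: float = 0.3,
    not_before: date = date.min,
) -> list[Passage]:
    """Keep passages that meet both the relevance and the recency threshold."""
    return [p for p in passages if p.score >= min_score and p.updated >= not_before]


candidates = [
    Passage("Policy v2: refunds within 30 days.", 0.82, date(2024, 3, 1)),
    Passage("Policy v1: refunds within 14 days.", 0.80, date(2019, 6, 1)),
    Passage("Office hours are 9 to 5.", 0.12, date(2024, 1, 10)),
]
kept = filter_passages(candidates, min_score=0.3, not_before=date(2023, 1, 1))

# If nothing survives the filter, decline instead of answering from weak context.
reply_context = [p.text for p in kept] if kept else None
print(reply_context)  # → ['Policy v2: refunds within 30 days.']
```

Filtering only removes stale or irrelevant passages. It cannot verify that a recent, relevant passage is factually correct, so the quality of the underlying knowledge base still matters.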
