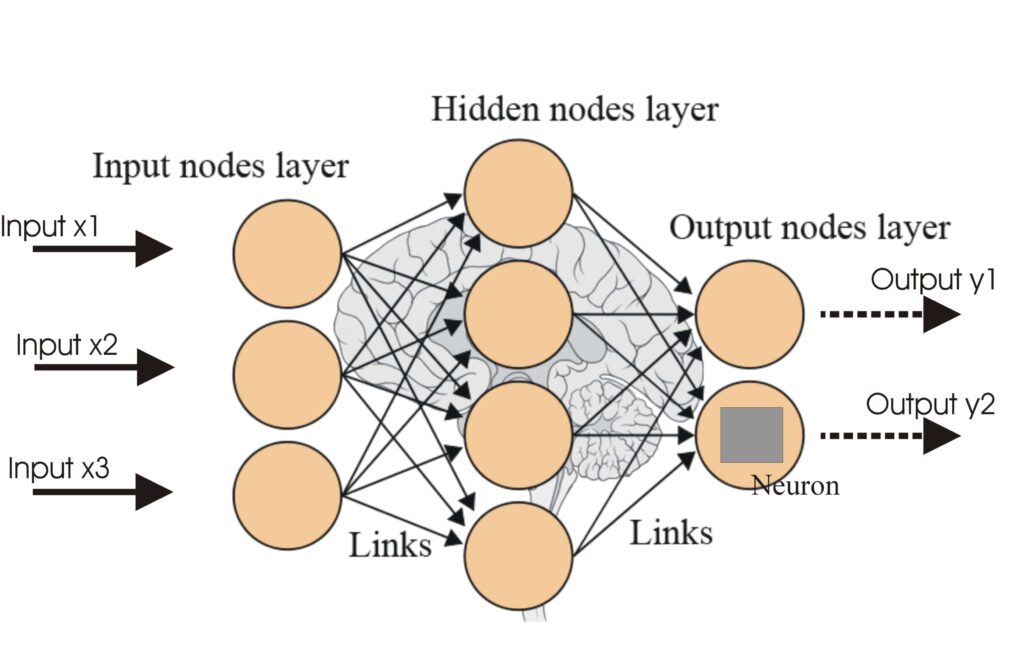

Neural Networks

A neural network, or artificial neural network (ANN), is a computational model inspired by the human brain, essential in AI and machine learning for tasks like ...

RNNs are neural networks designed for sequential data, using memory to process inputs and capture temporal dependencies, ideal for NLP, speech recognition, and forecasting.

Recurrent Neural Networks (RNNs) are a sophisticated class of artificial neural networks designed for processing sequential data. Unlike traditional feedforward neural networks that process inputs in a single pass, RNNs have a built-in memory mechanism that allows them to maintain information about previous inputs, making them particularly well-suited for tasks where the order of data is crucial, such as language modeling, speech recognition, and time-series forecasting.

RNN stands for Recurrent Neural Network. This type of neural network is characterized by its ability to process sequences of data by maintaining a hidden state that gets updated at each time step based on the current input and the previous hidden state.
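One common way to write this update is the "vanilla" (Elman) RNN shown below. Here $x_t$ is the input at step $t$, $h_t$ the hidden state, and $y_t$ the output. The weight matrices $W_{xh}$, $W_{hh}$, $W_{hy}$ and biases $b_h$, $b_y$ are shared across all time steps. The tanh activation and linear output layer are typical choices, not the only ones.

$$h_t = \tanh\left(W_{xh} x_t + W_{hh} h_{t-1} + b_h\right)$$

$$y_t = W_{hy} h_t + b_y$$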

A Recurrent Neural Network (RNN) is a type of artificial neural network where connections between nodes form a directed graph along a temporal sequence. This allows it to exhibit dynamic temporal behavior. Unlike feedforward neural networks, RNNs can use their internal state (memory) to process sequences of inputs, making them suitable for tasks like handwriting recognition, speech recognition, and natural language processing.

The core idea behind RNNs is their ability to remember past information and use it to influence the current output. This is achieved through the use of a hidden state, which is updated at every time step. The hidden state acts as a form of memory that retains information about previous inputs. This feedback loop enables RNNs to capture dependencies in sequential data.
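The following is a minimal sketch of this hidden-state update in plain Python. It assumes the common "vanilla" (Elman) form with a tanh activation. The function names `rnn_step` and `rnn_forward` and the toy weights are hypothetical, chosen only to show how the previous hidden state feeds into the next step.

```python
import math

def rnn_step(x, h_prev, W_xh, W_hh, b_h):
    """One recurrent step: h_t = tanh(W_xh x_t + W_hh h_{t-1} + b_h)."""
    h_new = []
    for i in range(len(b_h)):
        pre = b_h[i]
        pre += sum(W_xh[i][j] * x[j] for j in range(len(x)))            # current input
        pre += sum(W_hh[i][k] * h_prev[k] for k in range(len(h_prev)))  # memory of the past
        h_new.append(math.tanh(pre))
    return h_new

def rnn_forward(xs, W_xh, W_hh, b_h):
    """Run the same cell over a whole sequence and return every hidden state."""
    h = [0.0] * len(b_h)  # initial memory is all zeros
    states = []
    for x in xs:
        h = rnn_step(x, h, W_xh, W_hh, b_h)  # identical weights at every step
        states.append(h)
    return states

# Hypothetical toy weights: 1-dimensional inputs, 2-dimensional hidden state.
W_xh = [[0.5], [-0.3]]
W_hh = [[0.1, 0.2], [0.0, 0.4]]
b_h = [0.0, 0.0]
states = rnn_forward([[1.0], [0.0], [0.0]], W_xh, W_hh, b_h)
```

Only the first input is non-zero. The second and third hidden states are still non-zero, because the recurrent weights `W_hh` carry information about that first input forward in time.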

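The fundamental building block of an RNN is the recurrent unit. It combines the input at the current time step with the hidden state from the previous time step to produce an updated hidden state and, optionally, an output. The same weights are reused at every time step.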

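RNNs come in various architectures depending on the number of inputs and outputs. Common examples are one-to-many (such as image captioning), many-to-one (such as classifying the sentiment of a sentence), and many-to-many (such as tagging each word in a sentence or machine translation).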
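These input/output arrangements can all be built from the same recurrence. Only the way inputs are fed in and which outputs are kept changes. The sketch below uses a hypothetical scalar RNN with arbitrary default weights to illustrate this.

```python
import math

def run_rnn(xs, w_x=0.8, w_h=0.5, w_y=2.0):
    """Scalar RNN: h_t = tanh(w_x*x_t + w_h*h_{t-1}), y_t = w_y*h_t.

    The default weights are arbitrary illustrative values.
    """
    h = 0.0
    ys = []
    for x in xs:
        h = math.tanh(w_x * x + w_h * h)
        ys.append(w_y * h)
    return ys

def many_to_many(xs):
    """One output per input step, e.g. tagging every word."""
    return run_rnn(xs)

def many_to_one(xs):
    """Only the output after the last step, e.g. classifying a whole sentence."""
    return run_rnn(xs)[-1]

def one_to_many(x0, steps):
    """A single input followed by zeros, producing `steps` outputs.

    Simplification: many real models feed each output back in as the next input.
    """
    return run_rnn([x0] + [0.0] * (steps - 1))

seq = [1.0, -0.5, 0.25]
per_step = many_to_many(seq)     # three outputs
summary = many_to_one(seq)       # one output
generated = one_to_many(1.0, 4)  # four outputs from one input
```

This sketch covers only the synchronized many-to-many case. Tasks such as translation, where input and output lengths differ, typically use an encoder-decoder pair of RNNs instead.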

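RNNs are incredibly versatile and are used in a wide range of applications, including language modeling, speech recognition, handwriting recognition, and time-series forecasting.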

Feedforward neural networks process inputs in a single pass and are typically used for tasks where the order of data is not important, such as image classification. In contrast, RNNs process sequences of inputs, allowing them to capture temporal dependencies and retain information across multiple time steps.
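The contrast can be made concrete by comparing two models on the same values in different orders. The baseline here is deliberately order-insensitive: a single layer applied to the sum of the inputs. This is a simplification chosen for the comparison, not a general feedforward network. The RNN is a hypothetical scalar model with arbitrary weights.

```python
import math

def feedforward_sum(xs, w=0.8):
    """Order-insensitive baseline: pool (sum) the inputs, then apply one layer."""
    return math.tanh(w * sum(xs))

def rnn_final_state(xs, w_x=0.8, w_h=0.5):
    """Scalar RNN whose final hidden state depends on the order of the inputs."""
    h = 0.0
    for x in xs:
        h = math.tanh(w_x * x + w_h * h)  # each step sees the previous state
    return h

a = [1.0, 0.0, -1.0]
b = [-1.0, 0.0, 1.0]  # same values, reversed order

same_ff = feedforward_sum(a) == feedforward_sum(b)  # True: order is ignored
rnn_a, rnn_b = rnn_final_state(a), rnn_final_state(b)  # differ: order matters
```

The pooled baseline gives identical results for both sequences. The RNN's final state has opposite signs, because the most recent input weighs most heavily in its memory.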

To address some of the limitations of traditional RNNs, advanced architectures like Long Short-Term Memory (LSTM) and Gated Recurrent Unit (GRU) have been developed. These architectures have mechanisms to better capture long-term dependencies and mitigate the vanishing gradient problem.
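The following is a minimal scalar sketch of a GRU step, using one common formulation. Sources and libraries differ on whether the update gate `z` or `1 - z` multiplies the old state. The parameter names in `params` are hypothetical. The example shows the gating idea: with the update gate biased shut, the state is carried across many steps almost unchanged. A plain tanh RNN's state decays instead.

```python
import math

def sigmoid(v):
    return 1.0 / (1.0 + math.exp(-v))

def gru_step(x, h, p):
    """One scalar GRU step; p maps hypothetical parameter names to weights."""
    z = sigmoid(p["w_z"] * x + p["u_z"] * h + p["b_z"])  # update gate
    r = sigmoid(p["w_r"] * x + p["u_r"] * h + p["b_r"])  # reset gate
    h_cand = math.tanh(p["w_h"] * x + p["u_h"] * (r * h) + p["b_h"])  # candidate state
    return (1.0 - z) * h + z * h_cand  # z near 0 keeps the old state

# Update gate biased shut (sigmoid(-6) is about 0.0025), so memory is mostly kept.
params = {"w_z": 0.0, "u_z": 0.0, "b_z": -6.0,
          "w_r": 0.0, "u_r": 0.0, "b_r": 0.0,
          "w_h": 1.0, "u_h": 1.0, "b_h": 0.0}

gru_h = 0.9
rnn_h = 0.9
for _ in range(20):  # 20 steps of zero input
    gru_h = gru_step(0.0, gru_h, params)
    rnn_h = math.tanh(1.0 * rnn_h)  # plain tanh RNN with recurrent weight 1
```

After 20 steps the GRU state stays close to its starting value, while the plain RNN state shrinks substantially. Because `(1 - z)` is near 1, the step-to-step derivative of the GRU state is also near 1. This gives gradients a nearly direct path back through time.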

A Recurrent Neural Network (RNN) is a type of artificial neural network designed for processing sequential data. Unlike feedforward neural networks, RNNs use memory of previous inputs to inform current outputs, making them ideal for tasks like language modeling, speech recognition, and time-series forecasting.

Feedforward neural networks process inputs in a single pass without memory, while RNNs process sequences of inputs and retain information across time steps, allowing them to capture temporal dependencies.

RNNs are used in natural language processing (NLP), speech recognition, time-series forecasting, handwriting recognition, chatbots, predictive text, and financial market analysis.

RNNs can struggle with the vanishing gradient problem, which makes it difficult to learn long-term dependencies. Because each time step depends on the previous one, they are also harder to parallelize and more computationally intensive to train than feedforward networks.
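A small numeric sketch shows why gradients vanish. It uses a hypothetical scalar RNN h_t = tanh(w*h_{t-1} + x_t). By the chain rule, the gradient of the final state with respect to the initial one is a product of one factor per step, w * (1 - h_t**2). When every factor is below 1, the product shrinks geometrically with sequence length. The weight 0.9 and the constant input 0.5 are arbitrary choices.

```python
import math

def grad_through_time(w, xs, h0=0.0):
    """Return d h_T / d h_0 for the scalar RNN h_t = tanh(w*h_{t-1} + x_t).

    Uses d tanh(a)/da = 1 - tanh(a)**2, so each step contributes w * (1 - h_t**2).
    """
    h = h0
    grad = 1.0
    for x in xs:
        h = math.tanh(w * h + x)
        grad *= w * (1.0 - h * h)  # local derivative of this step
    return grad

xs = [0.5] * 50  # hypothetical constant input sequence
grads = {T: grad_through_time(0.9, xs[:T]) for T in (1, 10, 50)}
```

Each factor here is at most 0.9, so after 50 steps the gradient is below 0.9**50 (roughly 0.005). The earliest inputs therefore barely influence learning.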

Advanced architectures like Long Short-Term Memory (LSTM) and Gated Recurrent Unit (GRU) have been developed to address RNN limitations, particularly for learning long-term dependencies.
