Question Answering

Question Answering with Retrieval-Augmented Generation (RAG) combines information retrieval and natural language generation to enhance large language models (LL...

A retrieval pipeline enables chatbots to fetch and process relevant external knowledge for accurate, real-time, and context-aware responses using RAG, embeddings, and vector databases.

A retrieval pipeline for chatbots refers to the technical architecture and process that enables chatbots to fetch, process, and retrieve relevant information in response to user queries. Unlike simple question-answering systems that rely only on pre-trained language models, retrieval pipelines incorporate external knowledge bases or data sources. This allows the chatbot to provide accurate, contextually relevant, and updated responses even when the data is not inherent to the language model itself.

The retrieval pipeline typically consists of multiple components, including data ingestion, embedding creation, vector storage, context retrieval, and response generation. Its implementation often leverages Retrieval-Augmented Generation (RAG), which combines the strengths of data retrieval systems and Large Language Models (LLMs) for response generation.

A retrieval pipeline is used to enhance a chatbot’s capabilities by enabling it to:

Document Ingestion

Collecting and preprocessing raw data, which could include PDFs, text files, databases, or APIs. Tools like LangChain or LlamaIndex are often employed for seamless data ingestion.

Example: Loading customer service FAQs or product specifications into the system.

Document Preprocessing

Long documents are split into smaller, semantically meaningful chunks. This is essential for fitting the text into embedding models that usually have token limits (e.g., 512 tokens).

Example Code Snippet:

from langchain.text_splitter import RecursiveCharacterTextSplitter

text_splitter = RecursiveCharacterTextSplitter(chunk_size=500, chunk_overlap=50)

chunks = text_splitter.split_documents(document_list)

Embedding Generation

Text data is converted into high-dimensional vector representations using embedding models. These embeddings numerically encode the semantic meaning of the data.

Example Embedding Model: OpenAI’s text-embedding-ada-002 or Hugging Face’s e5-large-v2.

Vector Storage

Embeddings are stored in vector databases optimized for similarity searches. Tools like Milvus, Chroma, or PGVector are commonly used.

Example: Storing product descriptions and their embeddings for efficient retrieval.

Query Processing

When a user query is received, it is transformed into a query vector using the same embedding model. This enables semantic similarity matching with stored embeddings.

Example Code Snippet:

query_vector = embedding_model.encode("What are the specifications of Product X?")

retrieved_docs = vector_db.similarity_search(query_vector, k=5)

Data Retrieval

The system retrieves the most relevant chunks of data based on similarity scores (e.g., cosine similarity). Multi-modal retrieval systems may combine SQL databases, knowledge graphs, and vector searches for more robust results.

Response Generation

The retrieved data is combined with the user query and passed to a large language model (LLM) to generate a final, natural language response. This step is often referred to as augmented generation.

Example Prompt Template:

prompt_template = """

Context: {context}

Question: {question}

Please provide a detailed response using the context above.

"""

Post-Processing and Validation

Advanced retrieval pipelines include hallucination detection, relevancy checks, or response grading to ensure the output is factual and relevant.

Customer Support

Chatbots can retrieve product manuals, troubleshooting guides, or FAQs to provide instant responses to customer queries.

Example: A chatbot helping a customer reset a router by retrieving the relevant section of the user manual.

Enterprise Knowledge Management

Internal enterprise chatbots can access company-specific data like HR policies, IT support documentation, or compliance guidelines.

Example: Employees querying an internal chatbot for sick leave policies.

E-Commerce

Chatbots assist users by retrieving product details, reviews, or inventory availability.

Example: “What are the top features of Product Y?”

Healthcare

Chatbots retrieve medical literature, guidelines, or patient data to assist healthcare professionals or patients.

Example: A chatbot retrieving drug interaction warnings from a pharmaceutical database.

Education and Research

Academic chatbots use RAG pipelines to fetch scholarly articles, answer questions, or summarize research findings.

Example: “Can you summarize the findings of this 2023 study on climate change?”

Legal and Compliance

Chatbots retrieve legal documents, case laws, or compliance requirements to assist legal professionals.

Example: “What is the latest update on GDPR regulations?”

A chatbot built to answer questions from a company’s annual financial report in PDF format.

A chatbot combining SQL, vector search, and knowledge graphs to answer an employee’s question.

By leveraging retrieval pipelines, chatbots are no longer limited by the constraints of static training data, enabling them to deliver dynamic, precise, and context-rich interactions.

Retrieval pipelines play a pivotal role in modern chatbot systems, enabling intelligent and context-aware interactions.

“Lingke: A Fine-grained Multi-turn Chatbot for Customer Service” by Pengfei Zhu et al. (2018)

Introduces Lingke, a chatbot that integrates information retrieval to handle multi-turn conversations. It leverages fine-grained pipeline processing to distill responses from unstructured documents and employs attentive context-response matching for sequential interactions, significantly improving the chatbot’s ability to address complex user queries.

Read the paper here.

“FACTS About Building Retrieval Augmented Generation-based Chatbots” by Rama Akkiraju et al. (2024)

Explores the challenges and methodologies in developing enterprise-grade chatbots using Retrieval Augmented Generation (RAG) pipelines and Large Language Models (LLMs). The authors propose the FACTS framework, emphasizing Freshness, Architectures, Cost, Testing, and Security in RAG pipeline engineering. Their empirical findings highlight the trade-offs between accuracy and latency when scaling LLMs, offering valuable insights into building secure and high-performance chatbots. Read the paper here.

“From Questions to Insightful Answers: Building an Informed Chatbot for University Resources” by Subash Neupane et al. (2024)

Presents BARKPLUG V.2, a chatbot system designed for university settings. Utilizing RAG pipelines, the system provides accurate and domain-specific answers to users about campus resources, improving access to information. The study evaluates the chatbot’s effectiveness using frameworks like RAG Assessment (RAGAS) and showcases its usability in academic environments. Read the paper here.

A retrieval pipeline is a technical architecture allowing chatbots to fetch, process, and retrieve relevant information from external sources in response to user queries. It combines data ingestion, embedding, vector storage, and LLM response generation for dynamic, context-aware replies.

RAG combines the strengths of data retrieval systems and large language models (LLMs), enabling chatbots to ground responses in factual, up-to-date external data, thus reducing hallucinations and increasing accuracy.

Key components include document ingestion, preprocessing, embedding generation, vector storage, query processing, data retrieval, response generation, and post-processing validation.

Use cases include customer support, enterprise knowledge management, e-commerce product info, healthcare guidance, education and research, and legal compliance assistance.

Challenges include latency from real-time retrieval, operational costs, data privacy concerns, and scalability requirements for handling large data volumes.

Unlock the power of Retrieval-Augmented Generation (RAG) and external data integration to deliver intelligent, accurate chatbot responses. Try FlowHunt’s no-code platform today.

Question Answering with Retrieval-Augmented Generation (RAG) combines information retrieval and natural language generation to enhance large language models (LL...

Discover the key differences between Retrieval-Augmented Generation (RAG) and Cache-Augmented Generation (CAG) in AI. Learn how RAG dynamically retrieves real-t...

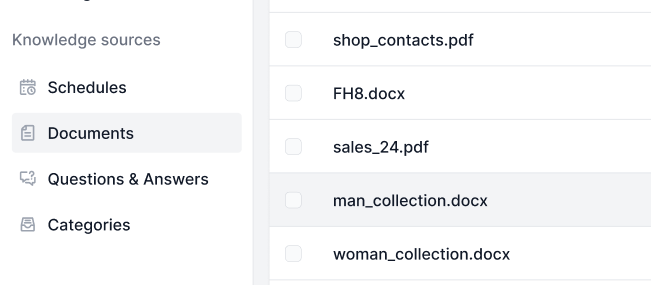

Knowledge Sources make teaching the AI according to your needs a breeze. Discover all the ways of linking knowledge with FlowHunt. Easily connect websites, docu...