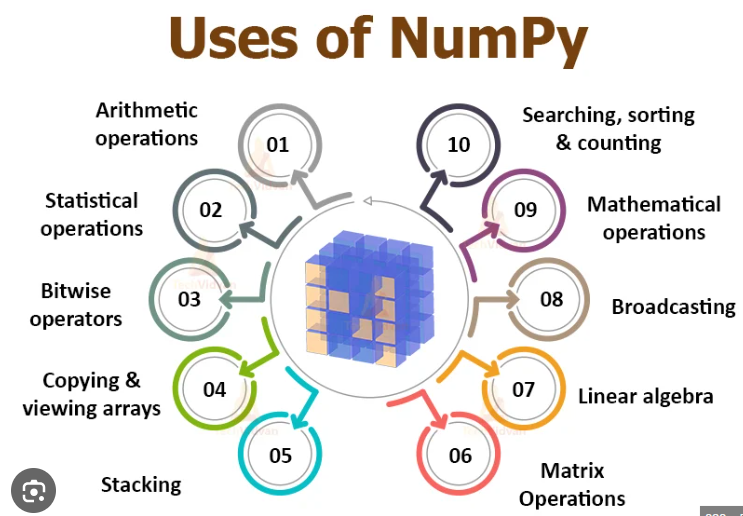

NumPy

NumPy is an open-source Python library crucial for numerical computing, providing efficient array operations and mathematical functions. It underpins scientific...

Scikit-learn is a free, open-source Python library offering simple and efficient tools for data mining and machine learning, including classification, regression, clustering, and dimensionality reduction.

Scikit-learn, often stylized as scikit-learn or abbreviated as sklearn, is a powerful open-source machine learning library for the Python programming language. Designed to provide simple and efficient tools for predictive data analysis, it has become an indispensable resource for data scientists and machine learning practitioners worldwide.

Scikit-learn is built on top of several popular Python libraries, namely NumPy, SciPy, and matplotlib. It offers a range of supervised and unsupervised machine learning algorithms through a consistent interface in Python. The library is known for its ease of use, performance, and clean API, making it suitable for both beginners and experienced users.

The project started as scikits.learn, a Google Summer of Code project by David Cournapeau in 2007. The “scikits” (SciPy Toolkits) namespace was used to develop and distribute extensions to the SciPy library. In 2010, the project was further developed by Fabian Pedregosa, Gaël Varoquaux, Alexandre Gramfort, and Vincent Michel from the French Institute for Research in Computer Science and Automation (INRIA) in Saclay, France.

Since its first public release in 2010, Scikit-learn has undergone significant development with contributions from an active community of developers and researchers. It has evolved into one of the most popular machine learning libraries in Python, widely used in academia and industry.

Scikit-learn provides implementations of many machine learning algorithms for classification, regression, clustering, and dimensionality reduction.

Scikit-learn is designed with a consistent API across all its modules. This means that once you understand the basic interface, you can switch between different models with ease. The API is built around key interfaces like:

fit(): To train a model.

predict(): To make predictions using the trained model.

transform(): To modify or reduce data (used in preprocessing and dimensionality reduction).

The library is optimized for performance, with core algorithms implemented in Cython (a superset of Python designed to give C-like performance), ensuring efficient computation even with large datasets.
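The fit(), predict(), and transform() calls described above can be sketched as follows. This is a minimal illustration, assuming synthetic data from make_classification and two arbitrarily chosen classifiers (LogisticRegression and KNeighborsClassifier); neither choice comes from the text.

```python
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.neighbors import KNeighborsClassifier
from sklearn.preprocessing import StandardScaler

# Synthetic data, used here only for illustration
X, y = make_classification(n_samples=200, n_features=5, random_state=0)

# Transformers learn their parameters with fit() and apply them with transform()
scaler = StandardScaler()
scaler.fit(X)
X_scaled = scaler.transform(X)

# Estimators share the same fit()/predict() calls, so swapping models
# only changes the constructor
predictions = {}
for model in (LogisticRegression(), KNeighborsClassifier()):
    model.fit(X_scaled, y)
    predictions[type(model).__name__] = model.predict(X_scaled)
```

Because both classifiers expose the same methods, the loop body does not need to know which model it is training.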

Scikit-learn integrates seamlessly with other Python libraries such as NumPy, SciPy, matplotlib, and pandas.

This integration allows for flexible and powerful data processing pipelines.
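As a small sketch of that integration, the example below passes a plain NumPy array to a Scikit-learn transformer and then applies NumPy operations to the result. The random data and the choice of PCA are assumptions made for illustration.

```python
import numpy as np
from sklearn.decomposition import PCA

# A plain NumPy array serves as the input
rng = np.random.default_rng(0)
X = rng.normal(size=(100, 4))

pca = PCA(n_components=2)
X_reduced = pca.fit_transform(X)  # the output is also a NumPy array

# NumPy operations apply directly to the result
column_means = X_reduced.mean(axis=0)
```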

As an open-source library under the BSD license, Scikit-learn is free for both personal and commercial use. Its comprehensive documentation and active community support make it accessible to users at all levels.

Installing Scikit-learn is straightforward, especially if you already have NumPy and SciPy installed. You can install it using pip:

pip install -U scikit-learn

Or using conda if you are using the Anaconda distribution:

conda install scikit-learn
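A quick way to confirm the installation, assuming it succeeded, is to import the package from Python and print its version:

```python
import sklearn

# Print the installed version to confirm that the import works
print(sklearn.__version__)
```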

Scikit-learn is used for building predictive models and performing various machine learning tasks. Below are common steps involved in using Scikit-learn:

Before applying machine learning algorithms, data must be preprocessed:

For example, scale features with StandardScaler or MinMaxScaler.

Split the dataset into training and testing sets to evaluate the model’s performance on unseen data:

from sklearn.model_selection import train_test_split

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2)
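Scaling and splitting can be combined as in the sketch below. The toy arrays and the random_state value are hypothetical. One common practice, assumed here rather than stated in the text, is to fit the scaler on the training split only so that no test-set statistics leak into training.

```python
import numpy as np
from sklearn.model_selection import train_test_split
from sklearn.preprocessing import StandardScaler

# Hypothetical toy data: 10 samples, 2 features on very different scales
X = np.column_stack([np.arange(10, dtype=float), np.arange(10, dtype=float) * 1000])
y = np.array([0, 1] * 5)

X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, random_state=42
)

scaler = StandardScaler()
X_train_scaled = scaler.fit_transform(X_train)  # learn mean/std from the training data
X_test_scaled = scaler.transform(X_test)        # reuse the same statistics on the test data
```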

Select an appropriate algorithm based on the problem (classification, regression, clustering) and train the model:

from sklearn.ensemble import RandomForestClassifier

model = RandomForestClassifier()

model.fit(X_train, y_train)

Use the trained model to make predictions on new data:

y_pred = model.predict(X_test)

Assess the model’s performance using appropriate metrics:

from sklearn.metrics import accuracy_score

accuracy = accuracy_score(y_test, y_pred)

print(f"Accuracy: {accuracy}")
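The split, train, predict, and evaluate steps above can be put together into one runnable sketch. It assumes the Wine dataset bundled with Scikit-learn as stand-in data, and the fixed random_state values are arbitrary choices for reproducibility.

```python
from sklearn.datasets import load_wine
from sklearn.ensemble import RandomForestClassifier
from sklearn.metrics import accuracy_score
from sklearn.model_selection import train_test_split

# Stand-in data: any feature matrix X and label vector y would work here
X, y = load_wine(return_X_y=True)

# Hold out 20% of the samples, keeping class proportions similar in both splits
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, random_state=42, stratify=y
)

model = RandomForestClassifier(n_estimators=100, random_state=42)
model.fit(X_train, y_train)

y_pred = model.predict(X_test)
accuracy = accuracy_score(y_test, y_pred)
print(f"Accuracy: {accuracy:.3f}")
```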

Optimize the model’s performance by tuning hyperparameters using techniques like Grid Search or Random Search:

from sklearn.model_selection import GridSearchCV

param_grid = {'n_estimators': [100, 200], 'max_depth': [3, 5, None]}

grid_search = GridSearchCV(RandomForestClassifier(), param_grid)

grid_search.fit(X_train, y_train)
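After fitting, the search object exposes the best configuration it found. The sketch below assumes the bundled Iris dataset and a deliberately small grid so that it runs quickly; the grid values are illustrative only.

```python
from sklearn.datasets import load_iris
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import GridSearchCV

X, y = load_iris(return_X_y=True)

# Small illustrative grid: 2 x 2 = 4 candidate configurations
param_grid = {"n_estimators": [10, 50], "max_depth": [3, None]}
grid_search = GridSearchCV(
    RandomForestClassifier(random_state=0), param_grid, cv=3
)
grid_search.fit(X, y)

print(grid_search.best_params_)  # best combination found
print(grid_search.best_score_)   # mean cross-validated score of that combination
best_model = grid_search.best_estimator_  # refit on all of X and y by default
```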

Validate the model’s performance by testing it on multiple subsets of the data:

from sklearn.model_selection import cross_val_score

scores = cross_val_score(model, X, y, cv=5)

print(f"Cross-validation scores: {scores}")
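The per-fold scores are usually summarized by their mean and spread. The sketch below assumes the Iris dataset and wraps a scaler and classifier with make_pipeline, a choice not made in the text, so that the scaler is refit on each training fold.

```python
from sklearn.datasets import load_iris
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import cross_val_score
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

X, y = load_iris(return_X_y=True)

# The whole pipeline is cloned and refit for every fold
model = make_pipeline(StandardScaler(), LogisticRegression(max_iter=1000))
scores = cross_val_score(model, X, y, cv=5)

print(f"Mean accuracy: {scores.mean():.3f} (std {scores.std():.3f})")
```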

One of the classic datasets included in Scikit-learn is the Iris dataset. It involves classifying iris flowers into three species based on four features: sepal length, sepal width, petal length, and petal width.

Steps:

from sklearn.datasets import load_iris

from sklearn.metrics import accuracy_score

from sklearn.model_selection import train_test_split

from sklearn.svm import SVC

# Load the dataset

iris = load_iris()

X, y = iris.data, iris.target

# Split into training and testing sets

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2)

# Train a support vector classifier

model = SVC()

model.fit(X_train, y_train)

# Predict and evaluate

y_pred = model.predict(X_test)

accuracy = accuracy_score(y_test, y_pred)

print(f"Accuracy: {accuracy}")

The Boston Housing dataset was removed from Scikit-learn in version 1.2 due to ethical concerns, so this example uses the California Housing dataset instead. You can perform regression to predict median house values based on features like median income, house age, and average number of rooms.

Steps:

from sklearn.datasets import fetch_california_housing

from sklearn.linear_model import LinearRegression

from sklearn.metrics import mean_squared_error

from sklearn.model_selection import train_test_split

# Load the dataset

housing = fetch_california_housing()

X, y = housing.data, housing.target

# Split into training and testing sets

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2)

# Train a linear regression model

model = LinearRegression()

model.fit(X_train, y_train)

# Predict and evaluate

y_pred = model.predict(X_test)

mse = mean_squared_error(y_test, y_pred)

print(f"MSE: {mse}")

Clustering can be used in customer segmentation to group customers based on purchasing behavior.

Steps:

from sklearn.cluster import KMeans

from sklearn.preprocessing import StandardScaler

# X is assumed to be a numeric array of customer features

scaler = StandardScaler()

X_scaled = scaler.fit_transform(X)

# Group the customers into three clusters

kmeans = KMeans(n_clusters=3)

kmeans.fit(X_scaled)

clusters = kmeans.labels_

While Scikit-learn is not specifically designed for natural language processing (NLP) or chatbots, it is instrumental in building machine learning models that can be part of an AI system, including chatbots.

Scikit-learn provides tools for converting text data into numerical features:

from sklearn.feature_extraction.text import TfidfVectorizer

documents = ["Hello, how can I help you?", "What is your name?", "Goodbye!"]

vectorizer = TfidfVectorizer()

X = vectorizer.fit_transform(documents)

Chatbots often need to classify user queries into intents to provide appropriate responses. Scikit-learn can be used to train classifiers for intent detection.

Steps:

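from sklearn.feature_extraction.text import TfidfVectorizer

from sklearn.linear_model import LogisticRegression

# queries is assumed to be a list of example texts, intents their labels

vectorizer = TfidfVectorizer()

X = vectorizer.fit_transform(queries)

model = LogisticRegression()

model.fit(X, intents)

# Vectorize a new query with the fitted vectorizer before predicting

new_query = "Can you help me with my account?"

X_new = vectorizer.transform([new_query])

predicted_intent = model.predict(X_new)

Understanding the sentiment behind user messages can enhance chatbot interactions.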

from sklearn.model_selection import train_test_split

from sklearn.svm import SVC

# Assuming X holds vectorized text features (for example, from TfidfVectorizer)

# and y holds the sentiment labels

X_train, X_test, y_train, y_test = train_test_split(X, y)

model = SVC()

model.fit(X_train, y_train)
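A self-contained sketch of this idea is shown below. The six messages and their labels are hypothetical, and LinearSVC combined with TfidfVectorizer through make_pipeline is one reasonable setup rather than a prescribed one. A real sentiment model would need far more labeled data.

```python
from sklearn.feature_extraction.text import TfidfVectorizer
from sklearn.pipeline import make_pipeline
from sklearn.svm import LinearSVC

# Hypothetical labeled messages, for illustration only
messages = [
    "I love this service",
    "This is great, thank you",
    "Fantastic support",
    "I hate waiting",
    "This is terrible",
    "Awful experience, very bad",
]
labels = ["positive", "positive", "positive", "negative", "negative", "negative"]

# The pipeline turns raw text into TF-IDF features, then fits a linear SVM
sentiment_model = make_pipeline(TfidfVectorizer(), LinearSVC())
sentiment_model.fit(messages, labels)

predicted = sentiment_model.predict(["thank you, great support"])
```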

Scikit-learn models can be integrated into larger AI systems and automated workflows:

Pipeline Integration: Scikit-learn’s Pipeline class allows for chaining transformers and estimators, facilitating the automation of preprocessing and modeling steps.

from sklearn.pipeline import Pipeline

pipeline = Pipeline([

('vectorizer', TfidfVectorizer()),

('classifier', LogisticRegression())

])

pipeline.fit(queries, intents)
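A runnable version of this pipeline is sketched below. The queries and intents lists are hypothetical training data invented for the example; a production intent classifier would need many more examples per intent.

```python
from sklearn.feature_extraction.text import TfidfVectorizer
from sklearn.linear_model import LogisticRegression
from sklearn.pipeline import Pipeline

# Hypothetical training data, for illustration only
queries = [
    "I forgot my password",
    "How do I reset my password",
    "What are your opening hours",
    "When are you open",
    "I want to cancel my order",
    "Cancel my order please",
]
intents = ["account", "account", "hours", "hours", "cancel", "cancel"]

pipeline = Pipeline([
    ("vectorizer", TfidfVectorizer()),
    ("classifier", LogisticRegression()),
])
pipeline.fit(queries, intents)

# Raw text goes in; the pipeline vectorizes it before classifying
new_query = ["please cancel the order"]
predicted_intent = pipeline.predict(new_query)[0]
probabilities = pipeline.predict_proba(new_query)[0]
```

Because the vectorizer lives inside the pipeline, the same object handles both training and prediction, which avoids applying a mismatched vectorizer at inference time.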

Model Deployment: Trained models can be saved using joblib and integrated into production systems.

import joblib

joblib.dump(model, 'model.joblib')

# Later

model = joblib.load('model.joblib')
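The save and load round trip can be checked as in the sketch below, which assumes a LogisticRegression model trained on the Iris dataset and a temporary directory for the file.

```python
import os
import tempfile

import joblib
import numpy as np
from sklearn.datasets import load_iris
from sklearn.linear_model import LogisticRegression

X, y = load_iris(return_X_y=True)
model = LogisticRegression(max_iter=1000).fit(X, y)

# Save the fitted model to a temporary file and load it back
with tempfile.TemporaryDirectory() as tmp_dir:
    path = os.path.join(tmp_dir, "model.joblib")
    joblib.dump(model, path)
    restored = joblib.load(path)

# The restored model should reproduce the original predictions
same_predictions = np.array_equal(model.predict(X), restored.predict(X))
```

Files written by joblib are pickle-based, so load them only from sources you trust, and preferably with the same Scikit-learn version that saved them.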

While Scikit-learn is a versatile library, there are alternatives for specific needs, such as TensorFlow or PyTorch for deep learning.

Research on Scikit-learn

Scikit-learn is a comprehensive Python module that integrates a wide range of state-of-the-art machine learning algorithms suitable for medium-scale supervised and unsupervised problems. A significant paper titled “Scikit-learn: Machine Learning in Python” by Fabian Pedregosa and others, published in the Journal of Machine Learning Research in 2011, provides an in-depth look at this tool. The authors emphasize that Scikit-learn is designed to make machine learning accessible to non-specialists through a general-purpose high-level language. The package focuses on ease of use, performance, documentation, and API consistency while maintaining minimal dependencies. Together with its distribution under the simplified BSD license, this makes it well suited to both academic and commercial settings. Source code, binaries, and documentation are available from the project website, scikit-learn.org.

Scikit-learn is an open-source machine learning library for Python designed to provide simple, efficient tools for data analysis and modeling. It supports a wide range of supervised and unsupervised learning algorithms, including classification, regression, clustering, and dimensionality reduction.

Scikit-learn offers a consistent API, efficient implementations of many machine learning algorithms, integration with popular Python libraries like NumPy and pandas, comprehensive documentation, and extensive community support.

You can install Scikit-learn using pip with the command 'pip install -U scikit-learn' or with conda using 'conda install scikit-learn' if you use the Anaconda distribution.

Scikit-learn is not designed for deep learning. For advanced neural networks and deep learning tasks, libraries like TensorFlow or PyTorch are more suitable.

Scikit-learn is well suited to beginners: it is known for its ease of use, clean API, and excellent documentation, which also make it a good fit for experienced machine learning users.
