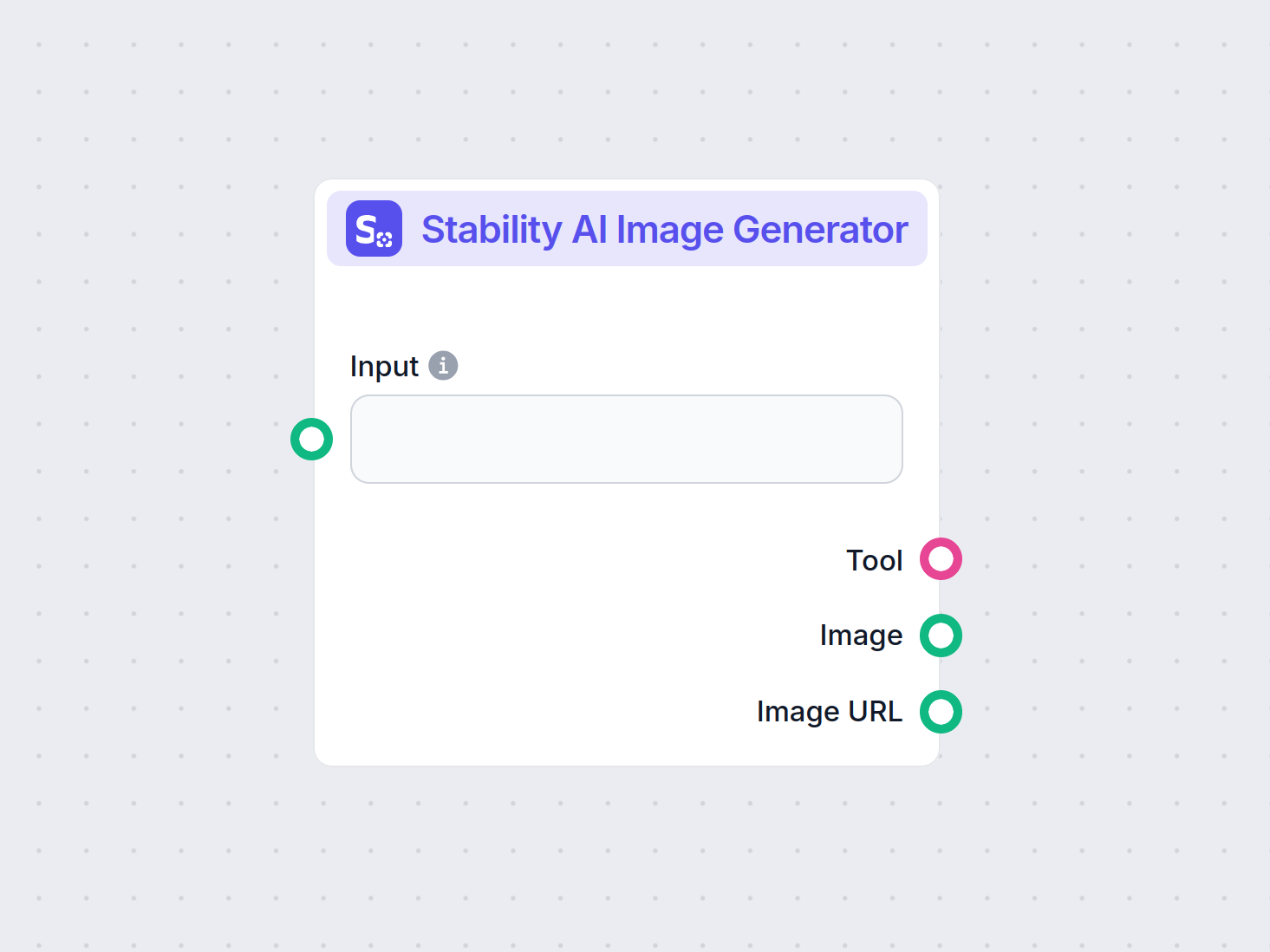

Stability AI Image Generator

Generate high-quality images from text prompts with the Stability AI Image Generator component. Powered by Stable Diffusion models, this tool offers customizabl...

Stable Diffusion is a leading text-to-image AI model enabling users to generate photorealistic visuals from prompts using advanced latent diffusion and deep learning techniques.

Stable Diffusion is a text-to-image AI model that creates high-quality images from descriptions using deep learning. Prompting techniques such as negative prompts and reference images improve results, especially for complex elements like hands.

Stable Diffusion is an advanced text-to-image generation model that utilizes deep learning techniques to produce high-quality, photorealistic images based on textual descriptions. Developed as a latent diffusion model, it represents a significant breakthrough in the field of generative artificial intelligence, combining the principles of diffusion models and machine learning to create images that closely match given text prompts.

Stable Diffusion uses deep learning and diffusion models to generate images by progressively refining random noise into coherent visuals. Despite being trained on millions of images, it struggles with complex elements such as hands. As models are trained on larger datasets, these problems are diminishing and the generated images are becoming more realistic.

One effective way to tackle the hand issue is to use negative prompts. By adding phrases such as (-bad anatomy) or (-bad hands -unnatural hands) to your prompts, you instruct the AI to avoid producing distorted features. The exact syntax depends on the tool: many interfaces provide a separate negative-prompt field, and the Hugging Face Diffusers library takes a negative_prompt argument. Avoid overusing negative prompts, as they can limit the model’s creative output.

Another technique is to use reference images to guide the AI. In tools that support it, including an {image} tag with a link to a reference image in your prompt gives the AI a visual template for accurate hand rendering. This is particularly useful for maintaining correct hand proportions and poses.

For the best results, combine both negative prompts and reference images. This dual approach ensures the AI avoids common errors while adhering to high-quality examples.

Adding terms such as (-bent fingers) or (realistic perspectives) can further enhance hand quality. By mastering these techniques, you can significantly improve hand rendering in your Stable Diffusion creations, achieving artwork with the finesse of a seasoned artist. Gather your reference images, craft precise prompts, and watch your AI art evolve!
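To make the effect of a negative prompt more concrete, here is a minimal sketch of classifier-free guidance, the mechanism commonly used to steer Stable Diffusion. It assumes the model has already produced two noise predictions, one conditioned on the prompt and one on the negative prompt (or an empty prompt when none is given). The function name `guided_noise` and the toy numbers are illustrative, not part of any library.

```python
def guided_noise(eps_positive, eps_negative, guidance_scale=7.5):
    """Combine two noise predictions with classifier-free guidance.

    eps_positive: noise predicted when conditioning on the prompt.
    eps_negative: noise predicted when conditioning on the negative prompt
                  (or on an empty prompt when none is given).
    """
    # Move away from the negative prediction, toward the positive one.
    return [
        neg + guidance_scale * (pos - neg)
        for pos, neg in zip(eps_positive, eps_negative)
    ]


# Toy 3-element "noise predictions" (made-up numbers for illustration).
eps_pos = [0.2, -0.1, 0.5]
eps_neg = [0.1, -0.3, 0.5]

# With a scale of 1.0 the result is approximately eps_pos.
print(guided_noise(eps_pos, eps_neg, guidance_scale=1.0))
# Larger scales exaggerate the difference between the two predictions.
print(guided_noise(eps_pos, eps_neg, guidance_scale=7.5))
```

Where the two predictions agree (the third element), the guidance scale has no effect; where they differ, the result is pushed further from what the negative prompt describes. This is also why stacking many negative terms can narrow the range of images the model produces.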

At its core, Stable Diffusion operates by transforming text prompts into visual representations through a series of computational processes. Understanding its functionality involves delving into the concepts of diffusion models, latent spaces, and neural networks.

Diffusion models are a class of generative models in machine learning that learn to create data by reversing a diffusion process. The diffusion process involves gradually adding noise to data—such as images—until they become indistinguishable from random noise. The model then learns to reverse this process, removing noise step by step to recover the original data. This reverse diffusion process is key to generating new, coherent data from random noise.
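The forward (noising) half of this process can be sketched in a few lines of dependency-free Python. The sketch assumes a DDPM-style linear beta schedule with illustrative values; Stable Diffusion's actual schedule differs in detail, and the helper names `alpha_bar_schedule` and `add_noise` are hypothetical.

```python
import math
import random


def alpha_bar_schedule(num_steps, beta_start=1e-4, beta_end=0.02):
    """Cumulative products of (1 - beta_t) for a linear beta schedule."""
    alpha_bars, running = [], 1.0
    for t in range(num_steps):
        beta = beta_start + (beta_end - beta_start) * t / (num_steps - 1)
        running *= 1.0 - beta
        alpha_bars.append(running)
    return alpha_bars


def add_noise(x0, alpha_bar, rng):
    """Sample x_t = sqrt(alpha_bar) * x0 + sqrt(1 - alpha_bar) * noise."""
    signal, sigma = math.sqrt(alpha_bar), math.sqrt(1.0 - alpha_bar)
    return [signal * v + sigma * rng.gauss(0.0, 1.0) for v in x0]


rng = random.Random(0)
alpha_bars = alpha_bar_schedule(1000)
x0 = [1.0, -1.0, 0.5, 0.0]  # stand-in for image pixels or latents

lightly_noised = add_noise(x0, alpha_bars[10], rng)
heavily_noised = add_noise(x0, alpha_bars[-1], rng)
print(f"signal kept at t=10:  {math.sqrt(alpha_bars[10]):.3f}")
print(f"signal kept at t=999: {math.sqrt(alpha_bars[-1]):.3f}")
```

Early timesteps keep almost all of the original signal, while the last timestep keeps almost none, which is what makes the fully noised sample indistinguishable from random noise.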

Stable Diffusion specifically uses a latent diffusion model. Unlike traditional diffusion models that operate directly in the high-dimensional pixel space of images, latent diffusion models work within a compressed latent space. This latent space is a lower-dimensional representation of the data, capturing essential features while reducing computational complexity. By operating in the latent space, Stable Diffusion can generate high-resolution images more efficiently.

The core mechanism of Stable Diffusion involves the reverse diffusion process in the latent space. Starting with a random noise latent vector, the model iteratively refines this latent representation by predicting and removing noise at each step. This refinement is guided by the textual description provided by the user. The process continues until the latent vector converges to a state that, when decoded, produces an image consistent with the text prompt.

Stable Diffusion’s architecture integrates several key components that work together to transform text prompts into images.

The Variational Autoencoder (VAE) serves as the encoder-decoder system that compresses images into the latent space and reconstructs them from it. The encoder transforms an image into its latent representation, capturing the fundamental features in a reduced form. The decoder takes this latent representation and reconstructs the detailed image.

This process is crucial because it allows the model to work with lower-dimensional data, significantly reducing computational resources compared to operating in the full pixel space.
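A quick calculation shows how large the saving is. The sketch below assumes the configuration used by Stable Diffusion v1 and v2 checkpoints, where the VAE downsamples each spatial dimension by a factor of 8 and the latent has 4 channels; other model versions may use different values.

```python
def latent_shape(height, width, downsample=8, latent_channels=4):
    """Shape of the latent tensor for an image of the given pixel size."""
    return (latent_channels, height // downsample, width // downsample)


def compression_ratio(height, width, image_channels=3):
    """How many times fewer values the latent holds than the RGB image."""
    c, h, w = latent_shape(height, width)
    return (image_channels * height * width) / (c * h * w)


print(latent_shape(512, 512))       # (4, 64, 64)
print(compression_ratio(512, 512))  # 48.0
```

Under these assumptions, a 512×512 RGB image with 786,432 values is represented by 16,384 latent values, so each denoising step processes roughly 48 times less data than it would in pixel space.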

The U-Net is a specialized neural network architecture used within Stable Diffusion for image processing tasks. It consists of an encoding path and a decoding path with skip connections between mirrored layers. In the context of Stable Diffusion, the U-Net functions as the noise predictor during the reverse diffusion process.

At each timestep of the diffusion process, the U-Net predicts the amount of noise present in the latent representation. This prediction is then used to refine the latent vector by subtracting the estimated noise, progressively denoising the latent space towards an image that aligns with the text prompt.
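The loop below is a toy sketch of this predict-and-subtract cycle, using a deterministic DDIM-style update. It makes a strong simplifying assumption: the U-Net is replaced by a hypothetical `oracle_noise_predictor` that already knows the target latent, so the loop recovers it exactly. A real U-Net only approximates the noise and is additionally conditioned on the text embeddings.

```python
import math
import random


def ddim_step(x_t, eps, ab_t, ab_prev):
    """One deterministic denoising step given the predicted noise eps."""
    # Estimate the clean sample implied by the current noise prediction.
    x0_pred = [
        (x - math.sqrt(1 - ab_t) * e) / math.sqrt(ab_t) for x, e in zip(x_t, eps)
    ]
    # Re-noise that estimate to the lower noise level of the previous timestep.
    return [
        math.sqrt(ab_prev) * p + math.sqrt(1 - ab_prev) * e
        for p, e in zip(x0_pred, eps)
    ]


def oracle_noise_predictor(x_t, ab_t, target):
    """Stand-in for the U-Net: returns the exact noise because it knows the target."""
    return [
        (x - math.sqrt(ab_t) * t) / math.sqrt(1 - ab_t) for x, t in zip(x_t, target)
    ]


target = [0.8, -0.3, 0.1, 0.5]             # the "latent" we hope to recover
alpha_bars = [0.999, 0.9, 0.6, 0.3, 0.05]  # signal level per timestep (toy values)

rng = random.Random(0)
x = [rng.gauss(0.0, 1.0) for _ in target]  # start from pure random noise

for t in reversed(range(len(alpha_bars))):
    ab_t = alpha_bars[t]
    ab_prev = alpha_bars[t - 1] if t > 0 else 1.0  # final step: no noise left
    eps = oracle_noise_predictor(x, ab_t, target)
    x = ddim_step(x, eps, ab_t, ab_prev)

print([round(v, 6) for v in x])  # matches target up to floating-point error
```

The structure is the part worth noting: each iteration asks the predictor for the noise, forms an estimate of the clean latent, and steps to a less noisy state until no noise remains.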

To incorporate textual information, Stable Diffusion employs a text encoder based on the CLIP (Contrastive Language-Image Pretraining) model. CLIP is designed to understand and relate textual and visual information by mapping them into a shared latent space.

When a user provides a text prompt, the text encoder converts this prompt into a series of embeddings—numerical representations of the textual data. These embeddings condition the U-Net during the reverse diffusion process, guiding the image generation to reflect the content of the text prompt.
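In Stable Diffusion this conditioning happens through cross-attention layers inside the U-Net, where image locations act as queries and prompt tokens supply keys and values. The following is a small sketch of that operation on toy data. In the real model the queries, keys, and values are learned projections of much larger tensors; the numbers and function names here are illustrative only.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def cross_attention(queries, keys, values):
    """Each query (image location) takes a weighted average of the values (tokens)."""
    dim = len(keys[0])
    outputs, weights = [], []
    for q in queries:
        # Scaled dot-product score between this location and every token.
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(dim) for k in keys]
        w = softmax(scores)
        weights.append(w)
        outputs.append(
            [sum(wi * v[j] for wi, v in zip(w, values)) for j in range(len(values[0]))]
        )
    return outputs, weights


# Toy data: 2 latent locations, 3 prompt tokens, 2-dimensional features.
latent_queries = [[1.0, 0.0], [0.0, 1.0]]
token_keys = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
token_values = [[10.0, 0.0], [0.0, 10.0], [5.0, 5.0]]

outputs, weights = cross_attention(latent_queries, token_keys, token_values)
for location, w in enumerate(weights):
    print(location, [round(x, 3) for x in w])
```

Each row of weights sums to 1 and shows how much a given image location draws on each prompt token, which is how different words can influence different regions of the image.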

Stable Diffusion offers versatility in generating images and can be utilized in various ways depending on user needs.

The primary use of Stable Diffusion is generating images from text prompts. Users input descriptive text, and the model generates an image that represents the description. For example, a user could input “A serene beach at sunset with palm trees” and receive an image depicting that scene.

This capability is particularly valuable in creative industries, content creation, and design, where rapid visualization of concepts is essential.

Beyond generating images from scratch, Stable Diffusion can also modify existing images based on textual instructions. By providing an initial image and a text prompt, the model can produce a new image that incorporates changes described by the text.

For instance, a user might input an image of a daytime cityscape with the prompt “change to nighttime with neon lights,” resulting in an image that reflects these modifications.
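Image-to-image generation is usually controlled by a strength setting: the input image is partially noised and only the remaining denoising steps are run. The helper below sketches how such a setting can map to the number of steps. It mirrors how the `strength` argument of image-to-image pipelines is commonly interpreted, but the function name `img2img_schedule` and the exact rounding are assumptions.

```python
def img2img_schedule(num_inference_steps, strength):
    """Return (first_step_index, steps_actually_run) for an image-to-image pass.

    strength=0.0 keeps the input image unchanged; strength=1.0 ignores it
    and denoises from (almost) pure noise, like text-to-image.
    """
    if not 0.0 <= strength <= 1.0:
        raise ValueError("strength must be between 0 and 1")
    steps_run = min(int(num_inference_steps * strength), num_inference_steps)
    first_step = num_inference_steps - steps_run
    return first_step, steps_run


for strength in (0.25, 0.5, 1.0):
    print(strength, img2img_schedule(50, strength))
```

A low strength preserves the composition of the original cityscape and changes mostly lighting and texture, while a high strength gives the prompt more freedom at the cost of fidelity to the input.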

Inpainting involves filling in missing or corrupted parts of an image. Stable Diffusion excels in this area by using text prompts to guide the reconstruction of specific image areas. Users can mask portions of an image and provide textual descriptions of what should fill the space.

This feature is useful in photo restoration, removing unwanted objects, or altering specific elements within an image while maintaining overall coherence.
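The role of the mask can be illustrated with a minimal compositing sketch that uses nested lists as stand-ins for image data. It shows only the idea of keeping unmasked content and replacing masked content. Real inpainting pipelines operate on latents and typically apply the mask during denoising, and the function name `blend_with_mask` is hypothetical.

```python
def blend_with_mask(original, generated, mask):
    """Keep original values where mask is 0, use generated values where mask is 1."""
    return [
        [m * g + (1 - m) * o for o, g, m in zip(o_row, g_row, m_row)]
        for o_row, g_row, m_row in zip(original, generated, mask)
    ]


original = [[1, 1, 1], [1, 1, 1]]   # known image content
generated = [[9, 9, 9], [9, 9, 9]]  # what the model proposes
mask = [[0, 1, 1], [0, 0, 1]]       # 1 = region to repaint

print(blend_with_mask(original, generated, mask))
# [[1, 9, 9], [1, 1, 9]]
```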

By generating sequences of images with slight variations, Stable Diffusion can be extended to create animations or video content. Tools like Deforum enhance Stable Diffusion’s capabilities to produce dynamic visual content guided by text prompts over time.

This opens up possibilities in animation, visual effects, and dynamic content generation without the need for traditional frame-by-frame animation techniques.
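One generic way to obtain a sequence of slightly varying images is to interpolate between two starting noise latents and generate one frame per interpolated latent. The sketch below shows spherical interpolation (slerp) on toy vectors. It is an illustration of that general idea under simplified assumptions, not a description of how Deforum is implemented.

```python
import math


def slerp(t, a, b):
    """Spherical interpolation between vectors a and b for t in [0, 1]."""
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(x * x for x in b))
    cos_omega = sum(x * y for x, y in zip(a, b)) / (norm_a * norm_b)
    omega = math.acos(max(-1.0, min(1.0, cos_omega)))
    sin_omega = math.sin(omega)
    if abs(sin_omega) < 1e-6:
        # Vectors are (anti-)parallel: fall back to a straight line.
        return [(1 - t) * x + t * y for x, y in zip(a, b)]
    wa = math.sin((1 - t) * omega) / sin_omega
    wb = math.sin(t * omega) / sin_omega
    return [wa * x + wb * y for x, y in zip(a, b)]


start_noise = [1.0, 0.0, 0.0]  # toy stand-ins for two initial noise latents
end_noise = [0.0, 1.0, 0.0]

frames = [slerp(i / 4, start_noise, end_noise) for i in range(5)]
for frame in frames:
    print([round(v, 3) for v in frame])
```

Slerp is often preferred over straight linear interpolation for noise latents because it keeps the length of the intermediate vectors close to that of the endpoints, which tends to keep intermediate frames in the range the model expects.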

Stable Diffusion’s ability to generate images from textual descriptions makes it a powerful tool in AI automation and chatbot development.

Incorporating Stable Diffusion into chatbots enables the generation of visual content in response to user queries. For example, in a customer service scenario, a chatbot could provide visual guides or illustrations generated on-the-fly to assist users.

Text prompts are converted into embeddings using the CLIP text encoder. These embeddings are crucial for conditioning the image generation process, ensuring that the output image aligns with the user’s textual description.

The reverse diffusion process involves iteratively refining the latent representation by removing predicted noise. At each timestep, the model considers the text embeddings and the current state of the latent vector to predict the noise component accurately.

The model’s proficiency in handling noisy images stems from its training on large datasets where it learns to distinguish and denoise images effectively. This training enables it to generate clear images even when starting from random noise.

Working in the latent space offers computational efficiency. Since the latent space has fewer dimensions than the pixel space, operations are less resource-intensive. This efficiency allows Stable Diffusion to generate high-resolution images without excessive computational demands.

Artists and designers can use Stable Diffusion to rapidly prototype visuals based on conceptual descriptions, aiding in the creative process and reducing the time from idea to visualization.

Marketing teams can generate custom imagery for campaigns, social media, and advertisements without the need for extensive graphic design resources.

Game developers can create assets, environments, and concept art by providing descriptive prompts, streamlining the asset creation pipeline.

Retailers can generate images of products in various settings or configurations, enhancing product visualization and customer experience.

Educators and content creators can produce illustrations and diagrams to explain complex concepts, making learning materials more engaging.

Researchers in artificial intelligence and computer vision can use Stable Diffusion to explore the capabilities of diffusion models and latent spaces further.

To effectively use Stable Diffusion, certain technical considerations should be noted.

To begin using Stable Diffusion, follow these steps:

For developers building AI automation systems and chatbots, Stable Diffusion can be integrated to enhance functionality.

When using Stable Diffusion, it’s important to be mindful of ethical implications.

Stable Diffusion is an active topic in generative modeling, particularly for data augmentation and image synthesis. The works listed below include studies of the Stable Diffusion model as well as earlier papers on diffusion in alpha-stable settings, which share the terminology but address a different subject.

Diffusion Least Mean P-Power Algorithms for Distributed Estimation in Alpha-Stable Noise Environments by Fuxi Wen (2013):

Introduces a diffusion least mean p-power (LMP) algorithm designed for distributed estimation in environments characterized by alpha-stable noise. The study compares the diffusion LMP method with the diffusion least mean squares (LMS) algorithm and demonstrates improved performance in alpha-stable noise conditions. This research is crucial for developing robust estimation techniques in noisy environments.

Stable Diffusion for Data Augmentation in COCO and Weed Datasets by Boyang Deng (2024):

Investigates the use of Stable Diffusion models for generating high-resolution synthetic images to improve small datasets. Using techniques such as image-to-image translation, DreamBooth, and ControlNet, the research evaluates the efficiency of Stable Diffusion in classification and detection tasks. The findings suggest promising applications of Stable Diffusion in various fields.

Diffusion and Relaxation Controlled by Tempered α-stable Processes by Aleksander Stanislavsky, Karina Weron, and Aleksander Weron (2011):

Derives properties of anomalous diffusion and nonexponential relaxation using tempered α-stable processes. It addresses the infinite-moment difficulty associated with α-stable random operational time and provides a model that includes subdiffusion as a special case.

Evaluating a Synthetic Image Dataset Generated with Stable Diffusion by Andreas Stöckl (2022):

Evaluates synthetic images generated by the Stable Diffusion model using the WordNet taxonomy. It assesses the model’s capability to produce correct images for various concepts, illustrating differences in representation accuracy. These evaluations are vital for understanding Stable Diffusion’s role in data augmentation.

Comparative Analysis of Generative Models: Enhancing Image Synthesis with VAEs, GANs, and Stable Diffusion by Sanchayan Vivekananthan (2024):

Explores three generative frameworks: VAEs, GANs, and Stable Diffusion models. The research highlights the strengths and limitations of each model, noting that while VAEs and GANs have their advantages, Stable Diffusion excels in certain synthesis tasks.

Let’s look at how to implement a Stable Diffusion Model in Python using the Hugging Face Diffusers library.

Install the required libraries:

    pip install torch transformers diffusers accelerate
    pip install xformers  # Optional

The Diffusers library provides a convenient way to load pre-trained models:

    import torch
    from diffusers import StableDiffusionPipeline

    # Load the Stable Diffusion model
    model_id = "stabilityai/stable-diffusion-2-1"
    pipe = StableDiffusionPipeline.from_pretrained(model_id, torch_dtype=torch.float16)
    pipe = pipe.to("cuda")  # Move the model to GPU for faster inference
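The loading code above assumes a CUDA-capable GPU. A small, hedged sketch of a fallback is shown here: it checks whether PyTorch is installed and whether CUDA is available, then picks a device and precision accordingly. The helper name `pick_device_and_dtype` is hypothetical, and running on CPU in full precision will be considerably slower.

```python
import importlib.util


def pick_device_and_dtype():
    """Return ("cuda", "float16") when a CUDA GPU is usable, else ("cpu", "float32")."""
    if importlib.util.find_spec("torch") is not None:
        import torch

        if torch.cuda.is_available():
            return "cuda", "float16"
    return "cpu", "float32"


device, dtype_name = pick_device_and_dtype()
print(f"Using device={device}, dtype={dtype_name}")
# With torch installed, these map to pipe.to(device) and
# torch_dtype=getattr(torch, dtype_name) when loading the pipeline.
```

Half precision is generally only worthwhile on a GPU, which is why the sketch pairs float16 with CUDA and float32 with CPU.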

To generate images, simply provide a text prompt:

    prompt = "A serene landscape with mountains and a lake, photorealistic, 8K resolution"
    image = pipe(prompt).images[0]

    # Save or display the image
    image.save("generated_image.png")

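Here, model_id selects the pre-trained checkpoint, and torch_dtype=torch.float16 loads the weights in half precision, which reduces memory usage. You can customize various parameters: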

    image = pipe(
        prompt=prompt,
        num_inference_steps=50,  # Number of denoising steps
        guidance_scale=7.5,      # Assumed value: how strongly the image follows the prompt
    ).images[0]

Stable Diffusion is an advanced AI model designed for generating high-quality, photorealistic images from text prompts. It uses latent diffusion and deep learning to transform textual descriptions into visuals.

Stable Diffusion operates by converting text prompts into text embeddings using a CLIP text encoder, then iteratively denoising a latent representation guided by those embeddings. Decoding the final latent yields a coherent image.

Stable Diffusion is used for creative content generation, marketing materials, game asset creation, e-commerce product visualization, educational illustrations, and AI-powered chatbots.

Yes, Stable Diffusion supports image-to-image translation and inpainting, allowing users to alter existing images or fill in missing parts based on text prompts.

A computer with a modern GPU is recommended for efficient image generation using Stable Diffusion. The model also requires Python and libraries such as PyTorch and Diffusers.

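Yes, Stable Diffusion’s code and model weights are publicly available, which encourages community contributions, customization, and broad accessibility. License terms vary by model version and can include use restrictions (early releases used the CreativeML OpenRAIL-M license), so check the license of the specific checkpoint you use.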

Unleash your creativity with Stable Diffusion and see how AI can transform your ideas into stunning visuals.
