Eunomia MCP Server

Eunomia MCP Server is an extension of the Eunomia framework that orchestrates data governance policies—like PII detection and access control—across text streams...

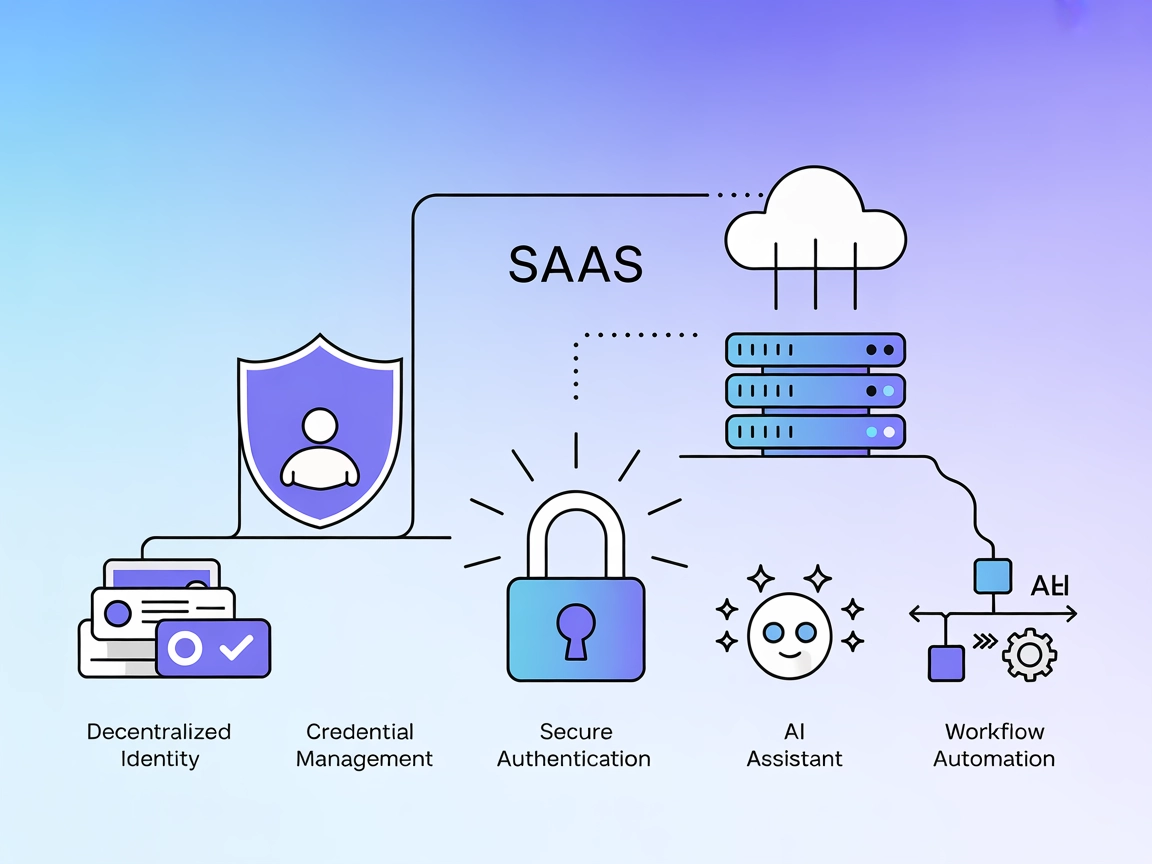

The cheqd MCP Server integrates decentralized identity management into AI workflows, enabling secure identity verification, credential management, and interoper...

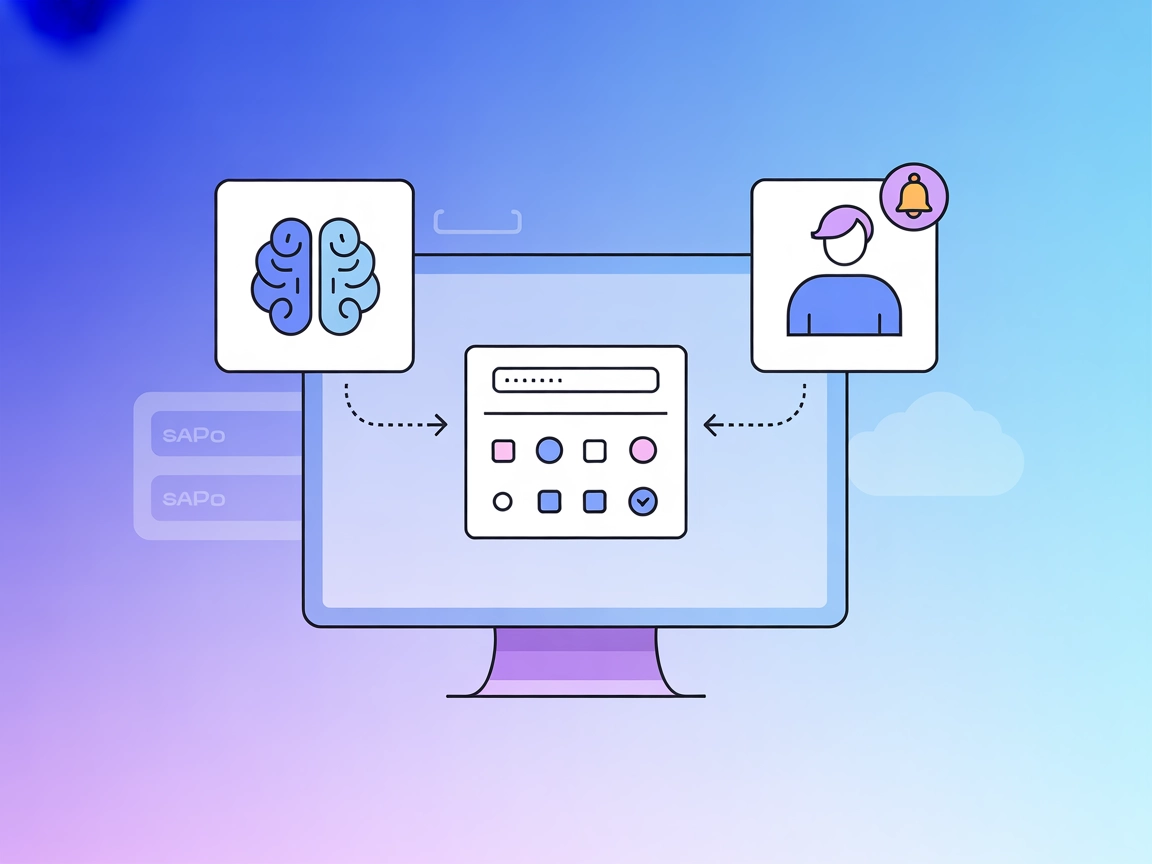

The Human-In-the-Loop MCP Server for FlowHunt enables seamless integration of human judgment, approval, and input into AI workflows through real-time interactiv...

The Pagos MCP Server bridges AI assistants with the Pagos API, enabling real-time access to BIN (Bank Identification Number) data for secure and intelligent pay...

The Attestable MCP Server brings remote attestation and confidential computing to FlowHunt workflows, letting AI agents and clients verify server integrity befo...

The FDIC BankFind MCP Server connects AI assistants and developer workflows to authoritative U.S. banking data via the FDIC BankFind API, enabling search, retri...

The SEC EDGAR MCP Server connects AI agents with the SEC’s EDGAR system, enabling automated access, querying, and analysis of public financial filings and discl...

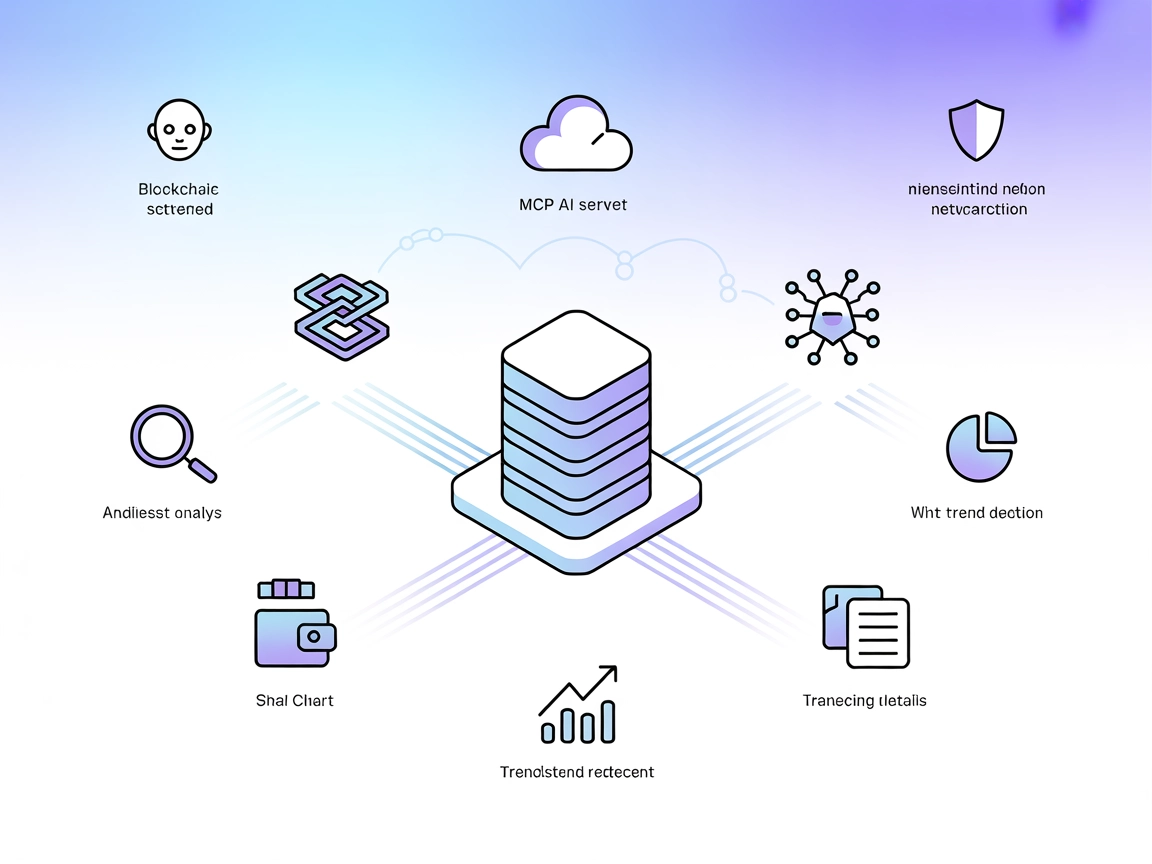

The TON Blockchain MCP Server enables natural language interaction with the TON blockchain for AI assistants. It supports real-time analysis, scam detection, tr...

The OceanBase MCP Server bridges secure AI interactions with OceanBase databases, enabling tasks like listing tables, reading data, and executing SQL queries in...

The Sanctions MCP Server connects AI assistants to robust sanctions screening capabilities, enabling automated compliance checks against global sanctions lists ...

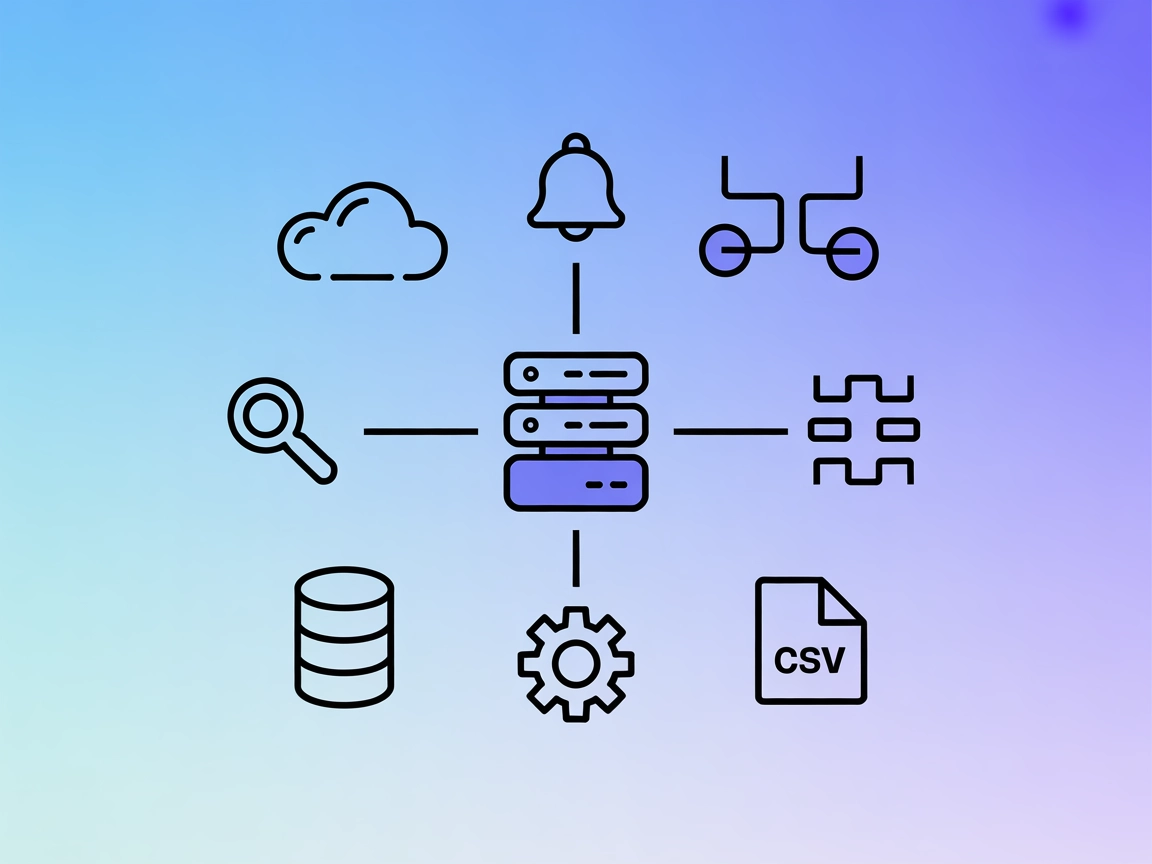

The Splunk MCP Server bridges AI assistants and Splunk, exposing search, alert, index, and macro functionalities as tools for seamless operational integration a...

The OpsLevel MCP Server bridges AI assistants with OpsLevel's service catalog and engineering data, enabling real-time access to service metadata, compliance au...

Discover FlowHunt's comprehensive security policy, covering infrastructure, organizational, product, and data privacy practices to ensure the highest standards ...

AI certification processes are comprehensive assessments and validations designed to ensure that artificial intelligence systems meet predefined standards and r...

AI regulatory frameworks are structured guidelines and legal measures designed to govern the development, deployment, and use of artificial intelligence technol...

Discover how the European AI Act impacts chatbots, detailing risk classifications, compliance requirements, deadlines, and the penalties for non-compliance to e...

Compliance reporting is a structured and systematic process that enables organizations to document and present evidence of their adherence to internal policies,...

Data governance is the framework of processes, policies, roles, and standards that ensure the effective and efficient use, availability, integrity, and security...

Data protection regulations are legal frameworks, policies, and standards that secure personal data, manage its processing, and safeguard individuals’ privacy r...

The European Union Artificial Intelligence Act (EU AI Act) is the world’s first comprehensive regulatory framework designed to manage the risks and harness the ...

A practical guide for business leaders on implementing Human-in-the-Loop (HITL) frameworks for responsible AI governance, risk reduction, compliance, and buildi...

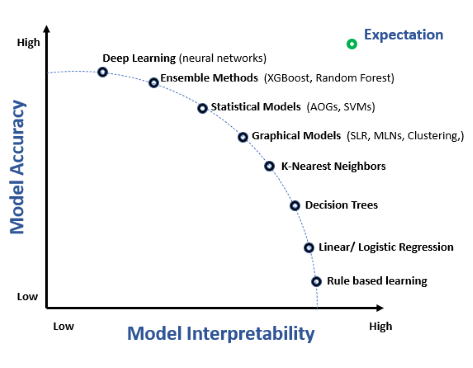

Model interpretability refers to the ability to understand, explain, and trust the predictions and decisions made by machine learning models. It is critical in ...

Explore the EU AI Act’s tiered penalty framework, with fines up to €35 million or 7% of global turnover for severe violations including manipulation, exploitati...

Explore the KPMG AI Risk and Controls Guide—a practical framework to help organizations manage AI risks ethically, ensure compliance, and build trustworthy, res...