AI Transparency

AI transparency is the practice of making the workings and decision-making processes of artificial intelligence systems comprehensible to stakeholders. Learn it...

AI Explainability refers to the ability to understand and interpret the decisions and predictions made by artificial intelligence systems. As AI models become m...

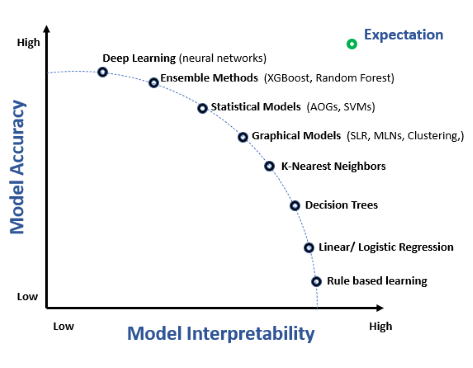

Model interpretability refers to the ability to understand, explain, and trust the predictions and decisions made by machine learning models. It is critical in ...

Transparency in Artificial Intelligence (AI) refers to the openness and clarity with which AI systems operate, including their decision-making processes, algori...

Explainable AI (XAI) is a suite of methods and processes designed to make the outputs of AI models understandable to humans, fostering transparency, interpretab...