Large Language Models and GPU Requirements

Discover the essential GPU requirements for Large Language Models (LLMs), including training vs inference needs, hardware specifications, and choosing the right...

Explore the top large language models (LLMs) for coding in June 2025. This complete educational guide provides insights, comparisons, and practical tips for stu...

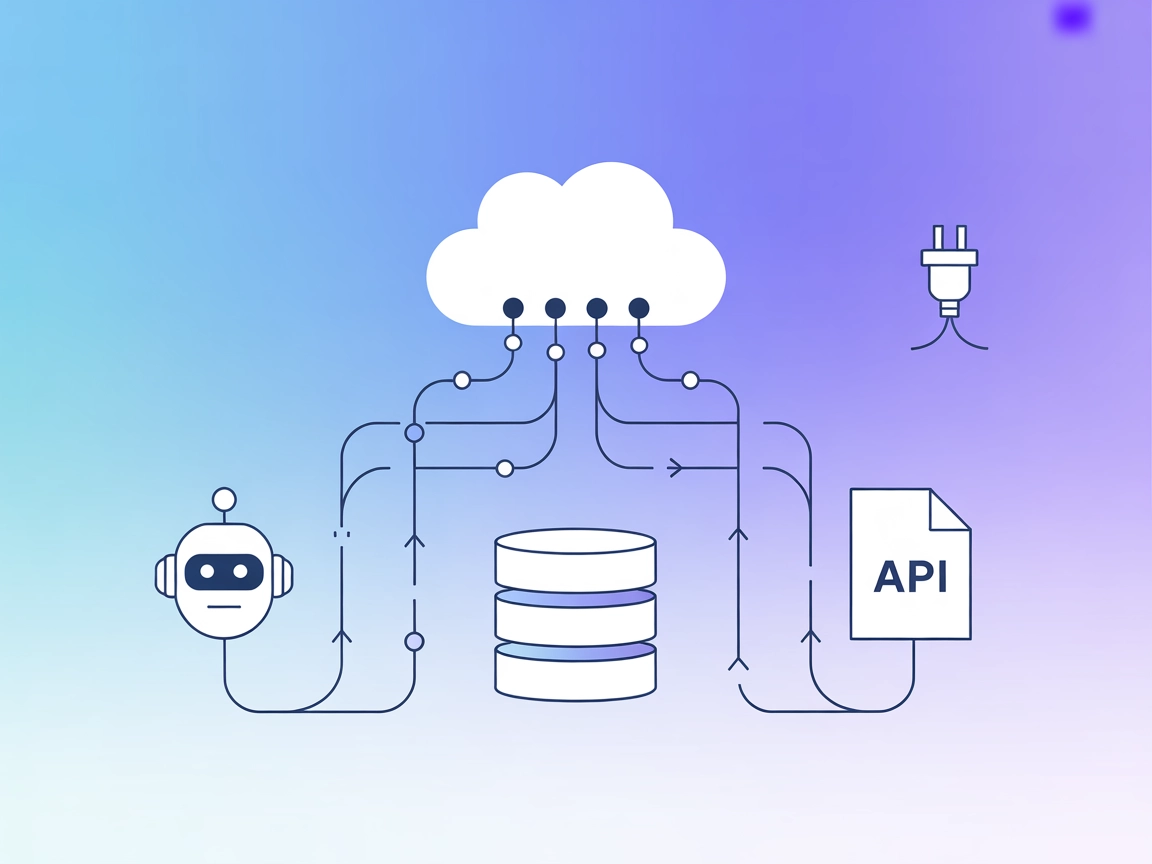

Eunomia MCP Server is an extension of the Eunomia framework that orchestrates data governance policies—like PII detection and access control—across text streams...

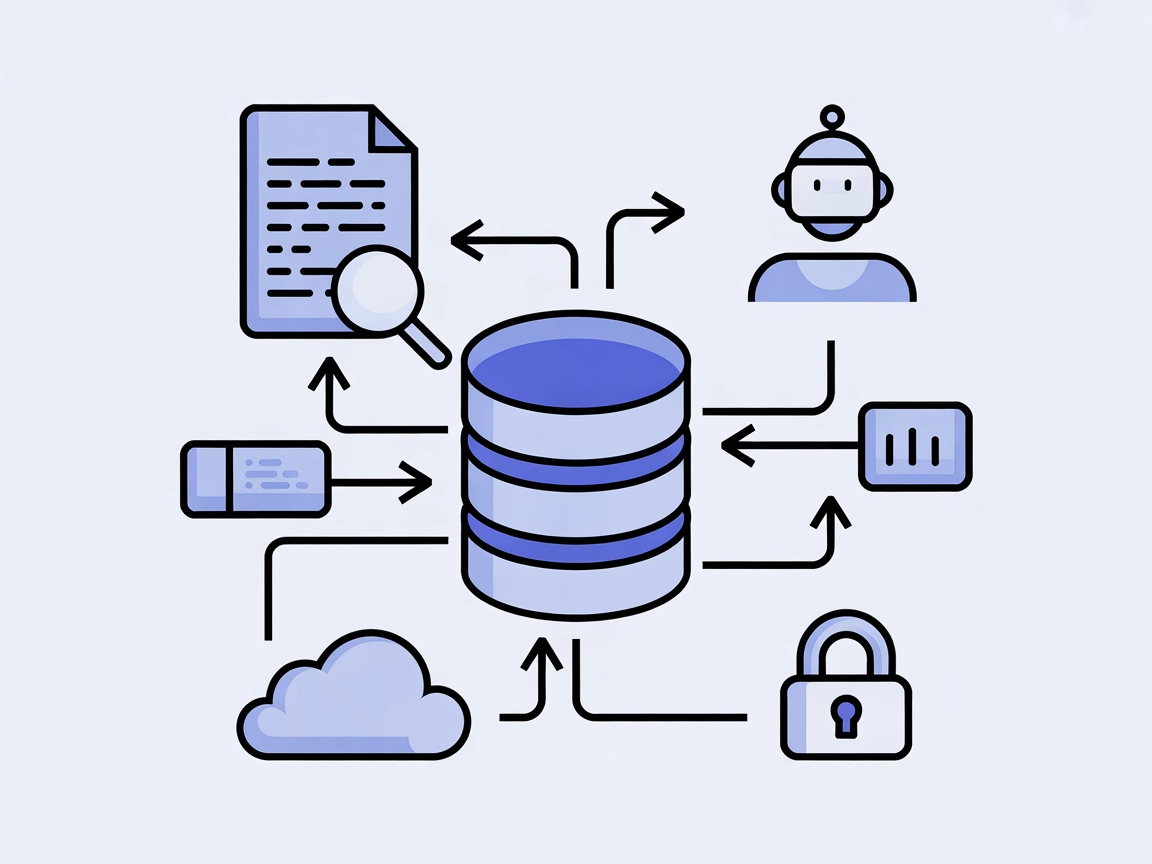

The MongoDB MCP Server enables seamless integration between AI assistants and MongoDB databases, allowing for direct database management, query automation, and ...

The Nile MCP Server bridges AI assistants with the Nile database platform, enabling seamless automation of database operations, credential management, SQL execu...

The Astra DB MCP Server bridges Large Language Models (LLMs) and Astra DB, enabling secure, automated data querying and management. It empowers AI-driven workfl...

DocsMCP is a Model Context Protocol (MCP) server that empowers Large Language Models (LLMs) with real-time access to both local and remote documentation sources...

The Linear MCP Server connects Linear’s project management platform with AI assistants and LLMs, empowering teams to automate issue management, search, updates,...

The LlamaCloud MCP Server connects AI assistants to multiple managed indexes on LlamaCloud, enabling enterprise-scale document retrieval, search, and knowledge ...

The mcp-local-rag MCP Server enables privacy-respecting, local Retrieval-Augmented Generation (RAG) web search for LLMs. It allows AI assistants to access, embe...

The nx-mcp MCP Server bridges Nx monorepo build tools with AI assistants and LLM workflows via the Model Context Protocol. Automate workspace management, run Nx...

The Serper MCP Server bridges AI assistants with Google Search via the Serper API, enabling real-time web, image, video, news, maps, reviews, shopping, and acad...

Home Assistant MCP Server (hass-mcp) bridges AI assistants with your Home Assistant smart home, enabling LLMs to query, control, and summarize devices and autom...

The any-chat-completions-mcp MCP Server connects FlowHunt and other tools to any OpenAI SDK-compatible Chat Completion API. It enables seamless integration of m...

The Browserbase MCP Server enables secure, cloud-based browser automation for AI and LLMs, allowing powerful web interaction, data extraction, UI testing, and a...

Chat MCP is a cross-platform desktop chat application that leverages the Model Context Protocol (MCP) to interface with various Large Language Models (LLMs). It...

The Couchbase MCP Server connects AI agents and LLMs directly to Couchbase clusters, enabling seamless natural language database operations, automated managemen...

The Firecrawl MCP Server supercharges FlowHunt and AI assistants with advanced web scraping, deep research, and content discovery capabilities. Seamless integra...

The Microsoft Fabric MCP Server enables seamless AI-driven interaction with Microsoft Fabric's data engineering and analytics ecosystem. It supports workspace m...

The OpenAPI Schema MCP Server exposes OpenAPI specifications to Large Language Models, enabling API exploration, schema search, code generation, and security re...

The Patronus MCP Server streamlines LLM evaluation and experimentation for developers and researchers, providing automation, batch processing, and robust setup ...

The QGIS MCP Server bridges QGIS Desktop with LLMs for AI-driven automation—enabling project, layer, and algorithm control, as well as Python code execution dir...

The YDB MCP Server connects AI assistants and LLMs with YDB databases, enabling natural language access, querying, and management of YDB instances. It empowers ...

The Mesh Agent MCP Server connects AI assistants with external data sources, APIs, and services, bridging large language models (LLMs) with real-world informati...

Integrate the Vectorize MCP Server with FlowHunt to enable advanced vector retrieval, semantic search, and text extraction for powerful AI-driven workflows. Eff...

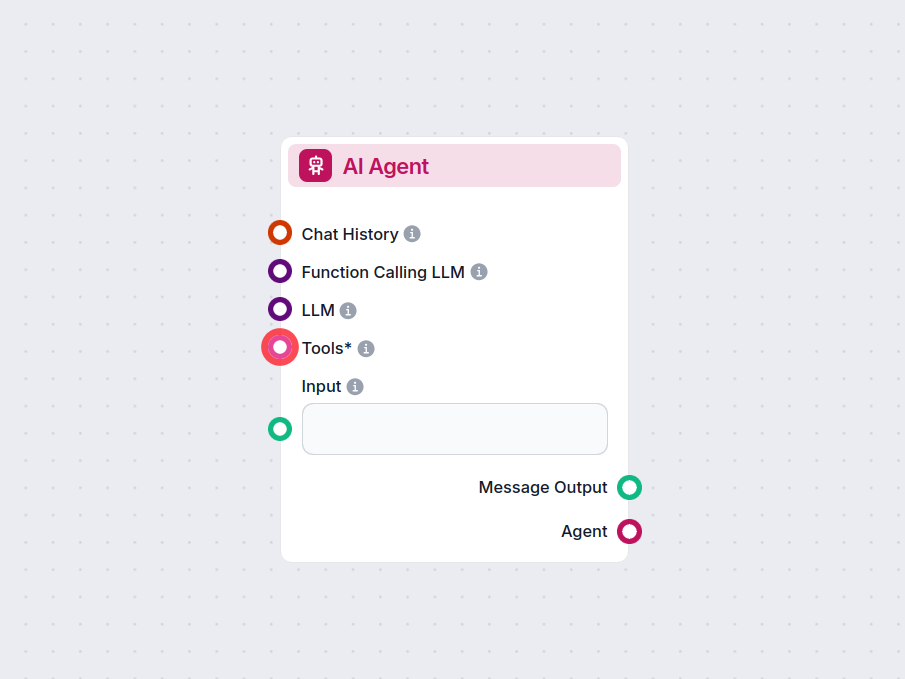

The AI Agent component in FlowHunt empowers your workflows with autonomous decision-making and tool-using capabilities. It leverages large language models and c...

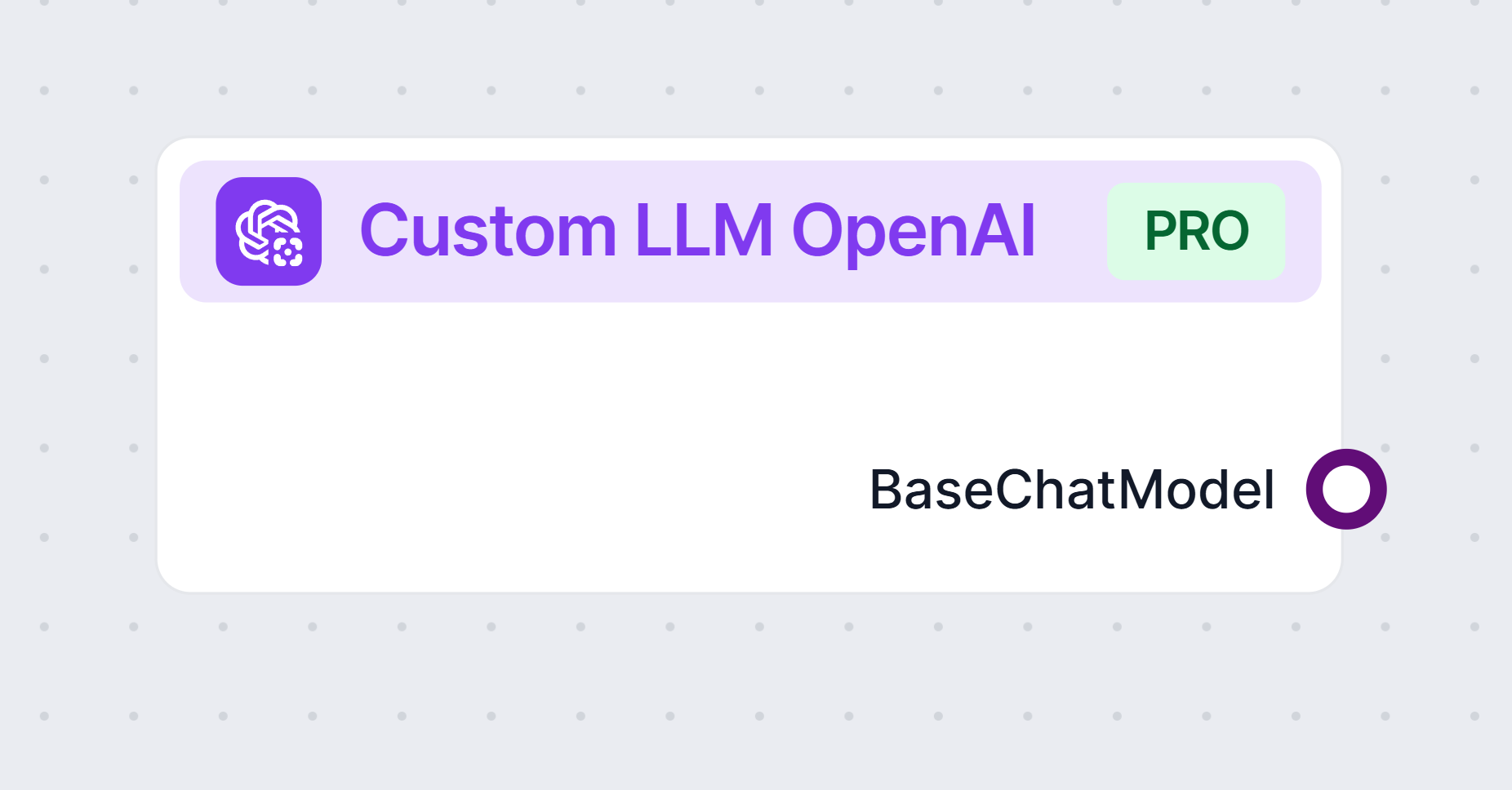

Unlock the power of custom language models with the Custom OpenAI LLM component in FlowHunt. Seamlessly integrate your own OpenAI-compatible models—including Ji...

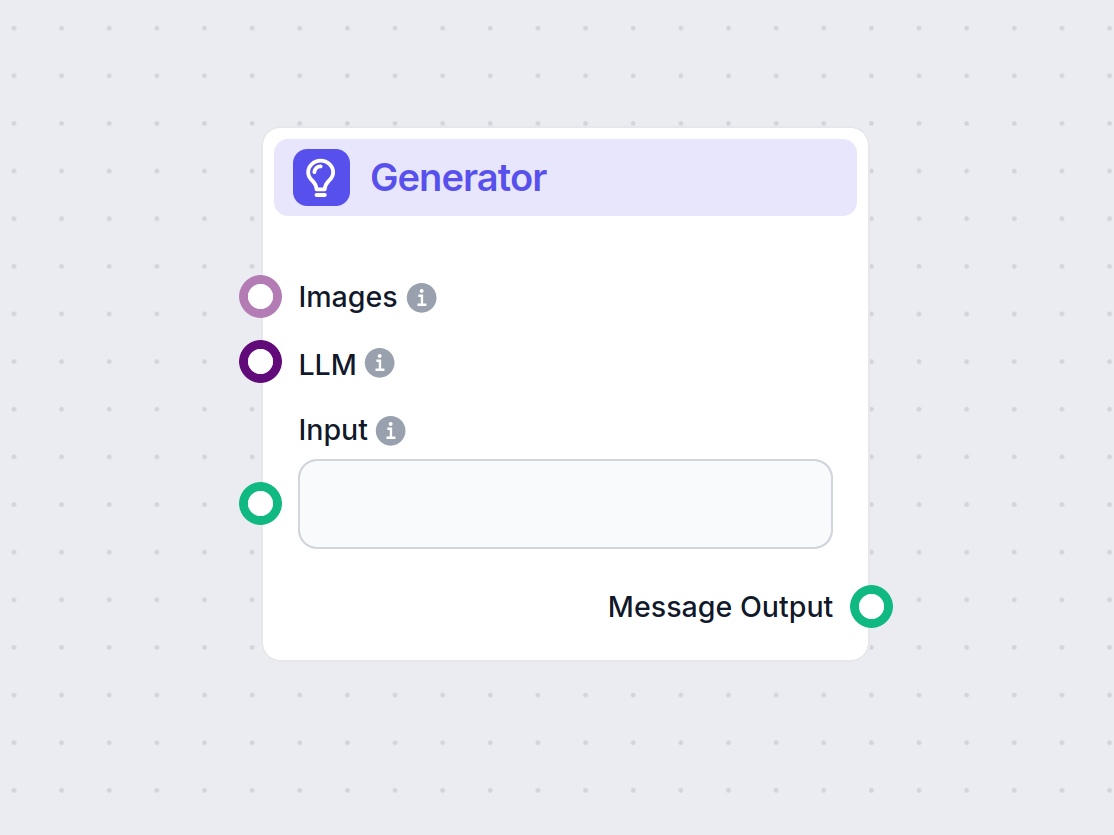

Explore the Generator component in FlowHunt: powerful AI-driven text generation using your chosen LLM. Effortlessly create dynamic chatbot responses by com...

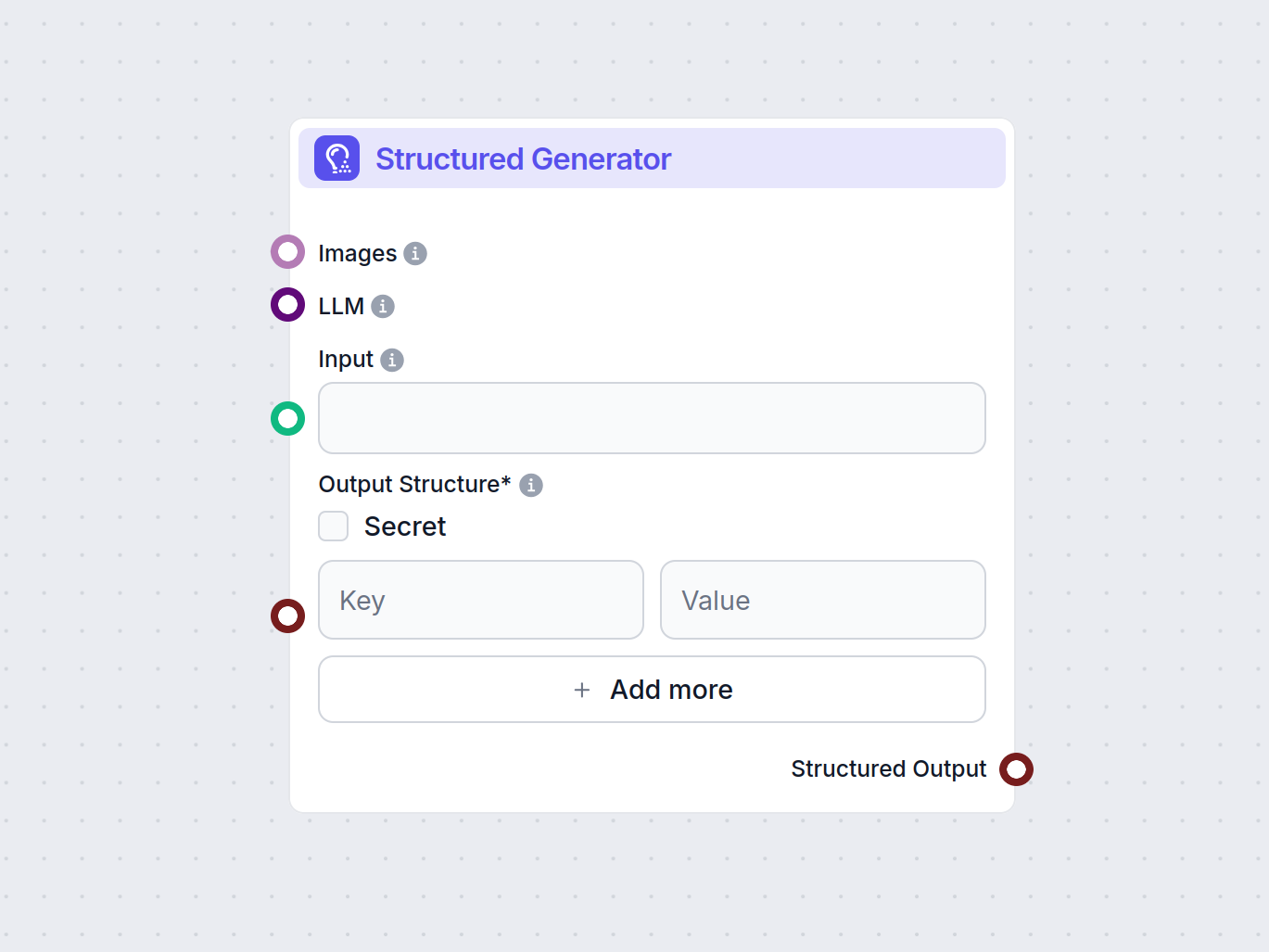

The Structured Output Generator component lets you create precise, structured data from any input prompt using your chosen LLM. Define the exact data fiel...

Agentic RAG (Agentic Retrieval-Augmented Generation) is an advanced AI framework that integrates intelligent agents into traditional RAG systems, enabling auton...

Explore the thought processes of AI Agents in this comprehensive evaluation of GPT-4o. Discover how it performs across tasks like content generation, problem-so...

AI is revolutionizing entertainment, enhancing gaming, film, and music through dynamic interactions, personalization, and real-time content evolution. It powers...

Cache Augmented Generation (CAG) is a novel approach to enhancing large language models (LLMs) by preloading knowledge as precomputed key-value caches, enabling...

Learn more about Claude by Anthropic. Understand what it is used for, the different models offered, and its unique features.

Discover the costs associated with training and deploying Large Language Models (LLMs) like GPT-3 and GPT-4, including computational, energy, and hardware expen...

Learn to build an AI JavaScript game generator in FlowHunt using the Tool Calling Agent, Prompt node, and Anthropic LLM. Step-by-step guide based on flow diagra...

FlowHunt 2.4.1 introduces major new AI models including Claude, Grok, Llama, Mistral, DALL-E 3, and Stable Diffusion, expanding your options for experimentation...

Learn more about Grok, an advanced AI chatbot from xAI, the company led by Elon Musk. Discover its real-time data access, key features, benchmarks, use cases, and ho...

Explore the advanced capabilities of Llama 3.3 70B Versatile 128k as an AI Agent. This in-depth review examines its reasoning, problem-solving, and creative ski...

Instruction tuning is a technique in AI that fine-tunes large language models (LLMs) on instruction-response pairs, enhancing their ability to follow human inst...

LangChain is an open-source framework for developing applications powered by Large Language Models (LLMs), streamlining the integration of powerful LLMs like Op...

LangGraph is an advanced library for building stateful, multi-actor applications using Large Language Models (LLMs). Developed by LangChain Inc, it extends Lang...

FlowHunt supports dozens of AI models, including Claude models by Anthropic. Learn how to use Claude in your AI tools and chatbots with customizable settings fo...

FlowHunt supports dozens of AI models, including the revolutionary DeepSeek models. Here's how to use DeepSeek in your AI tools and chatbots.

FlowHunt supports dozens of AI models, including Google Gemini. Learn how to use Gemini in your AI tools and chatbots, switch between models, and control advanc...

FlowHunt supports dozens of text generation models, including Meta's Llama models. Learn how to integrate Llama into your AI tools and chatbots, customize setti...

FlowHunt supports dozens of AI text models, including models by Mistral. Here's how to use Mistral in your AI tools and chatbots.

FlowHunt supports dozens of text generation models, including models by OpenAI. Here's how to use ChatGPT in your AI tools and chatbots.

FlowHunt supports dozens of text generation models, including models by xAI. Here's how to use the xAI models in your AI tools and chatbots.

Discover how MIT researchers are advancing large language models (LLMs) with new insights into human beliefs, novel anomaly detection tools, and strategies for ...

Learn how FlowHunt used one-shot prompting to teach LLMs to find and embed relevant YouTube videos in WordPress. This technique ensures perfect iframe embeds, s...

Perplexity AI is an advanced AI-powered search engine and conversational tool that leverages NLP and machine learning to deliver precise, contextual answers wit...

In the realm of LLMs, a prompt is input text that guides the model’s output. Learn how effective prompts, including zero-, one-, few-shot, and chain-of-thought ...

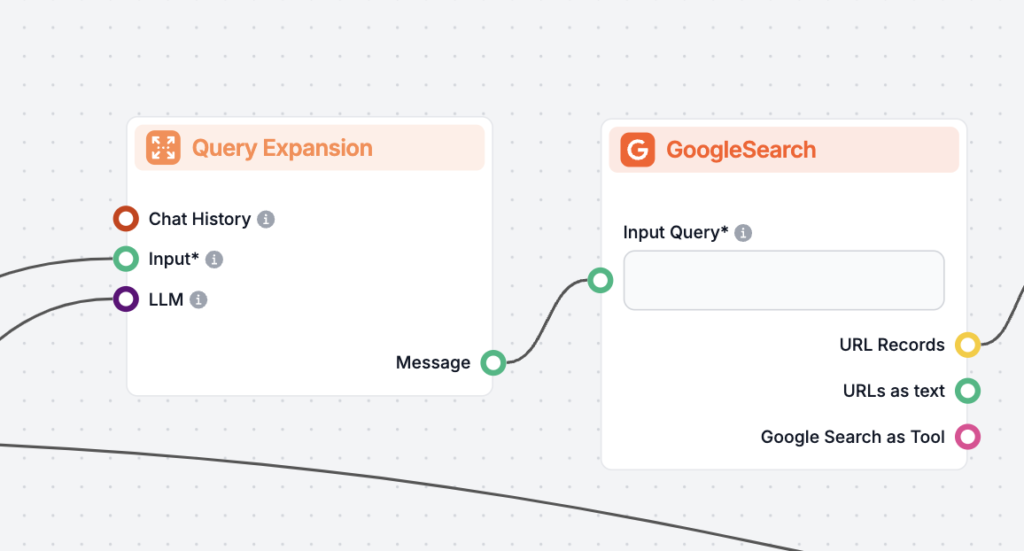

Query Expansion is the process of enhancing a user’s original query by adding terms or context, improving document retrieval for more accurate and contextually ...

Question Answering with Retrieval-Augmented Generation (RAG) combines information retrieval and natural language generation to enhance large language models (LL...

Reduce AI hallucinations and ensure accurate chatbot responses by using FlowHunt's Schedule feature. Discover the benefits, practical use cases, and step-by-ste...

Text Generation with Large Language Models (LLMs) refers to the advanced use of machine learning models to produce human-like text from prompts. Explore how LLM...

Learn how to build robust, production-ready AI agents with our comprehensive 12-factor methodology. Discover best practices for natural language processing, con...

A token in the context of large language models (LLMs) is a sequence of characters that the model converts into numeric representations for efficient processing...