Replicate MCP Server Integration

FlowHunt's Replicate MCP Server connector allows seamless access to Replicate's vast AI model hub, enabling developers to search, explore, and run machine learn...

Integrate AI assistants with Label Studio using the Label Studio MCP Server. Seamlessly manage labeling projects, tasks, and predictions through standardized MC...

Explore 3D Reconstruction: Learn how this advanced process captures real-world objects or environments and transforms them into detailed 3D models using techniq...

Activation functions are fundamental to artificial neural networks, introducing non-linearity and enabling learning of complex patterns. This article explores t...

Adaptive learning is a transformative educational method that leverages technology to create a customized learning experience for each student. Using AI, machin...

Adjusted R-squared is a statistical measure used to evaluate the goodness of fit of a regression model, accounting for the number of predictors to avoid overfit...

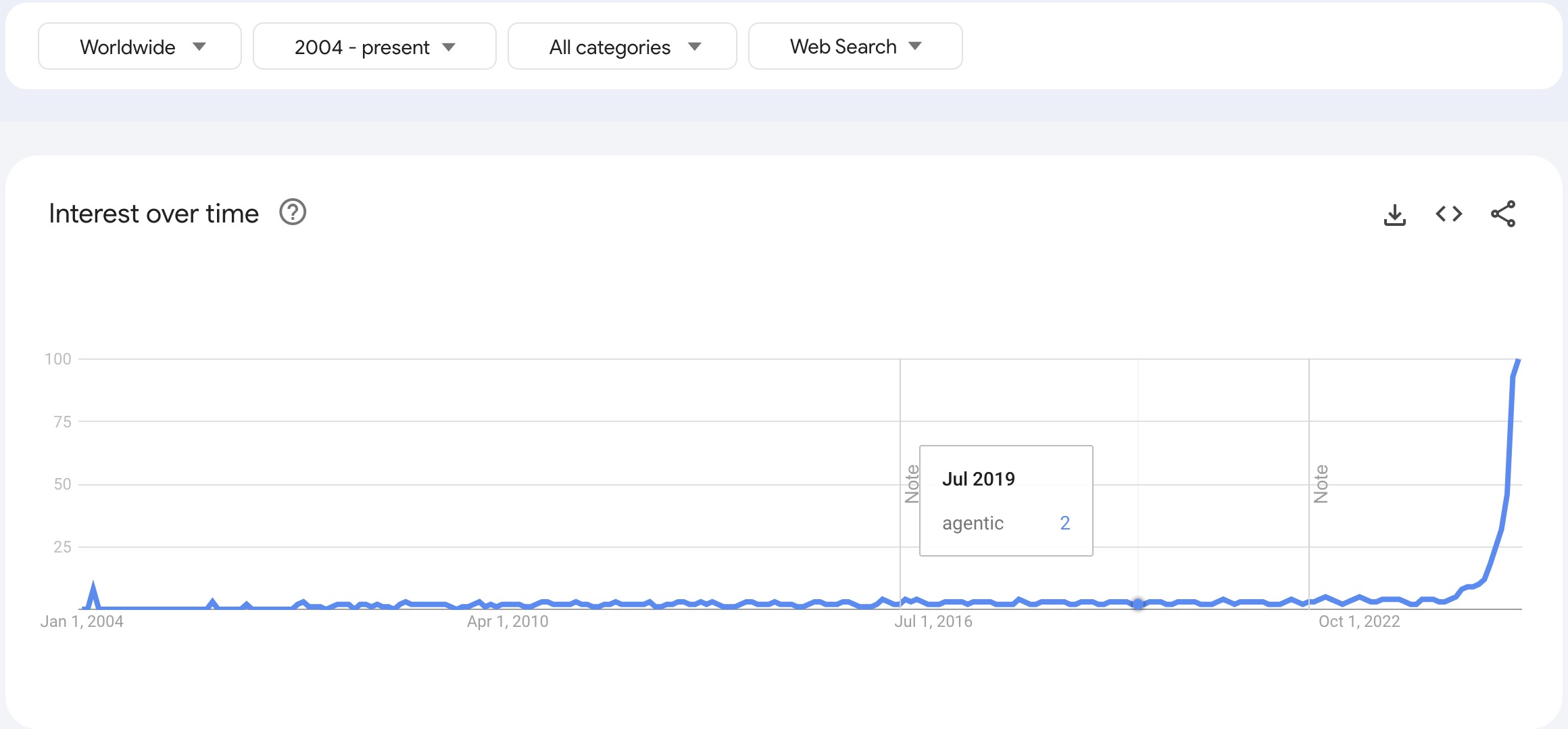

Agentic AI is an advanced branch of artificial intelligence that empowers systems to act autonomously, make decisions, and accomplish complex tasks with minimal...

Explore how Artificial Intelligence impacts human rights, balancing benefits like improved access to services with risks such as privacy violations and bias. Le...

An AI Automation System integrates artificial intelligence technologies with automation processes, enhancing traditional automation with cognitive abilities lik...

An AI Consultant bridges AI technology with business strategy, guiding companies in AI integration to drive innovation, efficiency, and growth. Learn about thei...

AI Content Creation leverages artificial intelligence to automate and enhance digital content generation, curation, and personalization across text, visuals, an...

An AI Data Analyst synergizes traditional data analysis skills with artificial intelligence (AI) and machine learning (ML) to extract insights, predict trends, ...

Ideogram.ai is a powerful tool that democratizes AI image creation, making it accessible to a wide range of users. Explore its feature-rich, user-friendly inter...

Artificial Intelligence (AI) in cybersecurity leverages AI technologies such as machine learning and NLP to detect, prevent, and respond to cyber threats by aut...

AI is revolutionizing entertainment, enhancing gaming, film, and music through dynamic interactions, personalization, and real-time content evolution. It powers...

Artificial Intelligence (AI) in healthcare leverages advanced algorithms and technologies like machine learning, NLP, and deep learning to analyze complex medic...

Artificial Intelligence (AI) in manufacturing is transforming production by integrating advanced technologies to boost productivity, efficiency, and decision-ma...

Artificial Intelligence (AI) in retail leverages advanced technologies such as machine learning, NLP, computer vision, and robotics to enhance customer experien...

Discover the importance of AI model accuracy and stability in machine learning. Learn how these metrics impact applications like fraud detection, medical diagno...

Discover a scalable Python solution for invoice data extraction using AI-based OCR. Learn how to convert PDFs, upload images to FlowHunt’s API, and retrieve str...

AI Project Management in R&D refers to the strategic application of artificial intelligence (AI) and machine learning (ML) technologies to enhance the managemen...

AI Prototype Development is the iterative process of designing and creating preliminary versions of AI systems, enabling experimentation, validation, and resour...

An AI Quality Assurance Specialist ensures the accuracy, reliability, and performance of AI systems by developing test plans, executing tests, identifying issue...

Discover what an AI SDR is and how Artificial Intelligence Sales Development Representatives automate prospecting, lead qualification, outreach, and follow-ups,...

AI Search is a semantic or vector-based search methodology that uses machine learning models to understand the intent and contextual meaning behind search queri...

Discover the role of an AI Systems Engineer: design, develop, and maintain AI systems, integrate machine learning, manage infrastructure, and drive AI automatio...

AI technology trends encompass current and emerging advancements in artificial intelligence, including machine learning, large language models, multimodal capab...

Explore the top AI trends for 2025, including the rise of AI agents and AI crews, and discover how these innovations are transforming industries with automation...

AI-based student feedback leverages artificial intelligence to deliver personalized, real-time evaluative insights and suggestions to students. Utilizing machin...

An AI-driven startup is a business that centers its operations, products, or services around artificial intelligence technologies to innovate, automate, and gai...

AI-powered marketing leverages artificial intelligence technologies like machine learning, NLP, and predictive analytics to automate tasks, gain customer insigh...

Algorithmic transparency refers to the clarity and openness regarding the inner workings and decision-making processes of algorithms. It's crucial in AI and mac...

Amazon SageMaker is a fully managed machine learning (ML) service from AWS that enables data scientists and developers to quickly build, train, and deploy machi...

Anaconda is a comprehensive, open-source distribution of Python and R, designed to simplify package management and deployment for scientific computing, data sci...

Anomaly detection is the process of identifying data points, events, or patterns that deviate from the expected norm within a dataset, often leveraging AI and m...

The Area Under the Curve (AUC) is a fundamental metric in machine learning used to evaluate the performance of binary classification models. It quantifies the o...

Artificial Neural Networks (ANNs) are a subset of machine learning algorithms modeled after the human brain. These computational models consist of interconnecte...

Artificial Superintelligence (ASI) is a theoretical AI that surpasses human intelligence in all domains, with self-improving, multimodal capabilities. Discover ...

Auto-classification automates content categorization by analyzing properties and assigning tags using technologies like machine learning, NLP, and semantic anal...

Explore autonomous vehicles—self-driving cars that use AI, sensors, and connectivity to operate without human input. Learn about their key technologies, AI’s ro...

Backpropagation is an algorithm for training artificial neural networks by adjusting weights to minimize prediction error. Learn how it works, its steps, and it...

Bagging, short for Bootstrap Aggregating, is a fundamental ensemble learning technique in AI and machine learning that improves model accuracy and robustness by...

Batch normalization is a transformative technique in deep learning that significantly enhances the training process of neural networks by addressing internal co...

A Bayesian Network (BN) is a probabilistic graphical model that represents variables and their conditional dependencies via a Directed Acyclic Graph (DAG). Baye...

Discover BERT (Bidirectional Encoder Representations from Transformers), an open-source machine learning framework developed by Google for natural language proc...

Explore bias in AI: understand its sources, impact on machine learning, real-world examples, and strategies for mitigation to build fair and reliable AI systems...

BigML is a machine learning platform designed to simplify the creation and deployment of predictive models. Founded in 2011, its mission is to make machine lear...

Boosting is a machine learning technique that combines the predictions of multiple weak learners to create a strong learner, improving accuracy and handling com...

Caffe is an open-source deep learning framework from BVLC, optimized for speed and modularity in building convolutional neural networks (CNNs). Widely used in i...

Causal inference is a methodological approach used to determine the cause-and-effect relationships between variables, crucial in sciences for understanding caus...

Chainer is an open-source deep learning framework offering a flexible, intuitive, and high-performance platform for neural networks, featuring dynamic define-by...

ChatGPT is a state-of-the-art AI chatbot developed by OpenAI, utilizing advanced Natural Language Processing (NLP) to enable human-like conversations and assist...

An AI classifier is a machine learning algorithm that assigns class labels to input data, categorizing information into predefined classes based on learned patt...

Find out more about Anthropic's Claude 3.5 Sonnet: how it compares to other models, its strengths, weaknesses, and applications in areas like reasoning, coding,...

Clearbit is a powerful data activation platform that helps businesses, especially sales and marketing teams, enrich customer data, personalize marketing efforts...

Clustering is an unsupervised machine learning technique that groups similar data points together, enabling exploratory data analysis without labeled data. Lear...

Cognitive computing represents a transformative technology model that simulates human thought processes in complex scenarios. It integrates AI and signal proces...

Computer Vision is a field within artificial intelligence (AI) focused on enabling computers to interpret and understand the visual world. By leveraging digital...

A confusion matrix is a machine learning tool for evaluating the performance of classification models, detailing true/false positives and negatives to provide i...

Convergence in AI refers to the process by which machine learning and deep learning models attain a stable state through iterative learning, ensuring accurate p...

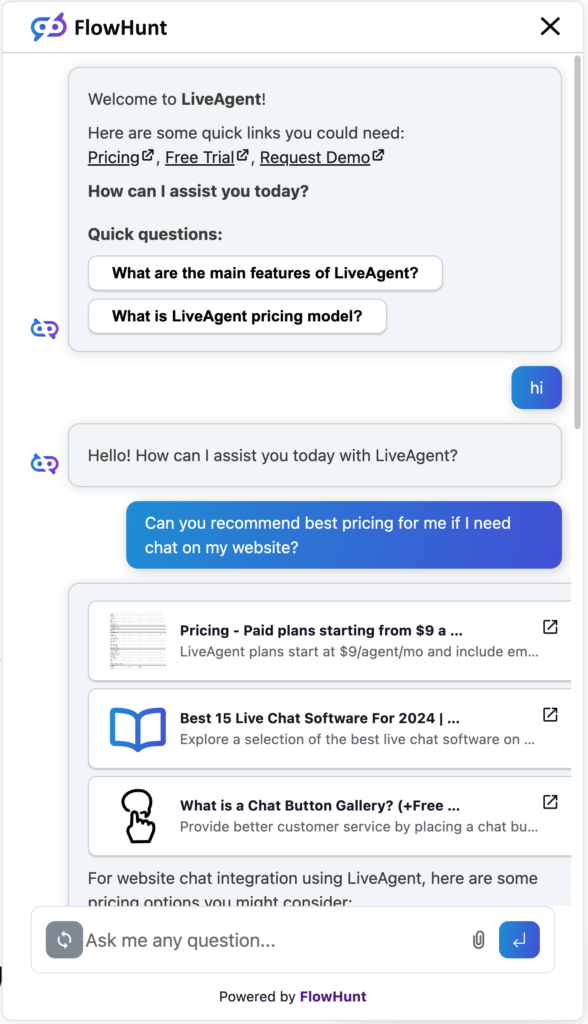

Conversational AI refers to technologies that enable computers to simulate human conversations using NLP, machine learning, and other language technologies. It ...

Coreference resolution is a fundamental NLP task that identifies and links expressions in text referring to the same entity, crucial for machine understanding i...

A Corpus (plural: corpora) in AI refers to a large, structured set of texts or audio data used for training and evaluating AI models. Corpora are essential for ...

Discover the costs associated with training and deploying Large Language Models (LLMs) like GPT-3 and GPT-4, including computational, energy, and hardware expen...

Cross-entropy is a pivotal concept in both information theory and machine learning, serving as a metric to measure the divergence between two probability distri...

Cross-validation is a statistical method used to evaluate and compare machine learning models by partitioning data into training and validation sets multiple ti...

A knowledge cutoff date is the specific point in time after which an AI model no longer has updated information. Learn why these dates matter, how they affect A...

Data cleaning is the crucial process of detecting and fixing errors or inconsistencies in data to enhance its quality, ensuring accuracy, consistency, and relia...

Data mining is a sophisticated process of analyzing vast sets of raw data to uncover patterns, relationships, and insights that can inform business strategies a...

Data scarcity refers to insufficient data for training machine learning models or comprehensive analysis, hindering the development of accurate AI systems. Disc...

Data validation in AI refers to the process of assessing and ensuring the quality, accuracy, and reliability of data used to train and test AI models. It involv...

DataRobot is a comprehensive AI platform that simplifies the creation, deployment, and management of machine learning models, making predictive and generative A...

A decision tree is a powerful and intuitive tool for decision-making and predictive analysis, used in both classification and regression tasks. Its tree-like st...

A Decision Tree is a supervised learning algorithm used for making decisions or predictions based on input data. It is visualized as a tree-like structure where...

Explore the world of AI agent models with a comprehensive analysis of 20 cutting-edge systems. Discover how they think, reason, and perform in various tasks, an...

A Deep Belief Network (DBN) is a sophisticated generative model utilizing deep architectures and Restricted Boltzmann Machines (RBMs) to learn hierarchical data...

Deep Learning is a subset of machine learning in artificial intelligence (AI) that mimics the workings of the human brain in processing data and creating patter...

Deepfakes are a form of synthetic media where AI is used to generate highly realistic but fake images, videos, or audio recordings. The term “deepfake” is a por...

Dependency Parsing is a syntactic analysis method in NLP that identifies grammatical relationships between words, forming tree-like structures essential for app...

Discover how 'Did You Mean' (DYM) in NLP identifies and corrects errors in user input, such as typos or misspellings, and suggests alternatives to enhance user ...

Dimensionality reduction is a pivotal technique in data processing and machine learning, reducing the number of input variables in a dataset while preserving es...

Learn about Discriminative AI Models—machine learning models focused on classification and regression by modeling decision boundaries between classes. Understan...

DL4J, or DeepLearning4J, is an open-source, distributed deep learning library for the Java Virtual Machine (JVM). Part of the Eclipse ecosystem, it enables scal...

Discover how AI is transforming SEO by automating keyword research, content optimization, and user engagement. Explore key strategies, tools, and future trends ...

Dropout is a regularization technique in AI, especially neural networks, that combats overfitting by randomly disabling neurons during training, promoting robus...

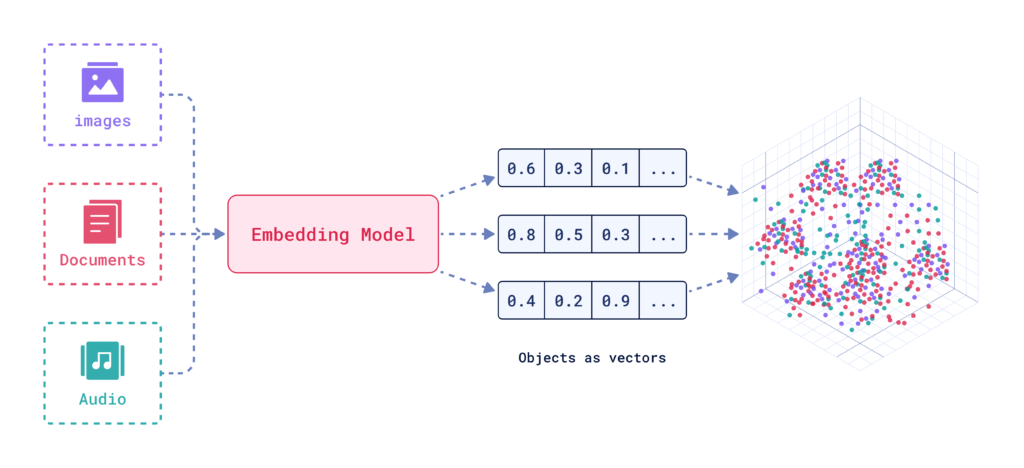

An embedding vector is a dense numerical representation of data in a multidimensional space, capturing semantic and contextual relationships. Learn how embeddin...

AI Explainability refers to the ability to understand and interpret the decisions and predictions made by artificial intelligence systems. As AI models become m...

The F-Score, also known as the F-Measure or F1 Score, is a statistical metric used to evaluate the accuracy of a test or model, particularly in binary classific...

Explore how Feature Engineering and Extraction enhance AI model performance by transforming raw data into valuable insights. Discover key techniques like featur...

Feature extraction transforms raw data into a reduced set of informative features, enhancing machine learning by simplifying data, improving model performance, ...

Federated Learning is a collaborative machine learning technique where multiple devices train a shared model while keeping training data localized. This approac...

Few-Shot Learning is a machine learning approach that enables models to make accurate predictions using only a small number of labeled examples. Unlike traditio...

AI in finance fraud detection refers to the application of artificial intelligence technologies to identify and prevent fraudulent activities within financial s...

Financial forecasting is a sophisticated analytical process used to predict a company’s future financial outcomes by analyzing historical data, market trends, a...

Model fine-tuning adapts pre-trained models for new tasks by making minor adjustments, reducing data and resource needs. Learn how fine-tuning leverages transfe...

The Flux AI Model by Black Forest Labs is an advanced text-to-image generation system that converts natural language prompts into highly detailed, photorealisti...

A Foundation AI Model is a large-scale machine learning model trained on vast amounts of data, adaptable to a wide range of tasks. Foundation models have revolu...

Fraud Detection with AI leverages machine learning to identify and mitigate fraudulent activities in real time. It enhances accuracy, scalability, and cost-effe...

Garbage In, Garbage Out (GIGO) highlights how the quality of output from AI and other systems is directly dependent on input quality. Learn about its implicatio...

Generalization error measures how well a machine learning model predicts unseen data, balancing bias and variance to ensure robust and reliable AI applications....

Showing 1 to 100 of 211 results