Model Interpretability

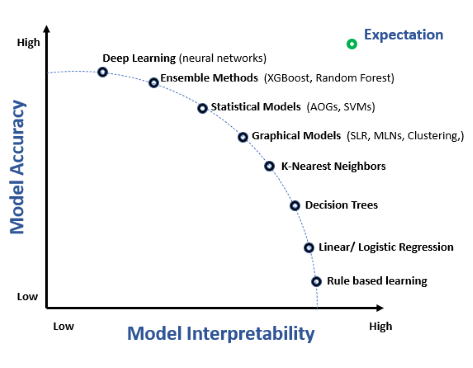

Model interpretability refers to the ability to understand, explain, and trust the predictions and decisions made by machine learning models. It is critical in ...
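
Here is a small illustration of what "explaining a prediction" can look like. This is a minimal sketch that assumes a plain linear model. Each feature's contribution to a single prediction is its weight times its value, and the bias plus all contributions reproduces the model's output. The function name `explain_linear_prediction`, the feature names, the weights and the input are hypothetical. None of them come from the article.

```python
# Minimal sketch (assumes a linear model): explain one prediction by
# decomposing it into per-feature contributions (weight * value).
# All names, weights, and inputs here are hypothetical illustrations.


def explain_linear_prediction(weights, bias, features):
    """Return (prediction, contributions) for a linear model.

    Each contribution is weights[name] * features[name]; the prediction
    equals the bias plus the sum of all contributions.
    """
    contributions = {name: weights[name] * value for name, value in features.items()}
    prediction = bias + sum(contributions.values())
    return prediction, contributions


if __name__ == "__main__":
    weights = {"income": 0.5, "debt": -1.0, "age": 0.1}  # hypothetical weights
    bias = 2.0
    applicant = {"income": 4.0, "debt": 1.5, "age": 30.0}  # hypothetical input

    pred, contribs = explain_linear_prediction(weights, bias, applicant)
    print(f"prediction = {pred:.2f}")
    # List features from largest to smallest absolute contribution.
    for name, c in sorted(contribs.items(), key=lambda kv: abs(kv[1]), reverse=True):
        print(f"{name:>8}: {c:+.2f}")
```

This additive breakdown is exact only for linear models. For nonlinear models, attribution methods approximate a similar per-feature decomposition.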

7 min read
