mcp-local-rag MCP Server

The mcp-local-rag MCP Server enables privacy-respecting, local Retrieval-Augmented Generation (RAG) web search for LLMs. It allows AI assistants to access, embe...

Context Portal (ConPort) is a memory bank MCP server that empowers AI assistants and developer tools by managing structured project context, enabling Retrieval ...

Integrate FlowHunt with Pinecone vector databases using the Pinecone MCP Server. Enable semantic search, Retrieval-Augmented Generation (RAG), and efficient doc...

The RAG Web Browser MCP Server equips AI assistants and LLMs with live web search and content extraction capabilities, enabling retrieval-augmented generation (...

The Agentset MCP Server is an open-source platform enabling Retrieval-Augmented Generation (RAG) with agentic capabilities, allowing AI assistants to connect wi...

The Inkeep MCP Server connects AI assistants and developer tools to up-to-date product documentation managed in Inkeep, enabling direct, secure, and efficient r...

The mcp-rag-local MCP Server empowers AI assistants with semantic memory, enabling storage and retrieval of text passages based on meaning, not just keywords. I...

The Graphlit MCP Server connects FlowHunt and other MCP clients to a unified knowledge platform, enabling seamless ingestion, aggregation, and retrieval of docu...

Vectara MCP Server is an open source bridge between AI assistants and Vectara's Trusted RAG platform, enabling secure, efficient Retrieval-Augmented Generation ...

Cache Augmented Generation (CAG) is a novel approach to enhancing large language models (LLMs) by preloading knowledge as precomputed key-value caches, enabling...

Document grading in Retrieval-Augmented Generation (RAG) is the process of evaluating and ranking documents based on their relevance and quality in response to ...
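
Document grading can be sketched as scoring each retrieved document against the query and keeping only the documents that pass a threshold. The sketch below is a minimal illustration under stated assumptions: the function name `grade_documents`, the term-overlap score, and the 0.5 threshold are all hypothetical stand-ins. Production RAG pipelines often use an LLM or a trained relevance model as the grader instead.

```python
import re


def tokenize(text: str) -> set[str]:
    # Lowercase and split on anything that is not a letter or digit.
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def grade_documents(query: str, docs: list[str], threshold: float = 0.5) -> list[tuple[str, float]]:
    """Hypothetical grader: score = fraction of query terms present in the document."""
    query_terms = tokenize(query)
    graded = []
    for doc in docs:
        score = len(query_terms & tokenize(doc)) / len(query_terms) if query_terms else 0.0
        # Drop documents judged irrelevant (below the threshold).
        if score >= threshold:
            graded.append((doc, score))
    # Rank the surviving documents, highest score first.
    graded.sort(key=lambda pair: pair[1], reverse=True)
    return graded
```

With `threshold=0.5`, a document must contain at least half of the query terms to be passed on to the generator.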

Document reranking is the process of reordering retrieved documents based on their relevance to a user's query, refining search results to prioritize the most p...
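
Reranking is typically a second stage: a fast retriever returns candidates, and a more careful scorer reorders them. The sketch below assumes a hypothetical `rerank` helper that accepts any scoring function; the default `term_frequency_score` is only a stand-in for the cross-encoder or LLM scorers real systems tend to use.

```python
import re
from typing import Callable


def term_frequency_score(query: str, doc: str) -> float:
    # Stand-in relevance score: number of query-term occurrences in the document.
    query_terms = set(re.findall(r"[a-z0-9]+", query.lower()))
    doc_tokens = re.findall(r"[a-z0-9]+", doc.lower())
    return float(sum(1 for tok in doc_tokens if tok in query_terms))


def rerank(
    query: str,
    retrieved: list[str],
    scorer: Callable[[str, str], float] = term_frequency_score,
    top_n: int = 3,
) -> list[str]:
    """Reorder first-stage results by a second-stage score and keep the top_n."""
    # sorted() is stable even with reverse=True, so ties keep the retriever's order.
    reordered = sorted(retrieved, key=lambda doc: scorer(query, doc), reverse=True)
    return reordered[:top_n]
```

Passing the scorer as a parameter keeps the first-stage retriever and the reranking model independent, so either can be swapped without touching the other.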

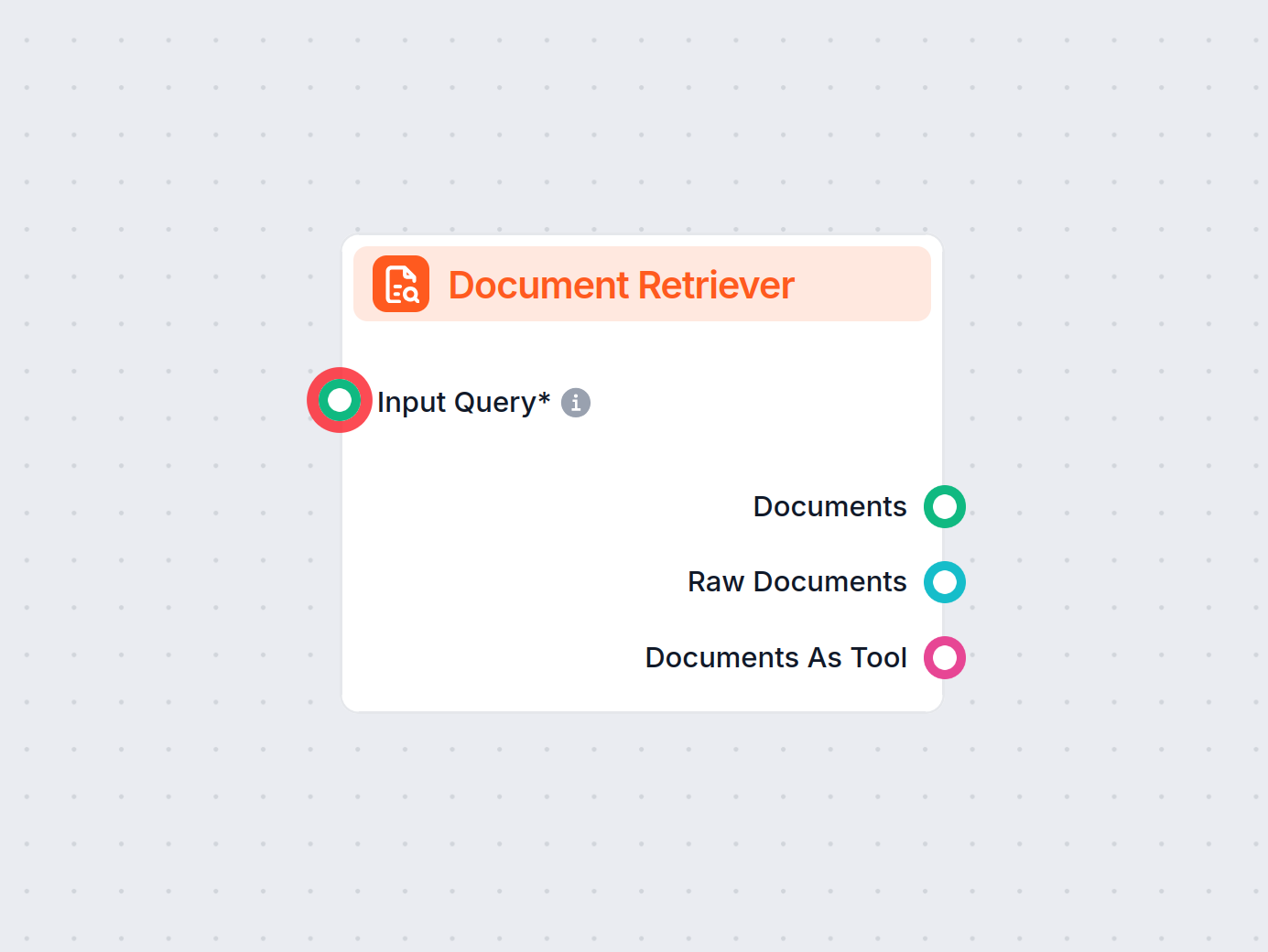

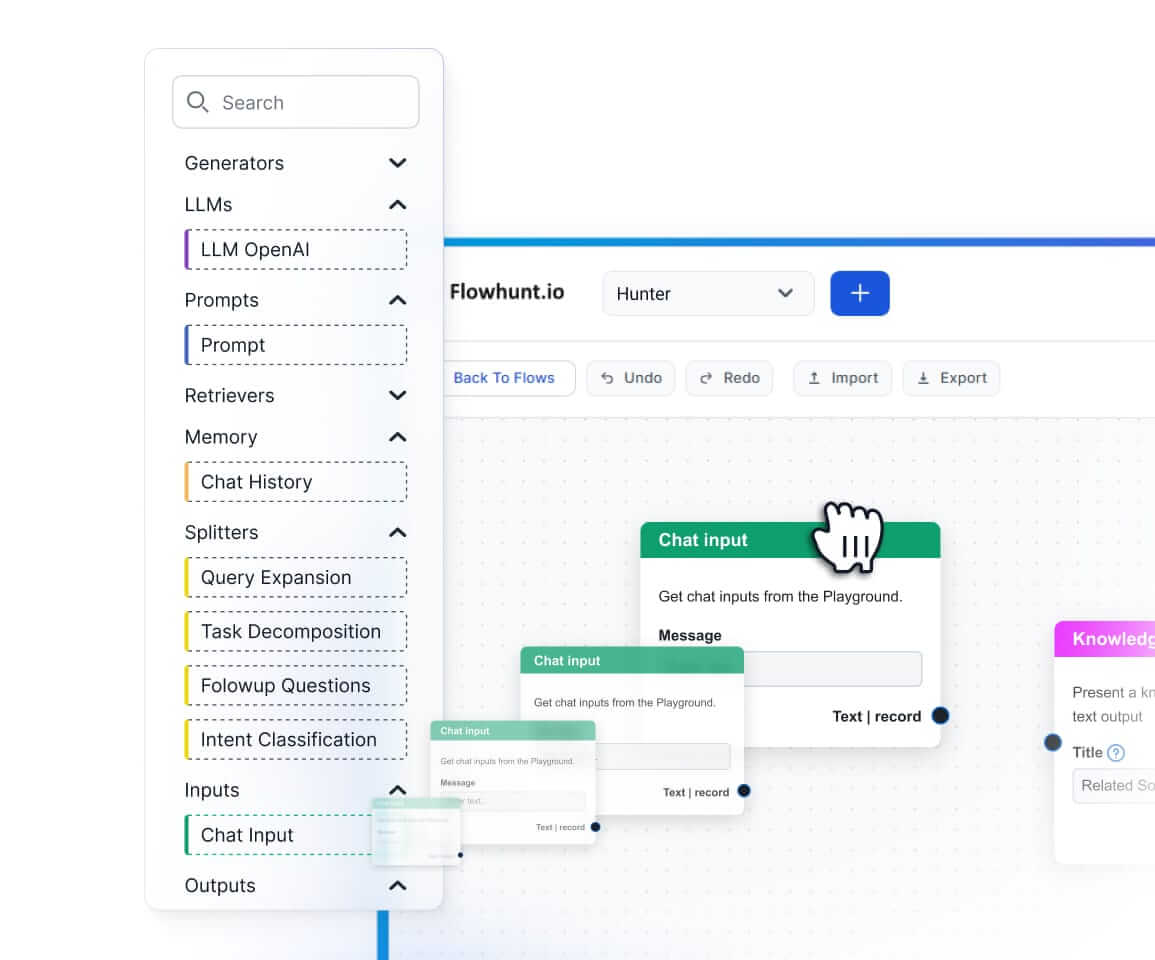

FlowHunt's Document Retriever enhances AI accuracy by connecting generative models to your own up-to-date documents and URLs, ensuring reliable and relevant ans...

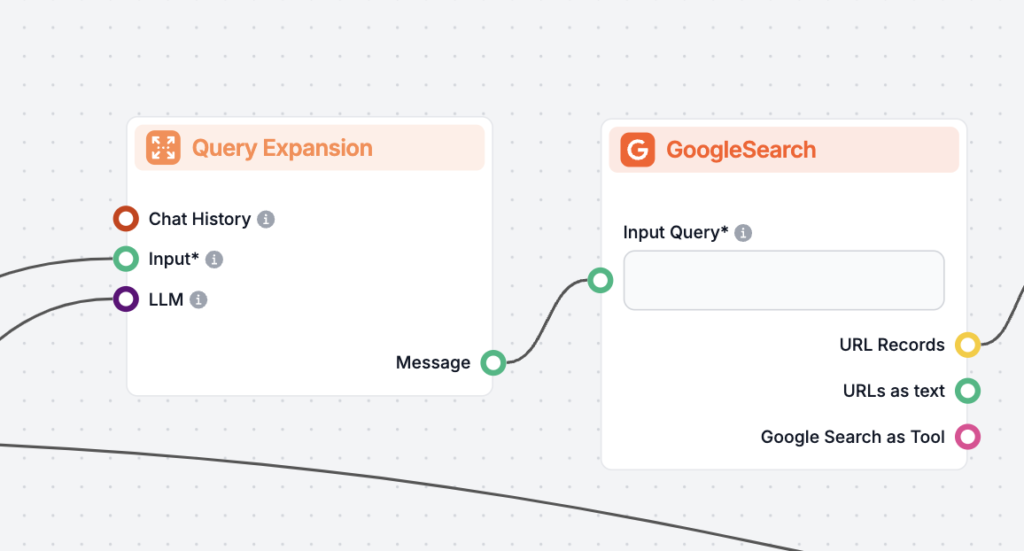

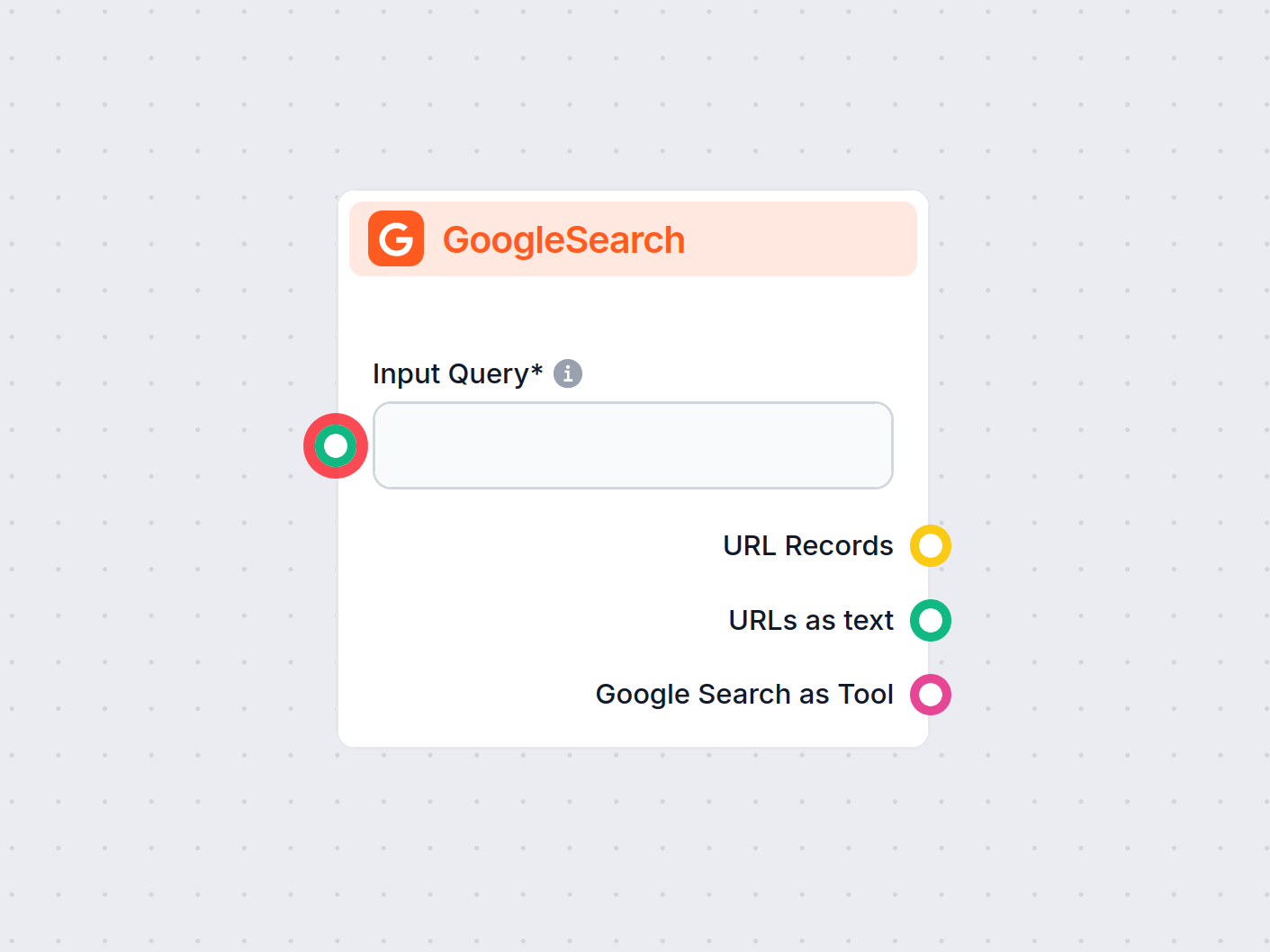

FlowHunt's GoogleSearch component enhances chatbot accuracy using Retrieval-Augmented Generation (RAG) to access up-to-date knowledge from Google. Control resul...

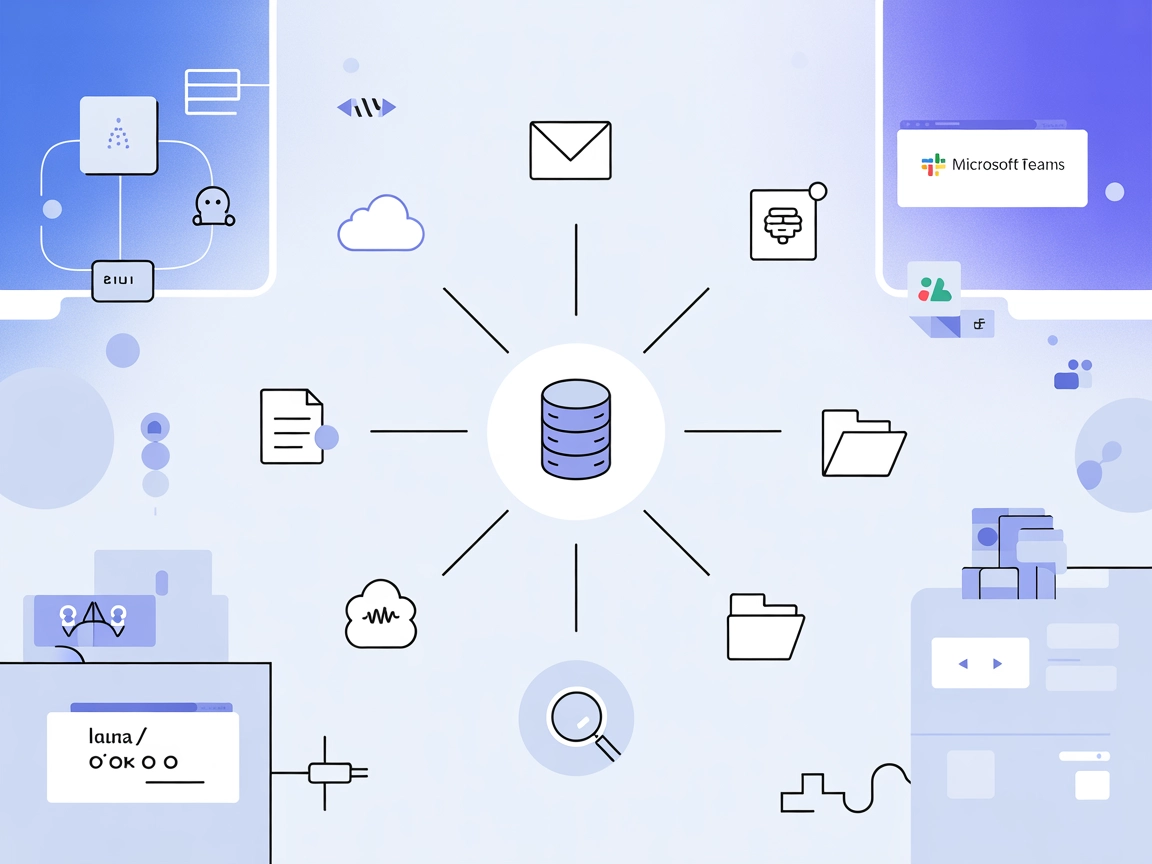

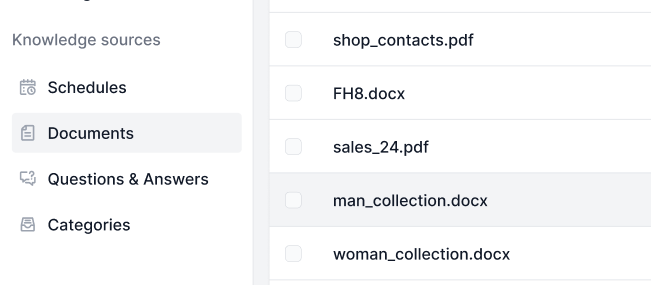

Knowledge Sources make it easy to teach the AI what it needs to know. Discover all the ways to link knowledge with FlowHunt. Easily connect websites, docu...

LazyGraphRAG is an innovative approach to Retrieval-Augmented Generation (RAG), optimizing efficiency and reducing costs in AI-driven data retrieval by combinin...

Boost AI accuracy with RIG! Learn how to create chatbots that fact-check responses using both custom and general data sources for reliable, source-backed answer...

Query Expansion is the process of enhancing a user’s original query by adding terms or context, improving document retrieval for more accurate and contextually ...
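
A minimal sketch of query expansion, assuming a hand-written synonym map: each query term that appears in the map contributes its synonyms to the query. The `expand_query` function and the synonym data are hypothetical; real systems may draw expansions from a thesaurus, embeddings, or an LLM instead.

```python
def expand_query(query: str, synonyms: dict[str, list[str]]) -> str:
    """Append synonyms for each query term found in the (hypothetical) synonym map."""
    terms = query.lower().split()
    expanded = list(terms)
    for term in terms:
        for syn in synonyms.get(term, []):
            # Avoid adding the same term twice.
            if syn not in expanded:
                expanded.append(syn)
    return " ".join(expanded)


synonyms = {"car": ["automobile", "vehicle"], "repair": ["fix"]}
print(expand_query("Car repair manual", synonyms))
```

The expanded query `car repair manual automobile vehicle fix` can now match documents that say "vehicle" or "fix" even though the user never typed those words.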

Question Answering with Retrieval-Augmented Generation (RAG) combines information retrieval and natural language generation to enhance large language models (LL...

Explore how OpenAI O1's advanced reasoning capabilities and reinforcement learning outperform GPT-4o in RAG accuracy, with benchmarks and cost analysis.

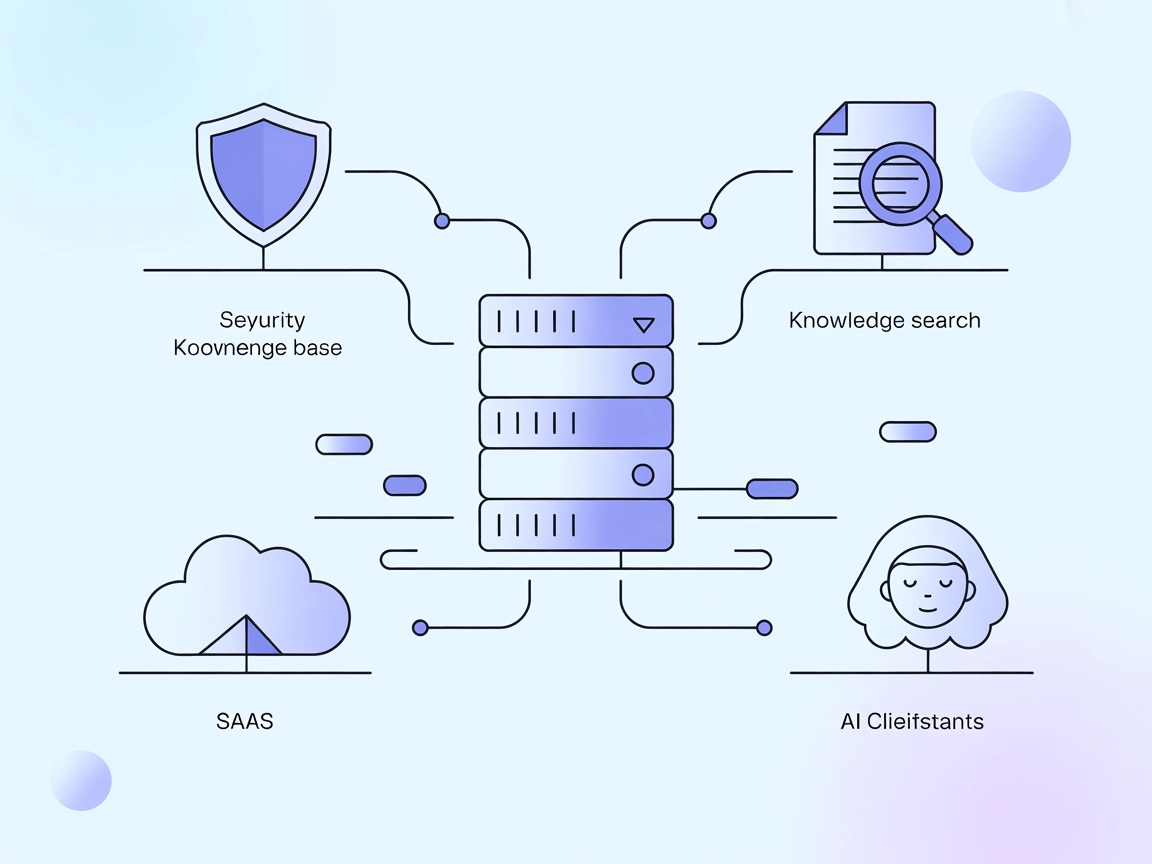

Retrieval-Augmented Generation (RAG) is an advanced AI framework that combines traditional information retrieval systems with generative large language models (...
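
The retrieve-then-generate loop behind RAG can be sketched in two steps: rank stored passages by cosine similarity to the query vector, then place the best matches in the prompt sent to the language model. This is a minimal sketch under loud assumptions: the three-dimensional vectors are hand-made toy values standing in for a real embedding model, and `retrieve` and `build_prompt` are hypothetical helpers, not any library's API. The LLM call itself is omitted.

```python
import math


def cosine(a: list[float], b: list[float]) -> float:
    # Cosine similarity; returns 0.0 if either vector has zero length.
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def retrieve(query_vec: list[float], index: list[tuple[str, list[float]]], k: int = 2) -> list[str]:
    """index holds (passage, vector) pairs; return the k most similar passages."""
    ranked = sorted(index, key=lambda item: cosine(query_vec, item[1]), reverse=True)
    return [passage for passage, _ in ranked[:k]]


def build_prompt(question: str, passages: list[str]) -> str:
    # Augment the question with the retrieved context before generation.
    context = "\n".join(f"- {p}" for p in passages)
    return f"Answer using only the context below.\n\nContext:\n{context}\n\nQuestion: {question}"


# Toy vectors (assumption): in practice these come from an embedding model.
index = [
    ("Paris is the capital of France.", [1.0, 0.0, 0.0]),
    ("The Nile flows through Egypt.", [0.0, 1.0, 0.0]),
    ("France borders Spain.", [0.8, 0.0, 0.6]),
]
top = retrieve([1.0, 0.0, 0.1], index, k=2)
print(build_prompt("What is the capital of France?", top))
```

Grounding the prompt in retrieved passages is what lets the model answer from current or private data rather than only from what it memorized during training.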

Discover what a retrieval pipeline is for chatbots, its components, use cases, and how Retrieval-Augmented Generation (RAG) and external data sources enable acc...

Discover the key differences between Retrieval-Augmented Generation (RAG) and Cache-Augmented Generation (CAG) in AI. Learn how RAG dynamically retrieves real-t...