Think MCP Server

Think MCP Server provides a structured reasoning tool for agentic AI workflows, enabling explicit thought logging, policy compliance, sequential decision-making...

Explore AI ethics guidelines: principles and frameworks ensuring the ethical development, deployment, and use of AI technologies. Learn about fairness, transpar...

AI Oversight Bodies are organizations tasked with monitoring, evaluating, and regulating AI development and deployment, ensuring responsible, ethical, and trans...

AI regulatory frameworks are structured guidelines and legal measures designed to govern the development, deployment, and use of artificial intelligence technol...

AI transparency is the practice of making the workings and decision-making processes of artificial intelligence systems comprehensible to stakeholders. Learn it...

Algorithmic transparency refers to the clarity and openness regarding the inner workings and decision-making processes of algorithms. It's crucial in AI and mac...

Benchmarking of AI models is the systematic evaluation and comparison of artificial intelligence models using standardized datasets, tasks, and performance metr...

Discover how the European AI Act impacts chatbots, detailing risk classifications, compliance requirements, deadlines, and the penalties for non-compliance to e...

Compliance reporting is a structured and systematic process that enables organizations to document and present evidence of their adherence to internal policies,...

AI Explainability refers to the ability to understand and interpret the decisions and predictions made by artificial intelligence systems. As AI models become m...

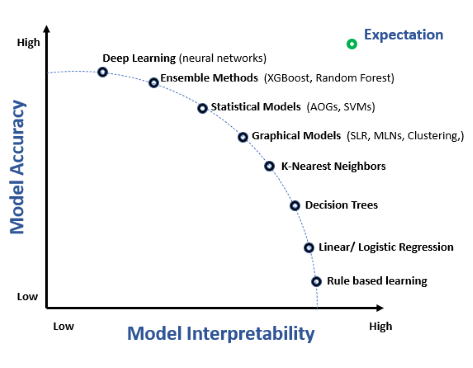

Model interpretability refers to the ability to understand, explain, and trust the predictions and decisions made by machine learning models. It is critical in ...

Discover FlowHunt's Multi-source AI Answer Generator—a powerful tool for accessing real-time, credible information from multiple forums and databases. Ideal for...

Discover the RIG Wikipedia Assistant, a tool designed for precise information retrieval from Wikipedia. Ideal for research and content creation, it provides wel...

Transparency in Artificial Intelligence (AI) refers to the openness and clarity with which AI systems operate, including their decision-making processes, algori...

Explainable AI (XAI) is a suite of methods and processes designed to make the outputs of AI models understandable to humans, fostering transparency, interpretab...